BK101

Knowledge Base

Computers

A Computer is a machine for performing calculations automatically. The word has also been used for a person who is an expert at calculation or at operating calculating machines. A computer can be instructed to carry out sequences of arithmetic or logical operations automatically via computer programming. Memory and Processing.

"A Keen Impassioned Beauty of a Great Machine" - "A Bicycle for the Brain"

Hardware - IC's - Code - Software - OS - VPN - Servers - Networks - Super Computers

You can learn several different subjects at the same time when you're learning about computers. You can learn Problem Solving, Math, Languages, Communication, Technology, Electricity, Physics and Intelligence, just to name a few.

Basic Computer Skills - Computer Literacy - History of Computers - Films about Computers

Computer Science is the study of the theory, experimentation, and engineering that form the basis for the design and use of computers. It is the scientific and practical approach to computation and its applications and the systematic study of the feasibility, structure, expression, and mechanization of the methodical procedures (or algorithms) that underlie the acquisition, representation, processing, storage, communication of, and access to information. An alternate, more succinct definition of computer science is the study of automating algorithmic processes that scale. A computer scientist specializes in the theory of computation and the design of computational systems. Pioneers in Computer Science (wiki).

Computer Science Books (wiki) - List of Computer Books (wiki)

Theoretical Computer Science is a division or subset of general computer science and mathematics that focuses on more abstract or mathematical aspects of computing and includes the theory of computation, which is the branch that deals with how efficiently problems can be solved on a model of computation, using an algorithm. The field is divided into three major branches: automata theory and language, computability theory, and computational complexity theory, which are linked by the question: "What are the fundamental capabilities and limitations of computers?".

Doctor of Computer Science is a doctorate in Computer Science by dissertation or multiple research papers.

Computer Engineering is a discipline that integrates several fields of electrical engineering and computer science required to develop computer hardware and software. Computer engineers usually have training in electronic engineering (or electrical engineering), software design, and hardware–software integration instead of only software engineering or electronic engineering. Computer engineers are involved in many hardware and software aspects of computing, from the design of individual microcontrollers, microprocessors, personal computers, and supercomputers, to circuit design. This field of engineering not only focuses on how computer systems themselves work, but also how they integrate into the larger picture. Usual tasks involving computer engineers include writing software and firmware for embedded microcontrollers, designing VLSI chips, designing analog sensors, designing mixed signal circuit boards, and designing operating systems. Computer engineers are also suited for robotics research, which relies heavily on using digital systems to control and monitor electrical systems like motors, communications, and sensors. In many institutions, computer engineering students are allowed to choose areas of in-depth study in their junior and senior year, because the full breadth of knowledge used in the design and application of computers is beyond the scope of an undergraduate degree. Other institutions may require engineering students to complete one or two years of General Engineering before declaring computer engineering as their primary focus. Telephone.

Computer Architecture is a set of rules and methods that describe the functionality, organization, and implementation of computer systems. Some definitions of architecture define it as describing the capabilities and programming model of a computer but not a particular implementation. In other definitions computer architecture involves instruction set architecture design, microarchitecture design, logic design, and implementation. How Does a Computer Work - Help Fixing PC's.

Minimalism in computing refers to the application of minimalist philosophies and principles in the design and use of hardware and software. Minimalism, in this sense, means designing systems that use the least hardware and software resources possible.

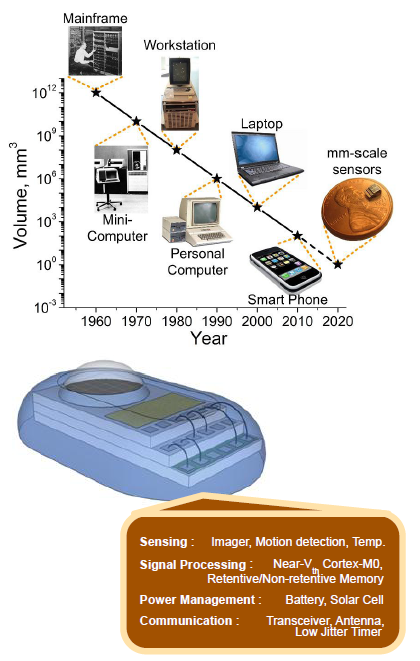

Computer Types

General Purpose Computer is a computer that is designed to be able to carry out many different tasks. Desktop computers and laptops are examples of general purpose computers. Among other things, they can be used to access the internet.

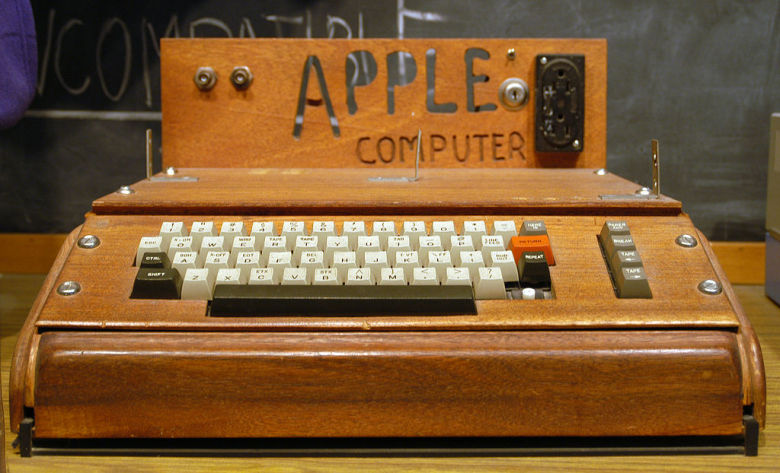

Personal Computer is a multi-purpose computer whose size, capabilities, and price make it feasible for individual use. Personal computers are intended to be operated directly by an end user, rather than by a computer expert or technician. Unlike large, costly minicomputers and mainframes, personal computers are not time-shared by many people at the same time.

First Computers - History of Computers - Super Computers - Artificial Intelligence

Smartphones - Remote Communication - Great Inventions - Technology Advancement

Portable Computer was a computer designed to be easily moved from one place to another and included a display and keyboard. Operating Systems.

Laptop is a small portable personal computer with a "clamshell" form factor, typically having a thin LCD or LED computer screen mounted on the inside of the upper lid of the clamshell and an alphanumeric keyboard on the inside of the lower lid. The clamshell is opened up to use the computer. Laptops are folded shut for transportation, and thus are suitable for mobile use. Its name comes from lap, as it was deemed to be placed on a person's lap when being used. Although originally there was a distinction between laptops and notebooks (the former being bigger and heavier than the latter), as of 2014, there is often no longer any difference. Laptops are commonly used in a variety of settings, such as at work, in education, for playing games, Internet surfing, for personal multimedia, and general home computer use. Laptops combine all the input/output components and capabilities of a desktop computer, including the display screen, small speakers, a keyboard, hard disk drive, optical disc drive, pointing devices (such as a touchpad or trackpad), a processor, and memory into a single unit. Most modern laptops feature integrated webcams and built-in microphones, while many also have touchscreens. Laptops can be powered either from an internal battery or by an external power supply from an AC adapter. Hardware specifications, such as the processor speed and memory capacity, significantly vary between different types, makes, models and price points. Design elements, form factor and construction can also vary significantly between models depending on intended use. Examples of specialized models of laptops include rugged notebooks for use in construction or military applications, as well as low production cost laptops such as those from the One Laptop per Child (OLPC) organization, which incorporate features like solar charging and semi-flexible components not found on most laptop computers. 
Portable computers, which later developed into modern laptops, were originally considered to be a small niche market, mostly for specialized field applications, such as in the military, for accountants, or for traveling sales representatives. As the portable computers evolved into the modern laptop, they became widely used for a variety of purposes.

Tablet Computer is a mobile device, typically with a mobile operating system, touchscreen display, processing circuitry, and a rechargeable battery in a single thin, flat package. Tablets, being computers, do what other personal computers do, but lack some input/output (I/O) abilities that others have. Modern tablets largely resemble modern Smartphones, the only differences being that tablets are relatively larger than smartphones, with screens 7 inches (18 cm) or larger, measured diagonally, and may not support access to a cellular network.

Desktop Computer is a personal computer designed for regular use at a single location on or near a desk or table due to its size and power requirements. The most common configuration has a case that houses the power supply, motherboard (a printed circuit board with a microprocessor as the central processing unit (CPU), memory, bus, and other electronic components), disk storage (usually one or more hard disk drives, optical disc drives, and in early models a floppy disk drive); a keyboard and mouse for input; and a computer monitor, speakers, and, often, a printer for output. The case may be oriented horizontally or vertically and placed either underneath, beside, or on top of a desk.

Workstation is a special computer designed for technical or scientific applications. Intended primarily to be used by one person at a time, they are commonly connected to a local area network and run multi-user operating systems. The term workstation has also been used loosely to refer to everything from a mainframe computer terminal to a PC connected to a network, but the most common form refers to the group of hardware offered by several current and defunct companies such as Sun Microsystems, Silicon Graphics, Apollo Computer, DEC, HP, NeXT and IBM which opened the door for the 3D graphics animation revolution of the late 1990s.

Industrial PC is a computer intended for industrial purposes (production of goods and services), with a form factor between a nettop and a server rack. Industrial PCs have higher dependability and precision standards, and are generally more expensive than consumer electronics. They often use complex instruction sets, such as x86, where reduced instruction sets such as ARM would otherwise be used. Controllers.

Computing Types

Bio-Inspired Computing is a field of study that loosely knits together subfields related to the topics of connectionism, social behaviour and emergence. It is often closely related to the field of artificial intelligence, as many of its pursuits can be linked to machine learning. It relies heavily on the fields of biology, computer science and mathematics. Briefly put, it is the use of computers to model the living phenomena, and simultaneously the study of life to improve the usage of computers. Biologically inspired computing is a major subset of natural computation.

Biological Computation can refer to any of the following: the study of the computations performed by natural biota, including the subject matter of systems biology; the design of algorithms inspired by the computational methods of biota; the design and engineering of manufactured computational devices using synthetic biology components; and computer methods for the analysis of biological data, elsewhere called computational biology. When biological computation refers to using biology to build computers, it is a subfield of computer science and is distinct from the interdisciplinary science of bioinformatics, which simply uses computers to better understand biology.

Computational Biology involves the development and application of data-analytical and theoretical methods, mathematical modeling and computational simulation techniques to the study of biological, behavioral, and social systems. The field is broadly defined and includes foundations in computer science, applied mathematics, animation, statistics, biochemistry, chemistry, biophysics, molecular biology, genetics, genomics, ecology, evolution, anatomy, neuroscience, and visualization. Computational biology is different from biological computation, which is a subfield of computer science and computer engineering using bioengineering and biology to build computers, but is similar to bioinformatics, which is an interdisciplinary science using computers to store and process biological data. Information

Model of Computation is the definition of the set of allowable operations used in computation and their respective costs. It is used for measuring the complexity of an algorithm in execution time and or memory space: by assuming a certain model of computation, it is possible to analyze the computational resources required or to discuss the limitations of algorithms or computers.

Computer Simulation - Virtual Reality - Turing Machine

Ubiquitous Computing is a concept in software engineering and computer science where computing is made to appear anytime and everywhere. In contrast to desktop computing, ubiquitous computing can occur using any device, in any location, and in any format. A user interacts with the computer, which can exist in many different forms, including laptop computers, tablets and terminals in everyday objects such as a fridge or a pair of glasses. The underlying technologies to support ubiquitous computing include Internet, advanced middleware, operating system, mobile code, sensors, microprocessors, new I/O and user interfaces, networks, mobile protocols, location and positioning and new materials.

Parallel Computing is a type of computation in which many calculations or the execution of processes are carried out simultaneously. Large problems can often be divided into smaller ones, which can then be solved at the same time. There are several different forms of parallel computing: bit-level, instruction-level, data, and task parallelism. Parallelism has been employed for many years, mainly in high-performance computing, but interest in it has grown lately due to the physical constraints preventing frequency scaling. As power consumption (and consequently heat generation) by computers has become a concern in recent years, parallel computing has become the dominant paradigm in computer architecture, mainly in the form of multi-core processors. Working Together - Multitasking.
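The idea of dividing a large problem into smaller ones that are solved at the same time can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the helper names `chunked` and `parallel_sum` are hypothetical, and a thread pool is used purely to show the structure (in standard CPython, threads will not speed up arithmetic like this).

```python
from concurrent.futures import ThreadPoolExecutor


def chunked(values, n_chunks):
    """Split a list into at most n_chunks contiguous pieces (hypothetical helper)."""
    size = max(1, -(-len(values) // n_chunks))  # ceiling division
    return [values[i:i + size] for i in range(0, len(values), size)]


def parallel_sum(values, n_workers=4):
    """Sum each chunk in its own worker, then combine the partial sums."""
    chunks = chunked(values, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partial_sums = list(pool.map(sum, chunks))
    return sum(partial_sums)


total = parallel_sum(list(range(1, 101)))
print(total)  # 5050
```

Every worker runs the same operation on a different slice of the data, which is the data-parallel form mentioned above.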

Task Parallelism is a form of parallelization of computer code across multiple processors in parallel computing environments. Task parallelism focuses on distributing tasks, which are performed concurrently by processes or threads, across different processors. It contrasts with data parallelism, another form of parallelism, in which the same operation is applied to different pieces of the data.
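A minimal sketch of task parallelism, assuming a thread pool stands in for the separate processors: three different tasks are submitted at once and run over the same data. The task names and the sample data are made up for illustration.

```python
from concurrent.futures import ThreadPoolExecutor

data = [3, 1, 4, 1, 5, 9, 2, 6]

# Three different tasks; each one is submitted as its own unit of work.
tasks = {"minimum": min, "maximum": max, "total": sum}

with ThreadPoolExecutor(max_workers=len(tasks)) as pool:
    futures = {name: pool.submit(func, data) for name, func in tasks.items()}
    results = {name: future.result() for name, future in futures.items()}

print(results)  # {'minimum': 1, 'maximum': 9, 'total': 31}
```

Here the work is split by task rather than by slices of the data.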

Human Brain Parallel Processing

Human Centered Computing studies the design, development, and deployment of mixed-initiative human-computer systems. It emerged from the convergence of multiple disciplines that are concerned both with understanding human beings and with the design of computational artifacts. Human-centered computing is closely related to human-computer interaction and information science. Human-centered computing is usually concerned with systems and practices of technology use, while human-computer interaction is more focused on ergonomics and the usability of computing artifacts, and information science is focused on practices surrounding the collection, manipulation, and use of information.

Distributed Computing is a model in which components located on networked computers communicate and coordinate their actions by passing messages. The components interact with each other in order to achieve a common goal. Distributed Workforce.
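Message passing can be illustrated on a single machine. In the sketch below, two threads and two queues stand in for networked computers and the links between them; this is an assumption made for brevity, since a real distributed system would use network sockets or a messaging library. The `worker` function and the `None` stop signal are hypothetical choices.

```python
import queue
import threading


def worker(inbox, outbox):
    """A component that squares each number it receives until told to stop."""
    while True:
        message = inbox.get()
        if message is None:  # sentinel message: no more work
            break
        outbox.put(message * message)


requests = queue.Queue()
replies = queue.Queue()
node = threading.Thread(target=worker, args=(requests, replies))
node.start()

# The coordinating component only sends messages; it never calls the worker directly.
for n in [1, 2, 3]:
    requests.put(n)
requests.put(None)
node.join()

squares = [replies.get() for _ in range(3)]
print(squares)  # [1, 4, 9]
```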

Cloud Computing is a type of Internet-based computing that provides shared computer processing resources and data to computers and other devices on demand. It is a model for enabling ubiquitous, on-demand access to a shared pool of configurable computing resources (e.g., computer networks, servers, storage, applications and services), which can be rapidly provisioned and released with minimal management effort. Cloud computing and storage solutions provide users and enterprises with various capabilities to store and process their data in either privately owned, or third-party data centers that may be located far from the user–ranging in distance from across a city to across the world. Cloud computing relies on sharing of resources to achieve coherence and economy of scale, similar to a utility (like the electricity grid) over an electricity network. Cloud Computing Tools.

Reversible Computing is a model of computing where the computational process to some extent is reversible, i.e., time-invertible. In a computational model that uses deterministic transitions from one state of the abstract machine to another, a necessary condition for reversibility is that the relation of the mapping from states to their successors must be one-to-one. Reversible computing is generally considered an unconventional form of computing.
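The one-to-one condition can be checked directly for small state spaces. The sketch below compares two example transitions on two-bit states: a controlled-NOT, which keeps the first bit and XORs it into the second, and a transition that overwrites the second bit with the AND of both. The function names are hypothetical and chosen only for this illustration.

```python
from itertools import product


def is_reversible(step, states):
    """True if no two states share a successor, i.e. the mapping is one-to-one."""
    successors = [step(state) for state in states]
    return len(set(successors)) == len(successors)


def cnot(state):
    """Keep bit a and replace bit b with a XOR b."""
    a, b = state
    return (a, a ^ b)


def and_overwrite(state):
    """Keep bit a and replace bit b with a AND b (information is lost)."""
    a, b = state
    return (a, a & b)


two_bit_states = list(product((0, 1), repeat=2))
print(is_reversible(cnot, two_bit_states))           # True
print(is_reversible(and_overwrite, two_bit_states))  # False
```

The second transition sends both (0, 0) and (0, 1) to (0, 0), so the previous state cannot be recovered from the result.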

Adaptable - Compatible

Natural Computing is a terminology introduced to encompass three classes of methods: 1) those that take inspiration from nature for the development of novel problem-solving techniques; 2) those that are based on the use of computers to synthesize natural phenomena; and 3) those that employ natural materials (e.g., molecules) to compute. The main fields of research that compose these three branches are artificial neural networks, evolutionary algorithms, swarm intelligence, artificial immune systems, fractal geometry, artificial life, DNA computing, and quantum computing, among others.

DNA Computing is a branch of computing which uses DNA, biochemistry, and molecular biology hardware, instead of the traditional silicon-based computer technologies. Research and development in this area concerns theory, experiments, and applications of DNA computing. The term "molectronics" has sometimes been used, but this term had already been used for an earlier technology, a then-unsuccessful rival of the first integrated circuits; this term has also been used more generally, for molecular-scale electronic technology.

Quantum Computer (super computers)

UW engineers borrow from electronics to build largest circuits to date in living eukaryotic cells. Living cells must constantly process information to keep track of the changing world around them and arrive at an appropriate response.

Hardware

Hardware is the collection of physical components that constitute a computer system. Computer hardware is the physical parts or components of a computer, such as monitor, keyboard, computer data storage, hard disk drive (HDD), graphic card, sound card, memory (RAM), motherboard, and so on, all of which are tangible physical objects. By contrast, software is instructions that can be stored and run by hardware. Hardware is directed by the software to execute any command or instruction. A combination of hardware and software forms a usable computing system.

Computer Hardware includes the physical, tangible parts or components of a computer, such as the cabinet, central processing unit, monitor, keyboard, computer data storage, graphic card, sound card, speakers and motherboard. By contrast, software is instructions that can be stored and run by hardware. Hardware is so-termed because it is "hard" or rigid with respect to changes or modifications; whereas software is "soft" because it is easy to update or change. Intermediate between software and hardware is "firmware", which is software that is strongly coupled to the particular hardware of a computer system and thus the most difficult to change but also among the most stable with respect to consistency of interface. The progression from levels of "hardness" to "softness" in computer systems parallels a progression of layers of abstraction in computing. Hardware is typically directed by the software to execute any command or instruction. A combination of hardware and software forms a usable computing system, although other systems exist with only hardware components.

Hardware Architecture refers to the identification of a system's physical components and their interrelationships. This description, often called a hardware design model, allows hardware designers to understand how their components fit into a system architecture and provides to software component designers important information needed for software development and integration. Clear definition of a hardware architecture allows the various traditional engineering disciplines (e.g., electrical and mechanical engineering) to work more effectively together to develop and manufacture new machines, devices and components. Processors

Memory

Computer Memory refers to the computer hardware devices used to store information for immediate use in a computer; it is synonymous with the term "primary storage". Computer memory operates at a high speed, for example random-access memory (RAM), as a distinction from storage that provides slow-to-access program and data storage but offers higher capacities. If needed, contents of the computer memory can be transferred to secondary storage, through a memory management technique called "virtual memory". An archaic synonym for memory is store. The term "memory", meaning "primary storage" or "main memory", is often associated with addressable semiconductor memory, i.e. integrated circuits consisting of silicon-based transistors, used for example as primary storage but also other purposes in computers and other digital electronic devices. There are two main kinds of semiconductor memory, volatile and non-volatile. Examples of non-volatile memory are flash memory (used as secondary memory) and ROM, PROM, EPROM and EEPROM memory (used for storing firmware such as BIOS). Examples of volatile memory are primary storage, which is typically dynamic random-access memory (DRAM), and fast CPU cache memory, which is typically static random-access memory (SRAM) that is fast but energy-consuming, offering lower memory areal density than DRAM. Most semiconductor memory is organized into memory cells or bistable flip-flops, each storing one bit (0 or 1). Flash memory organization includes both one bit per memory cell and multiple bits per cell (called MLC, Multi-Level Cell). The memory cells are grouped into words of fixed word length, for example 1, 2, 4, 8, 16, 32, 64 or 128 bits. Each word can be accessed by a binary address of N bits, making it possible to store 2 raised to the power N words in the memory. This implies that processor registers normally are not considered as memory, since they only store one word and do not include an addressing mechanism. Typical secondary storage devices are hard disk drives and solid-state drives. Magnetic Memory (memristors).
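The relationship between address width and capacity is plain arithmetic, sketched below. The function names are hypothetical; the only rule used is the one stated above, that N address bits select one of 2 to the power N words.

```python
def addressable_words(address_bits):
    """Number of distinct words an N-bit binary address can select."""
    return 2 ** address_bits


def capacity_bytes(address_bits, word_length_bits):
    """Total capacity in bytes for a given address width and word length."""
    return addressable_words(address_bits) * word_length_bits // 8


print(addressable_words(16))   # 65536 words
print(capacity_bytes(16, 8))   # 65536 bytes (64 KiB) with 8-bit words
print(capacity_bytes(32, 8))   # 4294967296 bytes (4 GiB) with 8-bit words
```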

Memory Cell in computing is the fundamental building block of computer memory. The memory cell is an electronic circuit that stores one bit of binary information and it must be set to store a logic 1 (high voltage level) and reset to store a logic 0 (low voltage level). Its value is maintained/stored until it is changed by the set/reset process. The value in the memory cell can be accessed by reading it. Brain Memory - Data Storage - Knowledge Preservation.
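The set, reset and read behaviour described above can be modelled with a very small class. This is a software analogy only, with hypothetical names; a real memory cell is an electronic circuit, not an object.

```python
class MemoryCell:
    """Software model of a one-bit cell with set, reset and read operations."""

    def __init__(self):
        self.value = 0  # assume the cell starts out storing logic 0

    def set(self):
        """Store a logic 1."""
        self.value = 1

    def reset(self):
        """Store a logic 0."""
        self.value = 0

    def read(self):
        """Return the stored bit without changing it."""
        return self.value


cell = MemoryCell()
cell.set()
first_read = cell.read()   # 1, and the value is kept until changed
cell.reset()
second_read = cell.read()  # 0
```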

Random-Access Memory is a form of computer data storage that holds the data and program instructions currently in use so that the processor can reach them quickly. A RAM device allows data items to be read or written in almost the same amount of time irrespective of the physical location of the data inside the memory.

Dynamic Random-Access Memory is a type of random access semiconductor memory that stores each bit of data in a separate tiny capacitor within an integrated circuit. The capacitor can either be charged or discharged; these two states are taken to represent the two values of a bit, conventionally called 0 and 1. The electric charge on the capacitors slowly leaks off, so without intervention the data on the chip would soon be lost. To prevent this, DRAM requires an external memory refresh circuit which periodically rewrites the data in the capacitors, restoring them to their original charge. This refresh process is the defining characteristic of dynamic random-access memory, in contrast to static random-access memory (SRAM) which does not require data to be refreshed. Unlike flash memory, DRAM is volatile memory (vs. non-volatile memory), since it loses its data quickly when power is removed. However, DRAM does exhibit limited data remanence. DRAM is widely used in digital electronics where low-cost and high-capacity memory is required. One of the largest applications for DRAM is the main memory (colloquially called the "RAM") in modern computers and graphics cards (where the "main memory" is called the graphics memory). It is also used in many portable devices and video game consoles. In contrast, SRAM, which is faster and more expensive than DRAM, is typically used where speed is of greater concern than cost and size, such as the cache memories in processors. Due to its need of a system to perform refreshing, DRAM has more complicated circuitry and timing requirements than SRAM, but it is much more widely used. The advantage of DRAM is the structural simplicity of its memory cells: only one transistor and a capacitor are required per bit, compared to four or six transistors in SRAM. This allows DRAM to reach very high densities, making DRAM much cheaper per bit. The transistors and capacitors used are extremely small; billions can fit on a single memory chip. 
Due to the dynamic nature of its memory cells, DRAM consumes relatively large amounts of power, and there are different ways of managing that power consumption. DRAM had a 47% increase in price-per-bit in 2017, the largest jump in 30 years since the 45% jump in 1988, while in recent years the price has been going down.
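Why refresh is needed can be shown with a toy model of one leaky cell. All numbers here are assumptions chosen for illustration (a cell that keeps 80% of its charge per time step and reads as 1 above half charge); they are not real DRAM parameters, and the class and function names are hypothetical.

```python
class DramCell:
    """Toy model of a DRAM cell: a capacitor whose charge leaks over time."""

    LEAK = 0.8       # assumed fraction of charge retained per time step
    THRESHOLD = 0.5  # assumed charge level above which the cell reads as 1

    def __init__(self, bit):
        self.charge = 1.0 if bit else 0.0

    def tick(self):
        """One time step passes and some charge leaks away."""
        self.charge *= self.LEAK

    def read(self):
        return 1 if self.charge > self.THRESHOLD else 0

    def refresh(self):
        """Read the bit and rewrite it at full strength."""
        self.charge = 1.0 if self.read() else 0.0


def stored_bit_after(steps, refresh_every=None):
    """Store a 1, let time pass, optionally refresh periodically, then read."""
    cell = DramCell(1)
    for t in range(1, steps + 1):
        cell.tick()
        if refresh_every and t % refresh_every == 0:
            cell.refresh()
    return cell.read()


print(stored_bit_after(10))                    # 0: the charge leaked away
print(stored_bit_after(10, refresh_every=2))   # 1: refreshed in time
print(stored_bit_after(10, refresh_every=4))   # 0: refreshed too late
```

In this model a refresh only helps if it happens before the charge falls below the read threshold, which is why the refresh interval matters.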

Universal Memory refers to a hypothetical computer data storage device combining the cost benefits of DRAM, the speed of SRAM, the non-volatility of flash memory along with infinite durability. Such a device, if it ever becomes possible to develop, would have a far-reaching impact on the computer market. Computers for most of their recent history have depended on several different data storage technologies simultaneously as part of their operation. Each one operates at a level in the memory hierarchy where another would be unsuitable. A personal computer might include a few megabytes of fast but volatile and expensive SRAM as the CPU cache, several gigabytes of slower DRAM for program memory, and multiple hundreds of gigabytes of the slow but non-volatile flash memory or a few terabytes of "spinning platters" hard disk drive for long term storage.

Universal Memory can record or delete data using 100 times less energy than Dynamic Random Access Memory (DRAM) and flash drives. It promises to transform daily life with its ultra-low energy consumption, allowing computers which do not need to boot up and which could sleep between key strokes. While writing data to DRAM is fast and low-energy, the data is volatile and must be continuously 'refreshed' to avoid it being lost: this is clearly inconvenient and inefficient. Flash stores data robustly, but writing and erasing is slow, energy intensive and deteriorates it, making it unsuitable for working memory.

Read-Only Memory is a type of non-volatile memory used in computers and other electronic devices. Data stored in ROM can only be modified slowly, with difficulty, or not at all, so it is mainly used to store firmware (software that is closely tied to specific hardware, and unlikely to need frequent updates) or application software in plug-in cartridges. OS.

Volatile Memory is computer memory that requires power to maintain the stored information. It retains its contents only while powered on; when the power is interrupted, the stored data is quickly lost, so nothing survives a restart. Short Term Memory.

Non-Volatile Memory is a type of computer memory that can retrieve stored information even after having been power cycled (turned off and back on). The opposite of non-volatile memory is Volatile Memory which needs constant power in order to prevent data from being erased. Memory Error Correction.

Conductive Bridging Random Access Memory (CBRAM) is a memory technology that stores data in a non-volatile or near-permanent way, with the aim of reducing the size and power consumption of components.

Programmable Metallization Cell is a non-volatile computer memory developed as a possible replacement for the widely used flash memory, promising a combination of longer lifetimes, lower power, and better memory density.

Flash Memory is a non-volatile computer storage medium that can be electrically erased and reprogrammed. Jump Drive.

Multi-Level Cell is a memory element capable of storing more than a single bit of information, compared to a single-level cell (SLC), which can store only one bit per memory element. Triple-level cells (TLC) and quad-level cells (QLC) are versions of MLC memory that store 3 and 4 bits per cell, respectively. By convention, the name "multi-level cell" is sometimes used specifically for the cell that stores two bits, which can be confusing. Overall, the memories are named as follows: SLC (1 bit per cell), the fastest and most reliable but the most expensive; MLC (2 bits per cell); TLC (3 bits per cell); and QLC (4 bits per cell), the slowest and least expensive. Examples of MLC memories are MLC NAND flash, MLC PCM (phase change memory), etc. For example, in SLC NAND flash technology, each cell can exist in one of two states, storing one bit of information per cell. Most MLC NAND flash memory has four possible states per cell, so it can store two bits of information per cell. This reduces the amount of margin separating the states and results in the possibility of more errors. Multi-level cells that are designed for low error rates are sometimes called enterprise MLC (eMLC). There are tools for modeling the area, latency and energy of MLC memories.
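The link between bits per cell, number of states and shrinking margin can be worked out numerically. The sketch below assumes, purely for illustration, that the states are spread evenly across a voltage window normalized to 1.0; real devices do not necessarily space their levels this way, and the function names are hypothetical.

```python
CELL_TYPES = {"SLC": 1, "MLC": 2, "TLC": 3, "QLC": 4}  # bits per cell


def states_per_cell(bits):
    """A cell storing `bits` bits must distinguish 2**bits states."""
    return 2 ** bits


def relative_margin(bits):
    """Gap between neighbouring states, assuming evenly spaced levels
    across a window normalized to 1.0 (an illustrative assumption)."""
    return 1.0 / (states_per_cell(bits) - 1)


for name, bits in CELL_TYPES.items():
    print(name, states_per_cell(bits), round(relative_margin(bits), 3))
```

Under this assumption the gap between neighbouring states drops from the full window for SLC to one fifteenth of it for QLC, which matches the qualitative point that more bits per cell leave less margin for error.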

Solid-State Storage is a type of non-volatile computer storage that stores and retrieves digital information using only electronic circuits, without any involvement of moving mechanical parts. This differs fundamentally from the traditional electromechanical storage paradigm, which accesses data using rotating or linearly moving media coated with magnetic material.

Solid-State Drive is a solid-state storage device that uses integrated circuit assemblies as memory to store data persistently. SSD technology primarily uses electronic interfaces compatible with traditional block input/output (I/O) hard disk drives (HDDs), which permit simple replacements in common applications. New I/O interfaces like SATA Express and M.2 have been designed to address specific requirements of the SSD technology. SSDs have no moving mechanical components. This distinguishes them from traditional electromechanical drives such as hard disk drives (HDDs) or floppy disks, which contain spinning disks and movable read/write heads. Compared with electromechanical drives, SSDs are typically more resistant to physical shock, run silently, and have quicker access time and lower latency. However, while the price of SSDs has continued to decline over time, SSDs were (as of 2018) still more expensive per unit of storage than HDDs and were expected to remain so into the next decade.

Solid State Drive (amazon).

Scientists perfect technique to boost capacity of computer storage a thousand-fold. New technique leads to world’s densest solid-state memory that can store 45 million songs on the surface of a quarter.

Hard Disk Drive is a data storage device that uses magnetic storage to store and retrieve digital information using one or more rigid rapidly rotating disks (platters) coated with magnetic material. The platters are paired with magnetic heads, usually arranged on a moving actuator arm, which read and write data to the platter surfaces. Data is accessed in a random-access manner, meaning that individual blocks of data can be stored or retrieved in any order and not only sequentially. HDDs are a type of non-volatile storage, retaining stored data even when powered off. Storage Types.

NAND Gate is a logic gate which produces an output which is false only if all its inputs are true; thus its output is complement to that of the AND gate. A LOW (0) output results only if both the inputs to the gate are HIGH (1); if one or both inputs are LOW (0), a HIGH (1) output results. It is made using transistors and junction diodes.
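The truth table described above can be reproduced with a short Python sketch. The helper names `not_`, `and_` and `or_` are hypothetical and are included only to illustrate the well-known property that other gates can be built from NAND alone.

```python
def nand(a: bool, b: bool) -> bool:
    """Output is False only when both inputs are True."""
    return not (a and b)

# Other gates expressed purely in terms of NAND (illustrative helpers).
def not_(a: bool) -> bool:
    return nand(a, a)

def and_(a: bool, b: bool) -> bool:
    return not_(nand(a, b))

def or_(a: bool, b: bool) -> bool:
    return nand(not_(a), not_(b))

# Print the NAND truth table as 0/1 values.
for a in (False, True):
    for b in (False, True):
        print(int(a), int(b), "->", int(nand(a, b)))
```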

Floating-gate MOSFET is a field-effect transistor, whose structure is similar to a conventional MOSFET. The gate of the FGMOS is electrically isolated, creating a floating node in DC, and a number of secondary gates or inputs are deposited above the floating gate (FG) and are electrically isolated from it. These inputs are only capacitively connected to the FG. Since the FG is completely surrounded by highly resistive material, the charge contained in it remains unchanged for long periods of time. Usually Fowler-Nordheim tunneling and hot-carrier injection mechanisms are used to modify the amount of charge stored in the FG.

Field-effect transistor is a transistor that uses an electric field to control the electrical behaviour of the device. FETs are also known as unipolar transistors since they involve single-carrier-type operation. Many different implementations of field effect transistors exist. Field effect transistors generally display very high input impedance at low frequencies. The conductivity between the drain and source terminals is controlled by an electric field in the device, which is generated by the voltage difference between the body and the gate of the device.

Molecule that works as Flash Storage - Macronix

EEPROM stands for electrically erasable programmable read-only memory and is a type of non-volatile memory used in computers and other electronic devices to store relatively small amounts of data but allowing individual bytes to be erased and reprogrammed.

Computer Data Storage is a technology consisting of computer components and recording media that are used to retain digital data. It is a core function and fundamental component of computers. Knowledge Preservation.

Memory-Mapped File is a segment of virtual memory that has been assigned a direct byte-for-byte correlation with some portion of a file or file-like resource. This resource is typically a file that is physically present on disk, but can also be a device, shared memory object, or other resource that the operating system can reference through a file descriptor. Once present, this correlation between the file and the memory space permits applications to treat the mapped portion as if it were primary memory.
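As a minimal sketch of treating a mapped file as if it were primary memory, the following uses Python's standard `mmap` module. The function name `patch_file_via_mmap` and the temporary demo file are assumptions made for illustration.

```python
import mmap
import os
import tempfile

def patch_file_via_mmap(path: str, offset: int, data: bytes) -> bytes:
    """Overwrite bytes in a file through a memory mapping; return the new contents."""
    with open(path, "r+b") as f:
        with mmap.mmap(f.fileno(), 0) as mapped:      # length 0 maps the whole file
            mapped[offset:offset + len(data)] = data  # slice assignment writes into the file
            mapped.flush()                            # push changes back to the file
            return bytes(mapped[:])

# Demo on a throwaway temporary file with hypothetical content.
fd, demo_path = tempfile.mkstemp()
os.write(fd, b"hello world")
os.close(fd)
result = patch_file_via_mmap(demo_path, 0, b"HELLO")
os.remove(demo_path)
print(result)
```

The slice assignment looks like an ordinary in-memory write, yet the change lands in the file, which is the byte-for-byte correlation the definition describes.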

Memory-Mapped I/O and port-mapped I/O are two complementary methods of performing input/output (I/O) between the CPU and peripheral devices in a computer. An alternative approach is using dedicated I/O processors, commonly known as channels on mainframe computers, which execute their own instructions.

Virtual Memory is a memory management technique that is implemented using both hardware and software. It maps memory addresses used by a program, called virtual addresses, into physical addresses in computer memory. Main storage, as seen by a process or task, appears as a contiguous address space or collection of contiguous segments. The operating system manages virtual address spaces and the assignment of real memory to virtual memory. Address translation hardware in the CPU, often referred to as a memory management unit or MMU, automatically translates virtual addresses to physical addresses. Software within the operating system may extend these capabilities to provide a virtual address space that can exceed the capacity of real memory and thus reference more memory than is physically present in the computer. The primary benefits of virtual memory include freeing applications from having to manage a shared memory space, increased security due to memory isolation, and being able to conceptually use more memory than might be physically available, using the technique of paging.
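The address translation step can be illustrated with a toy page table. This is a simplified sketch, not how any particular MMU is implemented: the 4096-byte page size and the contents of `page_table` are assumptions chosen for the example.

```python
PAGE_SIZE = 4096  # bytes; a common page size, assumed here

# Hypothetical page table: virtual page number -> physical frame number.
page_table = {0: 5, 1: 9, 2: 1}

def translate(virtual_address: int) -> int:
    """Split the address into page number and offset, then look up the frame."""
    page, offset = divmod(virtual_address, PAGE_SIZE)
    if page not in page_table:
        # Real hardware would raise a page fault for the OS to handle.
        raise LookupError(f"page fault at virtual page {page}")
    return page_table[page] * PAGE_SIZE + offset

print(hex(translate(0x1234)))  # virtual page 1, offset 0x234 -> frame 9
```

Because the program only ever sees virtual addresses, the operating system is free to place each page in any physical frame, or to load it on demand when a lookup misses.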

Persistent Memory is any method or apparatus for efficiently storing data structures such that they can continue to be accessed using memory instructions or memory APIs even after the end of the process that created or last modified them. Often confused with non-volatile random-access memory (NVRAM), persistent memory is instead more closely linked to the concept of persistence in its emphasis on program state that exists outside the fault zone of the process that created it. Efficient, memory-like access is the defining characteristic of persistent memory. It can be provided using microprocessor memory instructions, such as load and store. It can also be provided using APIs that implement remote direct memory access verbs, such as RDMA read and RDMA write. Other low-latency methods that allow byte-grain access to data also qualify. Persistent memory capabilities extend beyond non-volatility of stored bits. For instance, the loss of key metadata, such as page table entries or other constructs that translate virtual addresses to physical addresses, may render durable bits non-persistent. In this respect, persistent memory resembles more abstract forms of computer storage, such as file systems. In fact, almost all existing persistent memory technologies implement at least a basic file system that can be used for associating names or identifiers with stored extents, and at a minimum provide file system methods that can be used for naming and allocating such extents.

Magnetoresistive Random-Access Memory is a non-volatile random-access memory technology available today that began its development in the 1990s. Continued increases in density of existing memory technologies, notably flash RAM and DRAM, kept it in a niche role in the market, but its proponents believe that the advantages are so overwhelming that magnetoresistive RAM will eventually become a dominant type of memory, potentially even becoming a universal memory. It is currently in production by Everspin, and other companies including GlobalFoundries and Samsung have announced product plans. A recent, comprehensive review article on magnetoresistance and magnetic random access memories is available as an open access paper in Materials Today.

Computer Memory (amazon) - Internal Hard Drives (amazon)

Laptop Computers (amazon) - Desktop Computers (amazon)

Webopedia has definitions to words, phrases and abbreviations related to computing and information technology.

Molecular Memory is a term for data storage technologies that use molecular species as the data storage element, rather than e.g. circuits, magnetics, inorganic materials or physical shapes. The molecular component can be described as a molecular switch, and may perform this function by any of several mechanisms, including charge storage, photochromism, or changes in capacitance. In a perfect molecular memory device, each individual molecule contains a bit of data, leading to massive data capacity. However, practical devices are more likely to use large numbers of molecules for each bit, in the manner of 3D optical data storage (many examples of which can be considered molecular memory devices). The term "molecular memory" is most often used to mean very fast, electronically addressed solid-state data storage, as is the term computer memory. At present, molecular memories are still found only in laboratories.

Molecular Memory can be used to Increase the Memory Capacity of Hard Disks. Scientists have taken part in research where the first molecule capable of remembering the direction of a magnetic field above liquid nitrogen temperatures has been prepared and characterized. The results may be used in the future to massively increase the storage capacity of hard disks without increasing their physical size.

Researchers develop 128Mb STT-MRAM with world's fastest write speed for embedded memory. A research team has successfully developed 128Mb-density STT-MRAM (spin-transfer torque magnetoresistive random access memory) with a write speed of 14 ns for use in embedded memory applications, such as cache in IoT and AI. This is currently the world's fastest write speed for an embedded memory application with a density over 100Mb and will pave the way for the mass-production of large capacity STT-MRAM. STT-MRAM is capable of high-speed operation and consumes very little power as it retains data even when the power is off. Because of these features, STT-MRAM is gaining traction as the next-generation technology for applications such as embedded memory, main memory and logic. Three large semiconductor fabrication plants have announced that risk mass-production will begin in 2018. As memory is a vital component of computer systems, handheld devices and storage, its performance and reliability are of great importance for green energy solutions. The current capacity of STT-MRAM ranges between 8Mb and 40Mb, but to make STT-MRAM more practical, it is necessary to increase the memory density. The team at the Center for Innovative Integrated Electronic Systems (CIES) has increased the memory density of STT-MRAM by intensively developing STT-MRAMs in which magnetic tunnel junctions (MTJs) are integrated with CMOS. This will significantly reduce the power-consumption of embedded memory such as cache and eFlash memory. MTJs were miniaturized through a series of process developments. To reduce the memory size needed for higher-density STT-MRAM, the MTJs were formed directly on via holes, small openings that allow a conductive connection between the different layers of a semiconductor device. By using the reduced size memory cell, the research group has designed 128Mb-density STT-MRAM and fabricated a chip. In the fabricated chip, the researchers measured the write speed of a subarray. As a result, high-speed operation at 14 ns was demonstrated at a low power supply voltage of 1.2 V. To date, this is the fastest write speed operation in an STT-MRAM chip with a density over 100Mb in the world.

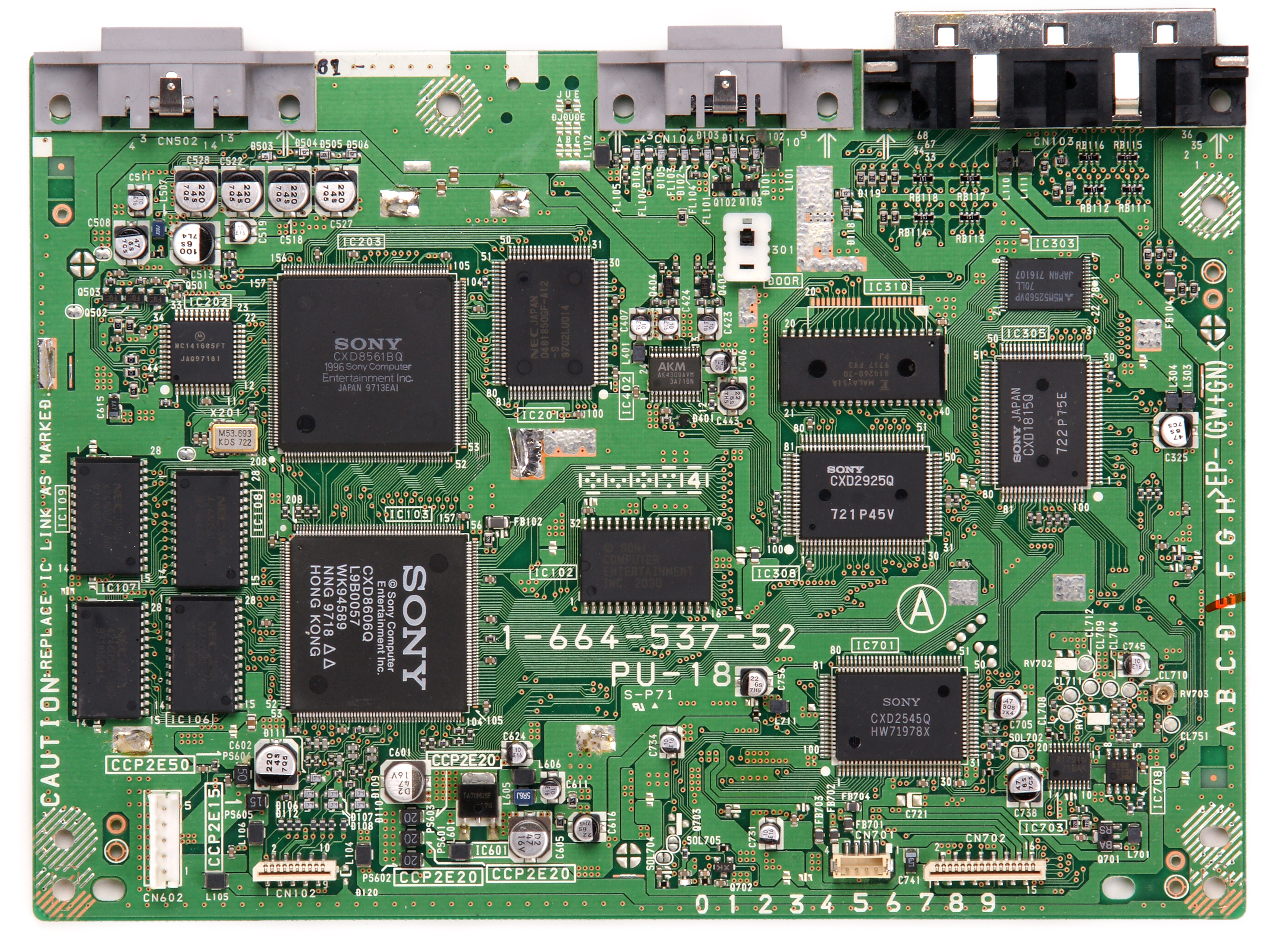

Motherboard - Main Circuit Board

Motherboard is the main printed circuit board (PCB) found in general purpose microcomputers and other expandable systems. It holds and allows communication between many of the crucial electronic components of a system, such as the central processing unit (CPU) and memory, and provides connectors for other peripherals. Unlike a backplane, a motherboard usually contains significant sub-systems such as the central processor, the chipset's input/output and memory controllers, interface connectors, and other components integrated for general purpose use. Motherboard specifically refers to a PCB with expansion capability and as the name suggests, this board is often referred to as the "mother" of all components attached to it, which often include peripherals, interface cards, and daughtercards: sound cards, video cards, network cards, hard drives, or other forms of persistent storage; TV tuner cards, cards providing extra USB or FireWire slots and a variety of other custom components. Similarly, the term mainboard is applied to devices with a single board and no additional expansions or capability, such as controlling boards in laser printers, televisions, washing machines and other embedded systems with limited expansion abilities.

Mother Board (image)

Circuit Board Components - Design (Circuit Boards)

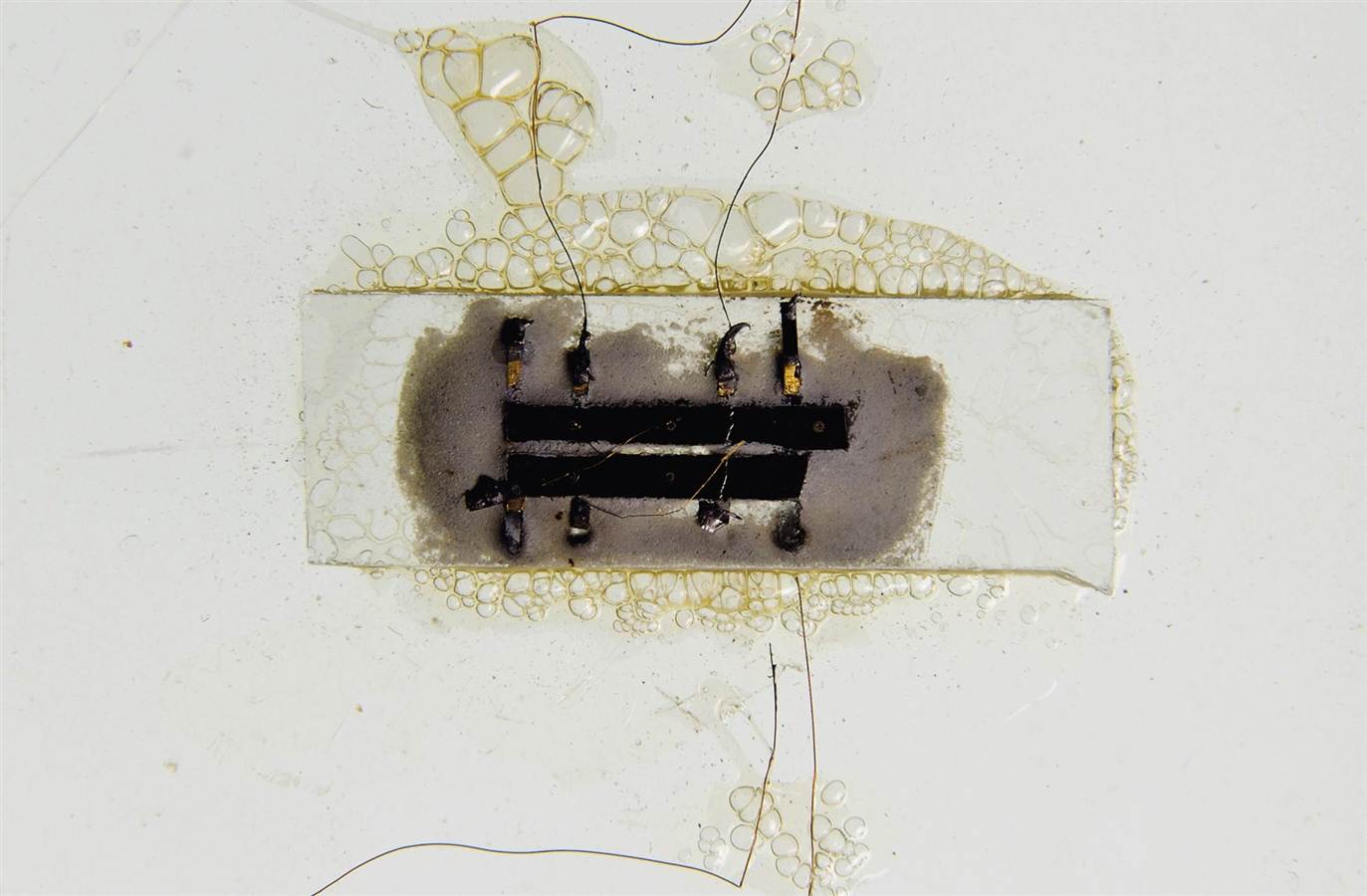

Integrated Circuit - I.C.

Printed Circuit Board mechanically supports and electrically connects electronic components or electrical components using conductive tracks, pads and other features etched from one or more sheet layers of copper laminated onto and/or between sheet layers of a non-conductive substrate. Components are generally soldered onto the PCB to both electrically connect and mechanically fasten them to it. Printed circuit boards are used in all but the simplest electronic products. They are also used in some electrical products, such as passive switch boxes.

Processor

Processor is the part of a computer or microprocessor chip that does most of the data processing, which is the procedure that interprets the input based on programmed instructions so that the information can be prepared for a particular purpose or output.

Microprocessor accepts digital or binary data as input, processes it according to instructions stored in its memory, and provides results as output. Transistors.

Central Processing Unit carries out the instructions of a computer program by performing the basic arithmetic, logical, control and input/output (I/O) operations specified by the instructions. Uses voltage control as a language. (Central Processing Unit is also known as the CPU for short).

Coprocessor is a computer processor used to supplement the functions of the primary processor or the CPU.

Multi-Core Processor can run multiple instructions at the same time, increasing overall speed for programs.

Multiprocessing is a computer system having two or more processing units (multiple processors) each sharing main memory and peripherals, in order to simultaneously process programs. It is the use of two or more central processing units (CPUs) within a single computer system. The term also refers to the ability of a system to support more than one processor or the ability to allocate tasks between them. There are many variations on this basic theme, and the definition of multiprocessing can vary with context, mostly as a function of how CPUs are defined (multiple cores on one die, multiple dies in one package, multiple packages in one system unit, etc.). Brain Processing.

Context Switch in computing is the process of storing the state of a process or of a thread, so that it can be restored and execution resumed from the same point later. This allows multiple processes to share a single CPU, and is an essential feature of a multitasking operating system.
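The idea of saving a task's state and resuming it later can be imitated with Python generators. This is only an analogy under stated assumptions: a real operating system saves CPU registers and can preempt a process at any time, whereas the hypothetical `task` and `round_robin` helpers below switch cooperatively at each `yield`.

```python
from collections import deque

def task(name, steps):
    """A toy 'process': each yield is a point where it can be switched out."""
    for i in range(steps):
        yield f"{name} step {i}"

def round_robin(tasks):
    """Run tasks one step at a time on a single simulated CPU."""
    ready = deque(tasks)
    trace = []
    while ready:
        current = ready.popleft()
        try:
            trace.append(next(current))  # resume from the saved point
            ready.append(current)        # state lives in the generator; requeue it
        except StopIteration:
            pass                         # task finished; drop it from the queue
    return trace

trace = round_robin([task("A", 2), task("B", 3)])
print(trace)
```

The two tasks interleave on one simulated CPU, each continuing from exactly where it left off.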

Graphics Processing Unit is a specialized electronic circuit designed to rapidly manipulate and alter memory to accelerate the creation of images in a frame buffer intended for output to a display device. GPUs are used in embedded systems, mobile phones, personal computers, workstations, and game consoles. Modern GPUs are very efficient at manipulating computer graphics and image processing, and their highly parallel structure makes them more efficient than general-purpose CPUs for algorithms where the processing of large blocks of data is done in parallel. In a personal computer, a GPU can be present on a video card, or it can be embedded on the motherboard or, in certain CPUs, on the CPU die. At its launch in 2017, NVIDIA marketed the TITAN V as the most powerful graphics card ever created for the PC.

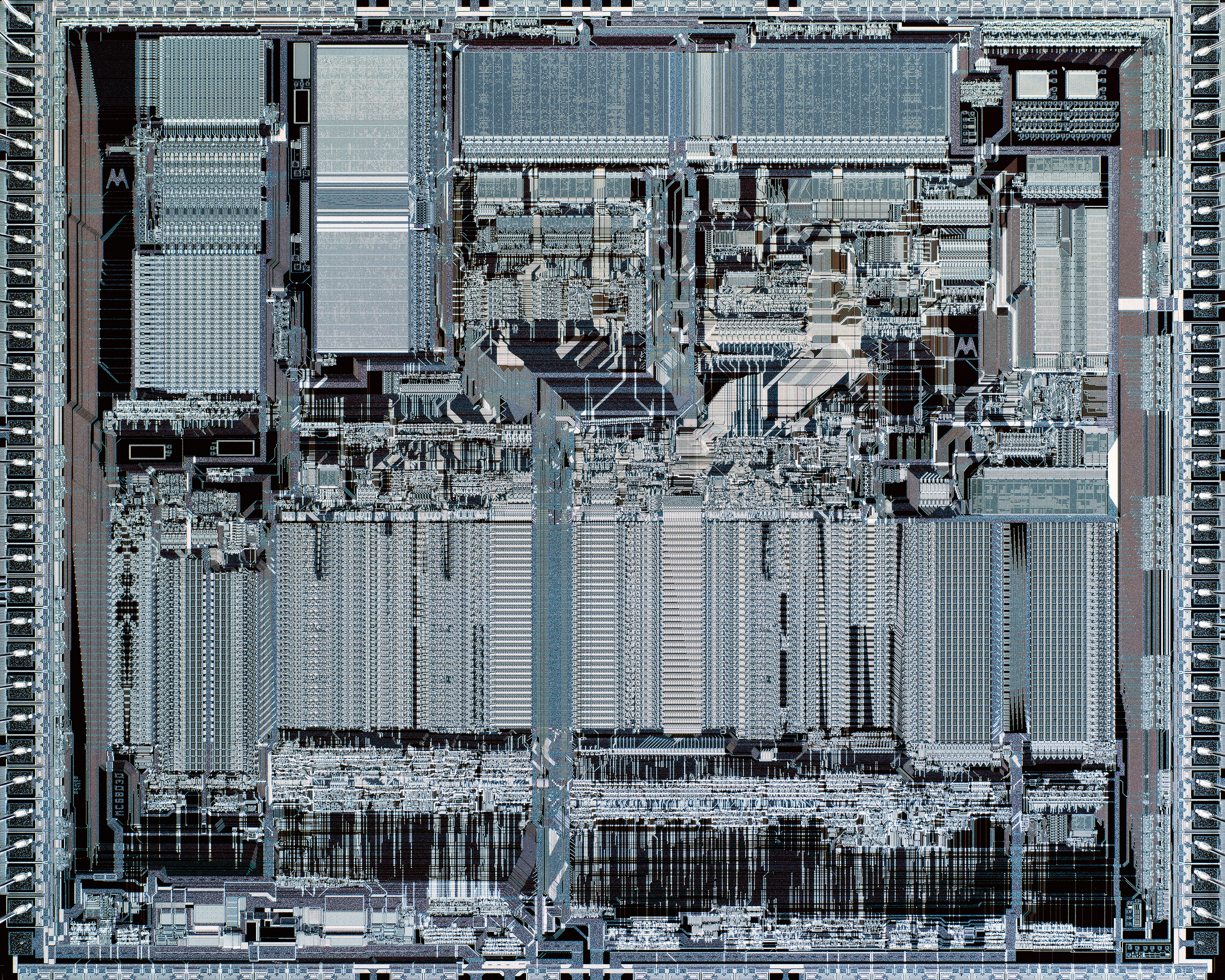

Processor Design is the design engineering task of creating a microprocessor, a component of computer hardware. It is a subfield of electronics engineering and computer engineering. The design process involves choosing an instruction set and a certain execution paradigm (e.g. VLIW or RISC) and results in a microarchitecture described in e.g. VHDL or Verilog. This description is then manufactured employing some of the various semiconductor device fabrication processes. This results in a die which is bonded onto a chip carrier. This chip carrier is then soldered onto, or inserted into a socket on, a printed circuit board (PCB). The mode of operation of any microprocessor is the execution of lists of instructions. Instructions typically include those to compute or manipulate data values using registers, change or retrieve values in read/write memory, perform relational tests between data values and to control program flow.
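The four kinds of instruction mentioned above (register arithmetic, memory access, relational tests and control flow) can be illustrated with a toy interpreter. The instruction names (`load`, `store`, `add`, `addi`, `jlt`, `halt`) and the three-register machine are invented for this sketch and do not correspond to any real instruction set.

```python
def run(program, memory):
    """Execute a list of (opcode, *operands) tuples on a toy register machine."""
    regs = {"r0": 0, "r1": 0, "r2": 0}
    pc = 0  # program counter: index of the next instruction
    while pc < len(program):
        op, *args = program[pc]
        pc += 1
        if op == "load":      # load reg, addr: read memory into a register
            regs[args[0]] = memory[args[1]]
        elif op == "store":   # store reg, addr: write a register to memory
            memory[args[1]] = regs[args[0]]
        elif op == "add":     # add dst, src: dst = dst + src
            regs[args[0]] += regs[args[1]]
        elif op == "addi":    # addi dst, imm: dst = dst + constant
            regs[args[0]] += args[1]
        elif op == "jlt":     # jlt a, b, target: jump if a < b
            if regs[args[0]] < regs[args[1]]:
                pc = args[2]
        elif op == "halt":
            break
        else:
            raise ValueError(f"unknown opcode {op!r}")
    return regs, memory

# Sum the integers 1..N, where N is read from memory address 0.
program = [
    ("load", "r2", 0),        # 0: r2 = limit
    ("addi", "r1", 1),        # 1: counter += 1
    ("add", "r0", "r1"),      # 2: total += counter
    ("jlt", "r1", "r2", 1),   # 3: loop while counter < limit
    ("store", "r0", 1),       # 4: write the total to address 1
    ("halt",),                # 5: stop
]
regs, mem = run(program, {0: 5, 1: 0})
print(mem[1])
```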

Multitasking - Batch Process - Process - Processing - Speed

Information Processor is a system (be it electrical, mechanical or biological) which takes information (a sequence of enumerated symbols or states) in one form and processes (transforms) it into another form, e.g. to statistics, by an algorithmic process. An information processing system is made up of four basic parts, or sub-systems: input, processor, storage, output.

Processor Affinity enables the binding and unbinding of a process or a thread to a central processing unit.

Clock Signal is a particular type of signal that oscillates between a high and a low state and is used like a metronome to coordinate actions of digital circuits. A clock signal is produced by a clock generator. Although more complex arrangements are used, the most common clock signal is in the form of a square wave with a 50% duty cycle, usually with a fixed, constant frequency. Circuits using the clock signal for synchronization may become active at either the rising edge, falling edge, or, in the case of double data rate, both in the rising and in the falling edges of the clock cycle.
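A square wave and its rising and falling edges can be modeled as a list of samples. This is a minimal sketch, assuming four samples per cycle; the helper names `square_wave` and `edges` are hypothetical.

```python
def square_wave(cycles: int, samples_per_cycle: int = 4, duty: float = 0.5):
    """Return a list of 0/1 samples for a square wave with the given duty cycle."""
    high = int(samples_per_cycle * duty)
    one_cycle = [1] * high + [0] * (samples_per_cycle - high)
    return one_cycle * cycles

def edges(signal):
    """Return (rising, falling) sample indices where the level changes."""
    rising = [i for i in range(1, len(signal))
              if signal[i - 1] == 0 and signal[i] == 1]
    falling = [i for i in range(1, len(signal))
               if signal[i - 1] == 1 and signal[i] == 0]
    return rising, falling

clk = square_wave(cycles=3)
rising, falling = edges(clk)
print(clk)
print("rising:", rising, "falling:", falling)
```

A circuit that acts on both lists of edges, as in double data rate, gets roughly twice as many trigger points per cycle as one that acts on rising edges alone.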

Clock Generator is a circuit that produces a timing signal (known as a clock signal and behaves as such) for use in synchronizing a circuit's operation. The signal can range from a simple symmetrical square wave to more complex arrangements. The basic parts that all clock generators share are a resonant circuit and an amplifier. The resonant circuit is usually a quartz piezo-electric oscillator, although simpler tank circuits and even RC circuits may be used. The amplifier circuit usually inverts the signal from the oscillator and feeds a portion back into the oscillator to maintain oscillation. The generator may have additional sections to modify the basic signal. The 8088 for example, used a 2/3 duty cycle clock, which required the clock generator to incorporate logic to convert the 50/50 duty cycle which is typical of raw oscillators. Other such optional sections include frequency divider or clock multiplier sections. Programmable clock generators allow the number used in the divider or multiplier to be changed, allowing any of a wide variety of output frequencies to be selected without modifying the hardware. The clock generator in a motherboard is often changed by computer enthusiasts to control the speed of their CPU, FSB, GPU and RAM. Typically the programmable clock generator is set by the BIOS at boot time to the selected value; although some systems have dynamic frequency scaling, which frequently re-programs the clock generator.
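The effect of a programmable multiplier or divider on the output frequency is simple arithmetic, sketched below. The function names and the 100 MHz base clock with a multiplier of 35 are assumptions picked for illustration, not values from any specific motherboard.

```python
def derived_frequency(base_hz: float, multiplier: int = 1, divider: int = 1) -> float:
    """Output frequency after applying a clock multiplier and divider."""
    return base_hz * multiplier / divider

def clock_period_ns(frequency_hz: float) -> float:
    """Duration of one clock cycle in nanoseconds."""
    return 1e9 / frequency_hz

base = 100e6                                    # assumed 100 MHz base clock
cpu = derived_frequency(base, multiplier=35)    # assumed multiplier
print(cpu / 1e9, "GHz")
print(clock_period_ns(4e9), "ns per cycle at 4 GHz")
```

Changing only the multiplier or divider value selects a different output frequency without touching the oscillator itself.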

Crystal Oscillator is an electronic oscillator circuit that uses the mechanical resonance of a vibrating crystal of piezoelectric material to create an electrical signal with a precise frequency.

Clock Speed typically refers to the frequency at which a chip like a central processing unit (CPU), or one core of a multi-core processor, is running and is used as an indicator of the processor's speed. It is measured in clock cycles per second or its equivalent, the SI unit hertz (Hz). The clock rate of the first generation of computers was measured in hertz or kilohertz (kHz), but in the 21st century the speed of modern CPUs is commonly advertised in gigahertz (GHz). This metric is most useful when comparing processors within the same family, holding constant other features that may impact performance. Video card and CPU manufacturers commonly select their highest performing units from a manufacturing batch and set their maximum clock rate higher, fetching a higher price.

Counter in digital electronics is a device which stores (and sometimes displays) the number of times a particular event or process has occurred, often in relationship to a clock signal. The most common type is a sequential digital logic circuit with an input line called the "clock" and multiple output lines. The values on the output lines represent a number in the binary or BCD number system. Each pulse applied to the clock input increments or decrements the number in the counter. A counter circuit is usually constructed of a number of flip-flops connected in cascade. Counters are a very widely used component in digital circuits, and are manufactured as separate integrated circuits and also incorporated as parts of larger integrated circuits. (7 Bit Counter).
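A counter built from cascaded flip-flops can be simulated in software. The `RippleCounter` class below is a simplified sketch, assuming an up-counter made of toggle flip-flops in which each stage toggles the next when it falls from 1 to 0; it ignores propagation delay.

```python
class RippleCounter:
    """Toy n-bit binary up-counter modeled as cascaded toggle flip-flops."""

    def __init__(self, bits: int):
        self.state = [0] * bits  # state[0] is the least significant bit

    def pulse(self) -> None:
        """Apply one clock pulse: toggle bit 0 and ripple each 1->0 carry onward."""
        for i in range(len(self.state)):
            self.state[i] ^= 1
            if self.state[i] == 1:  # toggled 0->1: no carry, stop rippling
                break

    @property
    def value(self) -> int:
        """The number currently shown on the output lines."""
        return sum(bit << i for i, bit in enumerate(self.state))

counter = RippleCounter(bits=3)
values = []
for _ in range(9):
    counter.pulse()
    values.append(counter.value)
print(values)
```

With three flip-flops the count wraps around after seven, since only eight distinct output patterns exist.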

555 timer IC is an integrated circuit (chip) used in a variety of timer, pulse generation, and oscillator applications. The 555 can be used to provide time delays, as an oscillator, and as a flip-flop element. Derivatives provide two or four timing circuits in one package.

Semiconductor Design standard cell methodology is a method of designing application-specific integrated circuits (ASICs) with mostly digital-logic features.

BIOS

Silicon Photonics is the study and application of photonic systems which use silicon as an optical medium.

Transistor is a semiconductor device used to amplify or switch electronic signals and electrical power. It is composed of semiconductor material usually with at least three terminals for connection to an external circuit. A voltage or current applied to one pair of the transistor's terminals controls the current through another pair of terminals. Because the controlled (output) power can be higher than the controlling (input) power, a transistor can amplify a signal. Today, some transistors are packaged individually, but many more are found embedded in integrated circuits. CPU - Binary Code - Memistors.

Transistors, How do they work? (youtube)

Making your own 4 Bit Computer from Transistors (youtube)

See How Computers Add Numbers In One Lesson (youtube)

Carbon Nanotube Field-effect Transistor refers to a field-effect transistor that utilizes a single carbon nanotube or an array of carbon nanotubes as the channel material instead of bulk silicon in the traditional MOSFET structure. First demonstrated in 1998, there have been major developments in CNTFETs since.

Analog Chip is a set of miniature electronic analog circuits formed on a single piece of semiconductor material.

Analog Signal is any continuous signal for which the time varying feature (variable) of the signal is a representation of some other time varying quantity, i.e., analogous to another time varying signal. For example, in an analog audio signal, the instantaneous voltage of the signal varies continuously with the pressure of the sound waves. It differs from a digital signal, in which the continuous quantity is a representation of a sequence of discrete values which can only take on one of a finite number of values. The term analog signal usually refers to electrical signals; however, mechanical, pneumatic, hydraulic, human speech, and other systems may also convey or be considered analog signals. An analog signal uses some property of the medium to convey the signal's information. For example, an aneroid barometer uses rotary position as the signal to convey pressure information. In an electrical signal, the voltage, current, or frequency of the signal may be varied to represent the information.

Digital Signal is a signal that is constructed from a discrete set of waveforms of a physical quantity so as to represent a sequence of discrete values. A logic signal is a digital signal with only two possible values, and describes an arbitrary bit stream. Other types of digital signals can represent three-valued logic or higher valued logics. Conversion.
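The step from a continuous quantity to a sequence of discrete values can be sketched by sampling and quantizing a sine wave. The `quantize` helper, the four levels, and the eight sample points are assumptions made for this example.

```python
import math

def quantize(value: float, levels: int, lo: float = -1.0, hi: float = 1.0) -> int:
    """Map a continuous value in [lo, hi] to one of `levels` integer codes."""
    clamped = min(max(value, lo), hi)
    return round((clamped - lo) / (hi - lo) * (levels - 1))

# Sample one period of a sine wave (standing in for the analog quantity)
# at 8 evenly spaced points, offset by half a step.
samples = [math.sin(2 * math.pi * (n + 0.5) / 8) for n in range(8)]
codes = [quantize(s, levels=4) for s in samples]
print(codes)
```

With `levels=2` the same function produces a logic signal, where every sample is either 0 or 1.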

Spintronics and Nanophotonics combined in 2-D material is a way to convert the spin information into a predictable light signal at room temperature. The discovery brings the worlds of spintronics and nanophotonics closer together and might lead to the development of an energy-efficient way of processing data.

Math Works - Nimbula - Learning Tools - Digikey Electronic Components - Nand 2 Tetris

Interfaces - Brain - Robots - 3D Printing - Operating Systems - Code - Programing - Computer Courses - Online Dictionary of Computer - Technology Terms - CS Unplugged - Computer Science without using computers.

Computer Standards List (wiki) - IPv6 recent version of the Internet Protocol. IPv6 - Web 2.0 (wiki)

Trouble-Shoot PC's - Fixing PC's - PC Maintenance Tips

Variable (cs) - Technology Education - Engineering - Technology Addiction - Technical Competitions - Internet.

Digital Displays

Digital Signage is a subsegment of signage. Digital signs use technologies such as LCD, LED and Projection to display content such as digital images, video, streaming media, and information. They can be found in public spaces, transportation systems, museums, stadiums, retail stores, hotels, restaurants, and corporate buildings etc., to provide wayfinding, exhibitions, marketing and outdoor advertising. Digital Signage market is expected to grow from USD $15 billion to over USD $24 billion by 2020. Interface.

Display Device is an output device for presentation of information in visual or tactile form (the latter used for example in tactile electronic displays for blind people). When the input information that is supplied has an electrical signal, the display is called an electronic display. Common applications for electronic visual displays are televisions or computer monitors.

Colors - Eyes (sight) - Eye Strain - Frame Rate

LED Display is a flat panel display, which uses an array of light-emitting diodes as pixels for a video display. Their brightness allows them to be used outdoors in store signs and billboards, and in recent years they have also become commonly used in destination signs on public transport vehicles. LED displays are capable of providing general illumination in addition to visual display, as when used for stage lighting or other decorative (as opposed to informational) purposes.

Organic Light-Emitting Diode (OLED) is a Light-Emitting Diode (LED) in which the emissive electroluminescent layer is a film of organic compound that emits light in response to an electric current. This layer of organic semiconductor is situated between two electrodes; typically, at least one of these electrodes is transparent. OLEDs are used to create digital displays in devices such as television screens, computer monitors, portable systems such as mobile phones, handheld game consoles and PDAs. A major area of research is the development of white OLED devices for use in solid-state lighting applications.

AMOLED is a display technology used in smartwatches, mobile devices, laptops, and televisions. OLED describes a specific type of thin-film-display technology in which organic compounds form the electroluminescent material, and active matrix refers to the technology behind the addressing of pixels.

High-Dynamic-Range Imaging is a high dynamic range (HDR) technique used in imaging and photography to reproduce a greater dynamic range of luminosity than is possible with standard digital imaging or photographic techniques. The aim is to present a similar range of luminance to that experienced through the human visual system. The human eye, through adaptation of the iris and other methods, adjusts constantly to adapt to a broad range of luminance present in the environment. The brain continuously interprets this information so that a viewer can see in a wide range of light conditions.

Graphics Display Resolution is the width and height dimensions of an electronic visual display device, such as a computer monitor, in pixels. Certain combinations of width and height are standardized and typically given a name and an initialism that is descriptive of its dimensions. A higher display resolution in a display of the same size means that displayed content appears sharper. Display Resolution is the number of distinct pixels in each dimension that can be displayed. Ratio.

4K Resolution refers to a horizontal resolution on the order of 4,000 pixels and vertical resolution on the order of 2,000 pixels.

Smartphones - Tech Addiction

Computer Monitor is an electronic visual display for computers. A monitor usually comprises the display device, circuitry, casing, and power supply. The display device in modern monitors is typically a thin film transistor liquid crystal display (TFT-LCD) or a flat panel LED display, while older monitors used a cathode ray tube (CRT). It can be connected to the computer via VGA, DVI, HDMI, DisplayPort, Thunderbolt, LVDS (Low-voltage differential signaling) or other proprietary connectors and signals.

Durable Monitor Screens (computers)

Liquid-Crystal Display is a flat-panel display or other electronically modulated optical device that uses the light-modulating properties of liquid crystals. Liquid crystals do not emit light directly, instead using a backlight or reflector to produce images in color or monochrome. LCDs are available to display arbitrary images (as in a general-purpose computer display) or fixed images with low information content, which can be displayed or hidden, such as preset words, digits, and 7-segment displays, as in a digital clock. They use the same basic technology, except that arbitrary images are made up of a large number of small pixels, while other displays have larger elements. LCDs are used in a wide range of applications including computer monitors, televisions, instrument panels, aircraft cockpit displays, and indoor and outdoor signage. Small LCD screens are common in portable consumer devices such as digital cameras, watches, calculators, and mobile telephones, including smartphones. LCD screens are also used on consumer electronics products such as DVD players, video game devices and clocks. LCD screens have replaced heavy, bulky cathode ray tube (CRT) displays in nearly all applications. LCD screens are available in a wider range of screen sizes than CRT and plasma displays, with LCD screens available in sizes ranging from tiny digital watches to huge, big-screen television sets. Since LCD screens do not use phosphors, they do not suffer image burn-in when a static image is displayed on a screen for a long time (e.g., the table frame for an aircraft schedule on an indoor sign). LCDs are, however, susceptible to image persistence. The LCD screen is more energy-efficient and can be disposed of more safely than a CRT can. Its low electrical power consumption enables it to be used in battery-powered electronic equipment more efficiently than CRTs can be. 
By 2008, annual sales of televisions with LCD screens exceeded sales of CRT units worldwide, and the CRT became obsolete for most purposes.

Pixel is a physical point in a raster image, or the smallest addressable element in an all points addressable display device; so it is the smallest controllable element of a picture represented on the screen. Each pixel is a sample of an original image; more samples typically provide more accurate representations of the original. The intensity of each pixel is variable. In color imaging systems, a color is typically represented by three or four component intensities such as red, green, and blue, or cyan, magenta, yellow, and black. A pixel is generally thought of as the smallest single component of a digital image. However, the definition is highly context-sensitive. For example, there can be "printed pixels" in a page, or pixels carried by electronic signals, or represented by digital values, or pixels on a display device, or pixels in a digital camera (photosensor elements). This list is not exhaustive and, depending on context, synonyms include pel, sample, byte, bit, dot, and spot. Pixels can be used as a unit of measure such as: 2400 pixels per inch, 640 pixels per line, or spaced 10 pixels apart. The measures dots per inch (dpi) and pixels per inch (ppi) are sometimes used interchangeably, but have distinct meanings, especially for printer devices, where dpi is a measure of the printer's density of dot (e.g. ink droplet) placement. For example, a high-quality photographic image may be printed with 600 ppi on a 1200 dpi inkjet printer. Even higher dpi numbers, such as the 4800 dpi quoted by printer manufacturers since 2002, do not mean much in terms of achievable resolution. The more pixels used to represent an image, the closer the result can resemble the original. The number of pixels in an image is sometimes called the resolution, though resolution has a more specific definition. 
Pixel counts can be expressed as a single number, as in a "three-megapixel" digital camera, which has a nominal three million pixels, or as a pair of numbers, as in a "640 by 480 display", which has 640 pixels from side to side and 480 from top to bottom (as in a VGA display), and therefore has a total number of 640×480 = 307,200 pixels or 0.3 megapixels. The pixels, or color samples, that form a digitized image (such as a JPEG file used on a web page) may or may not be in one-to-one correspondence with screen pixels, depending on how a computer displays an image. In computing, an image composed of pixels is known as a bitmapped image or a raster image. The word raster originates from television scanning patterns, and has been widely used to describe similar halftone printing and storage techniques. Anti-Aliasing is the smoothing of the jagged appearance of diagonal lines in a bitmapped image. The pixels that surround the edges of the line are changed to varying shades of gray or color in order to blend the sharp edge into the background. Matrix.
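
The pixel arithmetic above can be checked with a few lines of Python. This is only a minimal sketch: the function names are made up for illustration, and the print-size helper assumes that every image pixel is printed as exactly one pixel at the given ppi.

```python
def pixel_count(width: int, height: int) -> int:
    """Total number of pixels in a width-by-height raster image."""
    return width * height


def megapixels(width: int, height: int) -> float:
    """Pixel count expressed in millions of pixels."""
    return pixel_count(width, height) / 1_000_000


def print_size_inches(width_px: int, height_px: int, ppi: int) -> tuple[float, float]:
    """Printed width and height in inches, assuming one image pixel per printed pixel."""
    return width_px / ppi, height_px / ppi


# A VGA display: 640 pixels across, 480 pixels from top to bottom.
print(pixel_count(640, 480))           # 307200
print(round(megapixels(640, 480), 1))  # 0.3

# A hypothetical 2400 x 1800 pixel photo printed at 600 ppi.
print(print_size_inches(2400, 1800, 600))  # (4.0, 3.0)
```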

Touchscreen is an input and output device normally layered on the top of an electronic visual display of an information processing system. A user can give input or control the information processing system through simple or multi-touch gestures by touching the screen with a special stylus and/or one or more fingers. Some touchscreens use ordinary or specially coated gloves to work while others may only work using a special stylus/pen. The user can use the touchscreen to react to what is displayed and to control how it is displayed; for example, zooming to increase the text size. The touchscreen enables the user to interact directly with what is displayed, rather than using a mouse, touchpad, or any other such device (other than a stylus, which is optional for most modern touchscreens). Touchscreens are common in devices such as game consoles, personal computers, tablet computers, electronic voting machines, point of sale systems, and smartphones. They can also be attached to computers or, as terminals, to networks. They also play a prominent role in the design of digital appliances such as personal digital assistants (PDAs) and some e-readers. Touchscreen features include recognition of multi-touch gestures such as swipe, pinch, flick, tap, double tap and drag, and acceptance of touch input from gloved fingers, fingernails, pens, keys, credit cards, styluses, erasers, etc. On some touchscreens, touch events can be recorded even if the user's finger does not touch the screen.

Making plastic more transparent while also adding electrical conductivity. In an effort to improve large touchscreens, LED light panels and window-mounted infrared solar cells, researchers have made plastic conductive while also making it more transparent.

Interfaces

Stylus in computing is a small pen-shaped instrument that is used to input commands to a computer screen, mobile device or graphics tablet. With touchscreen devices, a user places a stylus on the surface of the screen to draw or make selections by tapping the stylus on the screen. In this manner, the stylus can be used instead of a mouse or trackpad as a pointing device, a technique commonly called pen computing. Pen-like input devices which are larger than a stylus, and offer increased functionality such as programmable buttons, pressure sensitivity and electronic erasers, are often known as digital pens.

7-Segment Display - 9-Segment Display - 14-Segment Display

LCD that is paper-thin, flexible, light, tough and cheap, perhaps costing only $5 for a 5-inch screen. It is a flexible, paper-like display that could be updated as fast as the news cycle. Less than half a millimeter thick, the new flexi-LCD design could revolutionize printed media. The design is a front polarizer-free optically rewritable (ORW) liquid crystal display (LCD).

Software - Digital Information

Software is that part of a computer system that consists of encoded information or computer instructions, in contrast to the physical hardware from which the system is built. Software consists of written programs that are stored in read/write memory, along with the procedures or rules and associated documentation pertaining to the operation of a computer system. Software is a specialized tool for performing advanced calculations that allows the user to be more productive and to work quickly and efficiently. Software is a time saver.

Operating System - Code - Analog - Word Processing - Apps - AI - Algorithms

Software Engineering is the application of engineering to the development of software in a systematic method. Typical formal definitions of Software Engineering are: research, design, development, and testing of operating systems-level software, compilers, and network distribution software for medical, industrial, military, communications, aerospace, business, scientific, and general computing applications; the systematic application of scientific and technological knowledge, methods, and experience to the design, implementation, testing, and documentation of software; the application of a systematic, disciplined, quantifiable approach to the development, operation, and maintenance of software; an engineering discipline that is concerned with all aspects of software production; and the establishment and use of sound engineering principles in order to economically obtain software that is reliable and works efficiently on real machines.

Software Architecture refers to the high level structures of a software system, the discipline of creating such structures, and the documentation of these structures. These structures are needed to reason about the software system. Each structure comprises software elements, relations among them, and properties of both elements and relations. The architecture of a software system is a metaphor, analogous to the architecture of a building.

Software Framework is an abstraction in which software providing generic functionality can be selectively changed by additional user-written code, thus providing application-specific software. A software framework provides a standard way to build and deploy applications. A software framework is a universal, reusable software environment that provides particular functionality as part of a larger software platform to facilitate development of software applications, products and solutions. Software frameworks may include support programs, compilers, code libraries, tool sets, and Application Programming Interfaces (APIs) that bring together all the different components to enable development of a project or system. Frameworks have key distinguishing features that separate them from normal libraries. Inversion of control: In a framework, unlike in libraries or in standard user applications, the overall program's flow of control is not dictated by the caller, but by the framework. Extensibility: A user can extend the framework, usually by selective overriding; or programmers can add specialized user code to provide specific functionality. Non-modifiable framework code: The framework code, in general, is not supposed to be modified, while accepting user-implemented extensions. In other words, users can extend the framework, but should not modify its code.
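
Inversion of control and extension by overriding can be illustrated with a toy example. The sketch below is not taken from any real framework; the class and method names (`Framework`, `GreetingApp`, `run`, `handle`) are hypothetical and chosen only for illustration.

```python
class Framework:
    """Toy framework: it owns the control flow and calls user code at fixed points."""

    def run(self, request: str) -> str:
        # The framework, not the application, decides the order of these steps.
        cleaned = self.preprocess(request)
        result = self.handle(cleaned)
        return self.postprocess(result)

    def preprocess(self, request: str) -> str:
        return request.strip()

    def handle(self, request: str) -> str:
        # Extension point: applications are expected to override this method.
        raise NotImplementedError("applications override handle()")

    def postprocess(self, result: str) -> str:
        return f"[done] {result}"


class GreetingApp(Framework):
    """User code extends the framework without modifying the framework itself."""

    def handle(self, request: str) -> str:
        return f"Hello, {request}!"


print(GreetingApp().run("  world  "))  # [done] Hello, world!
```

The application never calls `handle` directly. It only supplies it, and the framework's `run` method decides when it executes.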

Abstraction is a technique for hiding complexity of computer systems. It works by establishing a level of simplicity on which a person interacts with the system, suppressing the more complex details below the current level. The programmer works with an idealized interface (usually well defined) and can add additional levels of functionality that would otherwise be too complex to handle.
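
As a rough illustration of an idealized interface, the sketch below hides where data is kept behind two operations. All names here (`Storage`, `InMemoryStorage`, `remember_name`) are hypothetical, and the in-memory dictionary stands in for whatever more complex mechanism a real system would use.

```python
from abc import ABC, abstractmethod


class Storage(ABC):
    """Idealized interface: callers see only save() and load()."""

    @abstractmethod
    def save(self, key: str, value: str) -> None: ...

    @abstractmethod
    def load(self, key: str) -> str: ...


class InMemoryStorage(Storage):
    """One possible implementation; the details stay below the interface."""

    def __init__(self) -> None:
        self._items: dict[str, str] = {}

    def save(self, key: str, value: str) -> None:
        self._items[key] = value

    def load(self, key: str) -> str:
        return self._items[key]


def remember_name(storage: Storage, name: str) -> str:
    # This code works at the level of the interface and does not know
    # (or care) how the storage is implemented underneath.
    storage.save("name", name)
    return storage.load("name")


print(remember_name(InMemoryStorage(), "Ada"))  # Ada
```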

Software Development is the process of computer programming, documenting, testing, and bug fixing involved in creating and maintaining applications and frameworks resulting in a software product. Software development is a process of writing and maintaining the source code, but in a broader sense, it includes all that is involved between the conception of the desired software through to the final manifestation of the software, sometimes in a planned and structured process. Therefore, software development may include research, new development, prototyping, modification, reuse, re-engineering, maintenance, or any other activities that result in software products.

Don't Repeat Yourself is a principle of software development aimed at reducing repetition of software patterns, replacing it with abstractions or using data normalization to avoid redundancy.
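
A small sketch of the principle, using a made-up example: the tax rule is written once in `with_tax_cents` and reused, instead of being copied into every function that needs it. The 8 percent rate and all names are assumptions for illustration only. Amounts are kept in integer cents to avoid floating-point rounding.

```python
TAX_PERCENT = 8  # hypothetical tax rate, stated in one place only


def with_tax_cents(price_cents: int) -> int:
    """Single source of truth for how tax is applied (rounds down to whole cents)."""
    return price_cents * (100 + TAX_PERCENT) // 100


def invoice_total_cents(prices_cents: list[int]) -> int:
    # Reuses the shared rule instead of repeating the tax formula here.
    return sum(with_tax_cents(p) for p in prices_cents)


def receipt_line(name: str, price_cents: int) -> str:
    # Reuses the same rule, so a change to the tax logic is made only once.
    total = with_tax_cents(price_cents)
    return f"{name}: {total // 100}.{total % 100:02d}"


print(invoice_total_cents([1000, 2000]))  # 3240
print(receipt_line("pen", 1000))          # pen: 10.80
```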

Worse is Better is the idea that software that is limited, but simple to use, may be more appealing to the user and the market than the reverse. Quality does not necessarily increase with functionality: there is a point where less functionality ("worse") is a preferable option ("better") in terms of practicality and usability.

Unix Philosophy brings the concepts of modularity and reusability into software engineering practice.

Reusability - Smart Innovation - Compatibility - Simplicity

Software Versioning is the practice of assigning version numbers to releases of software. Some schemes use a zero in the first sequence to designate alpha or beta status for releases that are not stable enough for general or practical deployment and are intended for testing or internal use only. A status code can also be used in the third position: 0 for alpha (status) - 1 for beta (status) - 2 for release candidate - 3 for (final) release. Version 1.0 is used as a major milestone, indicating that the software is "complete", that it has all major features, and is considered reliable enough for general release. A good example of this is the Linux kernel, which was first released as version 0.01 in 1991, and took until 1994 to reach version 1.0.0. Me 2.0
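
The two conventions described above can be sketched in code. This is an illustrative example only: the function names are hypothetical, the version string "1.2.0.1" is made up, and the third-position status code is just one scheme among many (it is not, for instance, how Linux kernel version numbers are read).

```python
# Status codes for schemes that encode release status in the third position.
STATUS_BY_CODE = {0: "alpha", 1: "beta", 2: "release candidate", 3: "release"}


def parse_version(text: str) -> tuple[int, ...]:
    """Split a dotted version string into a tuple of integers."""
    return tuple(int(part) for part in text.split("."))


def is_pre_1_0(text: str) -> bool:
    """True when the first sequence is zero, i.e. before the 1.0 milestone."""
    return parse_version(text)[0] == 0


def status_from_third_position(text: str) -> str:
    """Read the release status from the third position, if the scheme uses it."""
    parts = parse_version(text)
    if len(parts) < 3:
        return "unknown"
    return STATUS_BY_CODE.get(parts[2], "unknown")


print(is_pre_1_0("0.01"))                             # True
print(parse_version("0.01") < parse_version("1.0.0"))  # True
print(status_from_third_position("1.2.0.1"))           # alpha
print(status_from_third_position("1.2.3.0"))           # release
```

Comparing the parsed tuples orders versions sequence by sequence, which is why 0.01 sorts before 1.0.0.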

Software Developer is a person concerned with facets of the software development process, including the research, design, programming, and testing of computer software. Other job titles which are often used with similar meanings are programmer, software analyst, and software engineer. According to developer Eric Sink, the differences between system design, software development, and programming are more apparent. Already in the current market place there can be found a segregation between programmers and developers, being that one who implements is not the same as the one who designs the class structure or hierarchy. Even more so that developers become systems architects, those who design the multi-leveled architecture or component interactions of a large software system. (see also Debate over who is a software engineer).

Software Development Process is splitting of software development work into distinct phases (or stages) containing activities with the intent of better planning and management. It is often considered a subset of the systems development life cycle. The methodology may include the pre-definition of specific deliverables and artifacts that are created and completed by a project team to develop or maintain an application. Common methodologies include waterfall, prototyping, iterative and incremental development, spiral development, rapid application development, extreme programming and various types of agile methodology. Some people consider a life-cycle "model" a more general term for a category of methodologies and a software development "process" a more specific term to refer to a specific process chosen by a specific organization. For example, there are many specific software development processes that fit the spiral life-cycle model. Project Management.

Scrum in Software Development is a framework for managing software development. It is designed for teams of three to nine developers who break their work into actions that can be completed within fixed duration cycles (called "sprints"), track progress and re-plan in daily 15-minute stand-up meetings, and collaborate to deliver workable software every sprint. Approaches to coordinating the work of multiple scrum teams in larger organizations include Large-Scale Scrum, Scaled Agile Framework (SAFe) and Scrum of Scrums, among others. Scrum is an iterative and incremental agile software development framework for managing product development. It defines "a flexible, holistic product development strategy where a development team works as a unit to reach a common goal", challenges assumptions of the "traditional, sequential approach" to product development, and enables teams to self-organize by encouraging physical co-location or close online collaboration of all team members, as well as daily face-to-face communication among all team members and disciplines involved. A key principle of Scrum is its recognition that during product development, the customers can change their minds about what they want and need (often called requirements volatility), and that unpredicted challenges cannot be easily addressed in a traditional predictive or planned manner. As such, Scrum adopts an evidence-based empirical approach: it accepts that the problem cannot be fully understood or defined, and focuses instead on maximizing the team's ability to deliver quickly, to respond to emerging requirements and to adapt to evolving technologies and changes in market conditions.