BK101

Knowledge Base

Coding - Learning Computer Programming Language Code

What Does Learning to Code Mean?

Coding is computer programming. It means learning a particular programming language or assembly language made up of letters, symbols and numbers that you type in using a keyboard. You are basically converting language into code that will be used to communicate with machines, so that the machines perform particular functions that you need to have done, actions that will ultimately help you accomplish a particular goal. Learning code will make you bilingual.

Coding in social sciences is an analytical process in which data, in either quantitative form (such as questionnaire results) or qualitative form (such as interview transcripts), is categorized to facilitate analysis. Coding means the transformation of data into a form understandable by computer software. Reusability - Accessibility - Usability.

Computer Knowledge - Command Line Interface

Computer Program is a detailed step-by-step set of instructions that can be interpreted and carried out by a computer. A computer is a machine that can quickly and accurately follow, carry out, and execute the detailed step-by-step set of instructions in a computer program. Computer programmers design, write, and test computer programs, so they are deeply involved in doing computational thinking. It's like Morse code has come full circle, from dot dot dash, to on or off, zeros and ones.

Levels of Computer Programming Languages

Machine Code is the lowest possible level at which you can program a computer. It consists of strings of 1s and 0s, stored as binary numbers. The main problem with using machine code directly is that it's very easy to make a mistake, and very hard to find it once you realize the mistake has been made.

Machine Code is a set of instructions executed directly by a computer's central processing unit (CPU). Each instruction performs a very specific task, such as a load, a jump, or an ALU operation on a unit of data in a CPU register or memory. Every program directly executed by a CPU is made up of a series of such instructions.

DNA - Code.

I/O is short for input/output (pronounced "eye-oh"). It describes any program, operation or device that transfers data to or from a computer and to or from a peripheral device. Every transfer is an output from one device and an input into another. Transistors - PLC.

BIOS is an acronym for Basic Input/Output System (also known as the System BIOS, ROM BIOS or PC BIOS). It is non-volatile firmware used to perform hardware initialization during the booting process (power-on startup), and to provide runtime services for operating systems and programs. The BIOS firmware comes pre-installed on a personal computer's system board, and it is the first software to run when powered on. The name originates from the Basic Input/Output System used in the CP/M operating system in 1975. Originally proprietary to the IBM PC, the BIOS has been reverse engineered by companies looking to create compatible systems. The interface of that original system serves as a de facto standard.

The BIOS in modern PCs initializes and tests the system hardware components, and loads a boot loader from a mass memory device which then initializes an operating system. In the era of MS-DOS, the BIOS provided a hardware abstraction layer for the keyboard, display, and other input/output (I/O) devices that standardized an interface to application programs and the operating system. More recent operating systems do not use the BIOS after loading, instead accessing the hardware components directly. Most BIOS implementations are specifically designed to work with a particular computer or motherboard model, by interfacing with various devices that make up the complementary system chipset.

Originally, BIOS firmware was stored in a ROM chip on the PC motherboard. In modern computer systems, the BIOS contents are stored on flash memory so it can be rewritten without removing the chip from the motherboard. This allows easy, end-user updates to the BIOS firmware so new features can be added or bugs can be fixed, but it also creates a possibility for the computer to become infected with BIOS rootkits. Furthermore, a BIOS upgrade that fails can brick the motherboard permanently, unless the system includes some form of backup for this case.
Unified Extensible Firmware Interface (UEFI) is a successor to BIOS, aiming to address its technical shortcomings. BIOS is a set of computer instructions in firmware which control input and output operations.

Assembly Language is nothing more than a symbolic representation of machine code, which also allows symbolic designation of memory locations. Thus, an instruction to add the contents of a memory location to an internal CPU register called the accumulator might be written as a short mnemonic such as ADD followed by the name of the location, instead of a string of binary digits (bits). No matter how close assembly language is to machine code, the computer still cannot understand it. The assembly-language program must be translated into machine code by a separate program called an assembler. The assembler program recognizes the character strings that make up the symbolic names of the various machine operations, and substitutes the required machine code for each instruction. At the same time, it also calculates the required address in memory for each symbolic name of a memory location, and substitutes those addresses for the names. The final result is a machine-language program that can run on its own at any time; the assembler and the assembly-language program are no longer needed.

To help distinguish between the "before" and "after" versions of the program, the original assembly-language program is also known as the source code, while the final machine-language program is designated the object code. If an assembly-language program needs to be changed or corrected, it is necessary to make the changes to the source code and then re-assemble it to create a new object program. Assembly Language is a low-level programming language for a computer, or other programmable device, in which there is a very strong (generally one-to-one) correspondence between the language and the architecture's machine code instructions. Each assembly language is specific to a particular computer architecture.
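The two jobs of an assembler described above (substituting machine code for mnemonics, and substituting addresses for symbolic names) can be sketched in a few lines of Python. This is only an illustration for a made-up 8-bit machine: the opcode values, the DATA directive, and the instruction layout (high 4 bits for the operation, low 4 bits for the address) are all assumptions, not any real architecture.

```python
# Toy two-pass assembler for a hypothetical 8-bit machine.
# The opcode numbers below are invented for illustration only.
OPCODES = {"LOAD": 0x1, "ADD": 0x2, "STORE": 0x3, "HALT": 0xF}


def assemble(source):
    """Translate assembly text into a list of 8-bit machine words."""
    symbols = {}   # symbolic name -> memory address
    parsed = []    # one entry per machine word, still in text form

    # Pass 1: strip comments and record the address of every label.
    for raw in source.splitlines():
        line = raw.split(";")[0].strip()
        if not line:
            continue
        if ":" in line:
            label, line = line.split(":", 1)
            symbols[label.strip()] = len(parsed)
        parts = line.split()
        if parts:
            parsed.append(parts)

    # Pass 2: replace mnemonics with opcodes and names with addresses.
    words = []
    for parts in parsed:
        mnemonic = parts[0].upper()
        operand = parts[1] if len(parts) > 1 else "0"
        value = symbols[operand] if operand in symbols else int(operand)
        if mnemonic == "DATA":          # pseudo-instruction: a raw value
            words.append(value & 0xFF)
        else:
            words.append((OPCODES[mnemonic] << 4) | (value & 0xF))
    return words


PROGRAM = """
        LOAD  x        ; accumulator = x
        ADD   y        ; accumulator += y
        STORE total
        HALT
x:      DATA 5
y:      DATA 7
total:  DATA 0
"""

machine_code = assemble(PROGRAM)
print([f"{word:08b}" for word in machine_code])
```

The source text is the "source code" and the list of numbers is the "object code"; changing the program means editing the text and running assemble again.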

High-Level Language is a programming language such as C, FORTRAN, or Pascal that enables a programmer to write programs that are more or less independent of a particular type of computer. Such languages are considered high-level because they are closer to human languages and further from machine languages.

Programming Languages

Source Code is any collection of computer instructions (possibly with comments) written using some human-readable computer language, usually as text. The source code of a program is specially designed to facilitate the work of computer programmers, who specify the actions to be performed by a computer mostly by writing source code. The source code is often transformed by a compiler program into low-level machine code understood by the computer. Source Lines of Code (wiki).

Opcode is the portion of a machine language instruction that specifies the operation to be performed. Beside the opcode itself, most instructions also specify the data they will process, in the form of operands. In addition to opcodes used in the instruction set architectures of various CPUs, which are hardware devices, they can also be used in abstract computing machines as part of their byte code specifications.
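As a rough illustration of how an instruction splits into an opcode and an operand, the sketch below decodes a word in a hypothetical 8-bit format. The layout (high 4 bits are the opcode, low 4 bits are the operand) and the mnemonic table are assumptions chosen for the example, not a real instruction set.

```python
# Hypothetical instruction format: high 4 bits = opcode, low 4 bits = operand.
MNEMONICS = {0x1: "LOAD", 0x2: "ADD", 0x3: "STORE", 0xF: "HALT"}


def decode(word):
    """Split an 8-bit instruction word into (mnemonic, operand)."""
    opcode = (word >> 4) & 0xF   # which operation to perform
    operand = word & 0xF         # the data (here, an address) it works on
    return MNEMONICS.get(opcode, "???"), operand


print(decode(0b00100101))  # ('ADD', 5)
```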

Visual Programming Language is any programming language that lets users create programs by manipulating program elements graphically rather than by specifying them textually. A VPL allows programming with visual expressions, spatial arrangements of text and graphic symbols, used either as elements of syntax or secondary notation. For example, many VPLs (known as dataflow or diagrammatic programming) are based on the idea of "boxes and arrows", where boxes or other screen objects are treated as entities, connected by arrows, lines or arcs which represent relations. Visual Knowledge.

Compiled Languages are the high-level equivalent of assembly language. Each instruction in a compiled language can correspond to many machine instructions. Once the program has been written, it is translated to the equivalent machine code by a program called a compiler. Once the program has been compiled, the resulting machine code is saved separately, and can be run on its own at any time. As with assembly-language programs, updating or correcting a compiled program requires that the original (source) program be modified appropriately and then recompiled to form a new machine-language (object) program. Typically, the compiled machine code is less efficient than the code produced when using assembly language. This means that it runs a bit more slowly and uses a bit more memory than the equivalent assembled program. To offset this drawback, however, it takes much less time to develop a compiled-language program, so it can be ready to go sooner than the assembly-language program. A compiled language is one whose implementations are typically compilers (translators that generate machine code from source code), and not interpreters (step-by-step executors of source code, where no pre-runtime translation takes place). Operating Systems.

Interpreter Language, like a compiler language, is considered to be high level. However, it operates in a totally different manner from a compiler language: the interpreter program resides in memory, and directly executes the high-level program without preliminary translation to machine code. This use of an interpreter program to directly execute the user's program has both advantages and disadvantages. The primary advantage is that you can run the program to test its operation, make a few changes, and run it again directly. There is no need to recompile because no new machine code is ever produced. This can enormously speed up the development and testing process. On the down side, this arrangement requires that both the interpreter and the user's program reside in memory at the same time. In addition, because the interpreter has to scan the user's program one line at a time and execute internal portions of itself in response, execution of an interpreted program is much slower than for a compiled program.

An interpreter is a computer program that directly executes, i.e. performs, instructions written in a programming or scripting language, without previously compiling them into a machine language program. An interpreter generally uses one of the following strategies for program execution: (1) parse the source code and perform its behavior directly; (2) translate source code into some efficient intermediate representation and immediately execute this; (3) explicitly execute stored precompiled code made by a compiler which is part of the interpreter system. An interpreted language is a programming language in which programs are directly executed by an interpreter.
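The first strategy, scanning the program one line at a time and performing each instruction directly, can be sketched with a tiny interpreter. The three-command language used here (SET, ADD, PRINT) is invented purely for illustration.

```python
def interpret(program):
    """Execute a toy language line by line; no machine code is produced."""
    variables = {}
    output = []
    for line_no, line in enumerate(program.splitlines(), start=1):
        parts = line.split()
        if not parts:
            continue  # skip blank lines
        command, args = parts[0].upper(), parts[1:]
        if command == "SET":        # SET name value
            variables[args[0]] = int(args[1])
        elif command == "ADD":      # ADD name value
            variables[args[0]] += int(args[1])
        elif command == "PRINT":    # PRINT name
            output.append(variables[args[0]])
        else:
            raise SyntaxError(f"line {line_no}: unknown command {command!r}")
    return output


PROGRAM = """
SET x 5
ADD x 3
PRINT x
"""

print(interpret(PROGRAM))  # [8]
```

Editing the PROGRAM text and calling interpret again is the whole edit-and-rerun cycle; there is no separate compile step, but every line is re-parsed on every run.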

There is only one programming language that any computer can actually understand and execute: its own native binary machine code. This is the lowest possible level of language in which it is possible to write a computer program. All other languages are said to be high level or low level according to how closely they can be said to resemble machine code. In this context, a low-level language corresponds closely to machine code, so that a single low-level language instruction translates to a single machine-language instruction. A high-level language instruction typically translates into a series of machine-language instructions. Low-Level Programming Languages have the advantage that they can be written to take advantage of any peculiarities in the architecture of the central processing unit (CPU) which is the "brain" of any computer. Thus, a program written in a low-level language can be extremely efficient, making optimum use of both computer memory and processing time. However, to write a low-level program takes a substantial amount of time, as well as a clear understanding of the inner workings of the processor itself. Therefore, low-level programming is typically used only for very small programs, or for segments of code that are highly critical and must run as efficiently as possible. High-level languages permit faster development of large programs. The final program as executed by the computer is not as efficient, but the savings in programmer time generally far outweigh the inefficiencies of the finished product. This is because the cost of writing a program is nearly constant for each line of code, regardless of the language. Thus, a high-level language where each line of code translates to 10 machine instructions costs only one tenth as much in program development as a low-level language where each line of code represents only a single machine instruction.
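The cost argument above is simple arithmetic, sketched below. The program size and the cost per line are made-up figures; only the ratio matters, and it rests on the text's assumption that every line of code costs about the same to write regardless of language.

```python
def development_cost(machine_instructions, instructions_per_line, cost_per_line):
    """Cost of writing a program, assuming a constant cost per source line."""
    lines_of_code = machine_instructions / instructions_per_line
    return lines_of_code * cost_per_line


# Hypothetical figures: a 10,000-instruction program at 20 currency units per line.
low_level_cost = development_cost(10_000, instructions_per_line=1, cost_per_line=20.0)
high_level_cost = development_cost(10_000, instructions_per_line=10, cost_per_line=20.0)

print(low_level_cost, high_level_cost)
print(high_level_cost / low_level_cost)  # 0.1
```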

Scripting Language is a programming language that supports scripts: programs written for a special run-time environment that automate the execution of tasks that could alternatively be executed one-by-one by a human operator. Scripting languages are often interpreted (rather than compiled).

Conditional Probability is a measure of the probability of an event given that (by assumption, presumption, assertion or evidence) another event has occurred. If the event of interest is A and the event B is known or assumed to have occurred, "the conditional probability of A given B", or "the probability of A under the condition B", is usually written as P(A|B), or sometimes P_B(A). For example, the probability that any given person has a cough on any given day may be only 5%. But if we know or assume that the person has a cold, then they are much more likely to be coughing. The conditional probability of coughing given that you have a cold might be a much higher 75%.
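The cough example can be worked through with the standard definition P(A|B) = P(A and B) / P(B). The head counts below are hypothetical, chosen only so that they reproduce the 5% and 75% figures in the text.

```python
from fractions import Fraction

# Hypothetical population, chosen to match the percentages in the text.
population = 1000
with_cold = 40          # people who have a cold
cough_and_cold = 30     # people who have a cold and a cough
cough_no_cold = 20      # people who cough without having a cold

p_cough = Fraction(cough_and_cold + cough_no_cold, population)
p_cold = Fraction(with_cold, population)
p_cough_and_cold = Fraction(cough_and_cold, population)

# P(cough | cold) = P(cough and cold) / P(cold)
p_cough_given_cold = p_cough_and_cold / p_cold

print(float(p_cough))             # 0.05
print(float(p_cough_given_cold))  # 0.75
```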

Transaction Processing is information processing in computer science that is divided into individual, indivisible operations called transactions. Each transaction must succeed or fail as a complete unit; it can never be only partially complete. Atomic Transaction.
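The all-or-nothing behaviour of a transaction can be seen with Python's built-in sqlite3 module. This is a minimal sketch: the accounts table, the names, and the transfer helper are invented for the example. The second transfer fails halfway through, and the database rolls back the half that had already been applied.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE accounts ("
    "name TEXT PRIMARY KEY, balance INTEGER CHECK (balance >= 0))"
)
conn.executemany("INSERT INTO accounts VALUES (?, ?)", [("alice", 100), ("bob", 50)])
conn.commit()


def transfer(conn, sender, receiver, amount):
    """Move money as one transaction: both updates happen, or neither does."""
    try:
        with conn:  # commits on success, rolls back if an exception is raised
            conn.execute(
                "UPDATE accounts SET balance = balance + ? WHERE name = ?",
                (amount, receiver),
            )
            conn.execute(
                "UPDATE accounts SET balance = balance - ? WHERE name = ?",
                (amount, sender),
            )
        return True
    except sqlite3.IntegrityError:
        return False  # the CHECK constraint rejected a negative balance


def balances(conn):
    return dict(conn.execute("SELECT name, balance FROM accounts ORDER BY name"))


print(transfer(conn, "alice", "bob", 30), balances(conn))
print(transfer(conn, "alice", "bob", 500), balances(conn))
```

In the failed transfer the credit to bob is executed first, yet it does not survive: the rollback undoes it, so the transaction is never left partially complete.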

Relevance Logic is a kind of non-classical logic requiring the antecedent and consequent of implications to be relevantly related. Relevance logics may be viewed as a family of substructural or modal logics. (It is generally, but not universally, called relevant logic by Australian logicians, and relevance logic by other English-speaking logicians.) Relevance logic aims to capture aspects of implication that are ignored by the "material implication" operator in classical truth-functional logic, namely the notion of relevance between antecedent and consequent of a true implication. This idea is not new: C. I. Lewis was led to invent modal logic, and specifically strict implication, on the grounds that classical logic grants paradoxes of material implication such as the principle that a falsehood implies any proposition. Hence "if I'm a donkey, then two and two is four" is true when translated as a material implication, yet it seems intuitively false since a true implication must tie the antecedent and consequent together by some notion of relevance. And whether or not I'm a donkey seems in no way relevant to whether two and two is four.
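The paradox is easy to reproduce, because classical material implication "p implies q" is defined as "not p, or q" and looks only at truth values. The short sketch below prints the truth table and then evaluates the donkey sentence; the variable names are just labels for the example.

```python
def implies(p, q):
    """Classical material implication: false only when p is true and q is false."""
    return (not p) or q


# Full truth table.
for p in (False, True):
    for q in (False, True):
        print(p, q, implies(p, q))

# "If I'm a donkey, then two and two is four."
i_am_a_donkey = False
two_and_two_is_four = (2 + 2 == 4)
print(implies(i_am_a_donkey, two_and_two_is_four))  # True
```

Nothing in the function checks whether the two statements have anything to do with each other, which is exactly the gap relevance logic sets out to address.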

Algorithms - Apps

Multitier Architecture is a client–server architecture in which presentation, application processing, and data management functions are physically separated. The most widespread use of multitier architecture is the three-tier architecture.

Computer Architecture - Architecture (engineering)

Software - Hardware - Networks

Innovation - Information Management - Knowledge Management - Computer Multitasking - Variables - Virtualization - Computer Generated Imagery.

Instruction Set Architecture specifies the behavior of machine code running on implementations of that ISA in a fashion that does not depend on the characteristics of that implementation, providing binary compatibility between implementations. This enables multiple implementations of an ISA that differ in performance, physical size, and monetary cost (among other things), but that are capable of running the same machine code, so that a lower-performance, lower-cost machine can be replaced with a higher-cost, higher-performance machine without having to replace software. It also enables the evolution of the microarchitectures of the implementations of that ISA, so that a newer, higher-performance implementation of an ISA can run software that runs on previous generations of implementations.

If an operating system maintains a standard and compatible application binary interface (ABI) for a particular ISA, machine code for that ISA and operating system will run on future implementations of that ISA and newer versions of that operating system. However, if an ISA supports running multiple operating systems, it does not guarantee that machine code for one operating system will run on another operating system, unless the first operating system supports running machine code built for the other operating system. An ISA can be extended by adding instructions or other capabilities, or adding support for larger addresses and data values; an implementation of the extended ISA will still be able to execute machine code for versions of the ISA without those extensions. Machine code using those extensions will only run on implementations that support those extensions. The binary compatibility that they provide makes ISAs one of the most fundamental abstractions in computing.

An ISA is an abstract model of a computer. It is also referred to as architecture or computer architecture. A realization of an ISA, such as a central processing unit (CPU), is called an implementation. In general, an ISA defines the supported data types, the registers, the hardware support for managing main memory, fundamental features (such as the memory consistency, addressing modes, virtual memory), and the input/output model of a family of implementations of the ISA.

Languages used in Computer Programming

Programming Language is a formal computer language designed to communicate instructions to a machine, particularly a computer. Programming languages can be used to create programs to control the behavior of a machine or to express algorithms.

List of Programming Languages (PDF)

Computer Languages (wiki)

Dynamic Language is a class of high-level programming languages which, at runtime, execute many common programming behaviors that static programming languages perform during compilation. These behaviors could include extension of the program, by adding new code, by extending objects and definitions, or by modifying the type system. Although similar behaviours can be emulated in nearly any language, with varying degrees of difficulty, complexity and performance costs, dynamic languages provide direct tools to make use of them. Many of these features were first implemented as native features in the Lisp programming language.
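Two of the runtime behaviours mentioned above, extending an existing definition and adding new code to a running program, can be shown in Python, itself a dynamic language. The Robot class and the function names are invented for the example.

```python
class Robot:
    def __init__(self, name):
        self.name = name


robot = Robot("R2")


# Extend the class definition at runtime by attaching a new method.
def greet(self):
    return f"Hello, I am {self.name}"


Robot.greet = greet  # objects created earlier gain the method too

# Extend the program at runtime by executing source code held in a string.
namespace = {}
exec("def double(x):\n    return x * 2", namespace)

print(robot.greet())            # Hello, I am R2
print(namespace["double"](21))  # 42
```

In a statically compiled language both steps would normally have to happen before the program runs.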

Programming Paradigms are a way to classify programming languages based on their features. Languages can be classified into multiple paradigms. Some paradigms are concerned mainly with implications for the execution model of the language, such as allowing side effects, or whether the sequence of operations is defined by the execution model. Other paradigms are concerned mainly with the way that code is organized, such as grouping code into units along with the state that is modified by the code. Yet others are concerned mainly with the style of syntax and grammar. Common programming paradigms include: imperative, which allows side effects; functional, which disallows side effects; declarative, which does not state the order in which operations execute; object-oriented, which groups code together with the state the code modifies; procedural, which groups code into functions; logic, which has a particular style of execution model coupled to a particular style of syntax and grammar; and symbolic programming, which has a particular style of syntax and grammar. Redundancy.
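Because Python supports several paradigms, one small task (summing the squares of the even numbers in a list) can be written three ways to show the difference in style. The task and the class name are chosen only for illustration.

```python
from functools import reduce

numbers = [1, 2, 3, 4, 5, 6]

# Imperative: step-by-step statements that modify a variable (a side effect).
total = 0
for n in numbers:
    if n % 2 == 0:
        total += n * n

# Functional: compose functions and avoid modifying any state.
functional_total = reduce(
    lambda acc, n: acc + n * n,
    filter(lambda n: n % 2 == 0, numbers),
    0,
)


# Object-oriented: group the state together with the code that modifies it.
class SquareAccumulator:
    def __init__(self):
        self.total = 0

    def add(self, n):
        if n % 2 == 0:
            self.total += n * n


accumulator = SquareAccumulator()
for n in numbers:
    accumulator.add(n)

print(total, functional_total, accumulator.total)  # 56 56 56
```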

Formal Speaking Languages

C programming language is a general-purpose, imperative computer programming language, supporting structured programming, lexical variable scope and recursion, while a static type system prevents many unintended operations. By design, C provides constructs that map efficiently to typical machine instructions, and therefore it has found lasting use in applications that had formerly been coded in assembly language, including operating systems, as well as various application software for computers ranging from supercomputers to embedded systems. List of C-family Programming Languages (wiki)

C++ is a general-purpose programming language. It has imperative, object-oriented and generic programming features, while also providing facilities for low-level memory manipulation.

C# is a multi-paradigm programming language encompassing strong typing, imperative, declarative, functional, generic, object-oriented (class-based), and component-oriented programming disciplines.

Objective-C is a general-purpose, object-oriented programming language that adds Smalltalk-style messaging to the C programming language. It was the main programming language used by Apple for the OS X and iOS operating systems, and their respective application programming interfaces (APIs) Cocoa and Cocoa Touch prior to the introduction of Swift.

LPC is an object-oriented programming language derived from C and developed originally by Lars Pensjö to facilitate MUD building on LPMuds. Though designed for game development, its flexibility has led to it being used for a variety of purposes, and to its evolution into the language Pike.

Perl is a family of high-level, general-purpose, interpreted, dynamic programming languages. The languages in this family include Perl 5 and Perl 6 (renamed Raku in 2019).

Verilog is a hardware description language (HDL) used to model electronic systems. It is most commonly used in the design and verification of digital circuits at the register-transfer level of abstraction. It is also used in the verification of analog circuits and mixed-signal circuits, as well as in the design of genetic circuits.

Pascal is an imperative and procedural programming language, which Niklaus Wirth designed in 1968–69 and published in 1970, as a small, efficient language intended to encourage good programming practices using structured programming and data structuring. A derivative known as Object Pascal designed for object-oriented programming was developed in 1985.

Java is a general-purpose computer programming language that is concurrent, class-based, object-oriented, and specifically designed to have as few implementation dependencies as possible. It is intended to let application developers "write once, run anywhere" (WORA), meaning that compiled Java code can run on all platforms that support Java without the need for recompilation. Java applications are typically compiled to bytecode that can run on any Java virtual machine (JVM) regardless of computer architecture. As of 2016, Java is one of the most popular programming languages in use, particularly for client-server web applications, with a reported 9 million developers. Java was originally developed by James Gosling at Sun Microsystems (which has since been acquired by Oracle Corporation) and released in 1995 as a core component of Sun Microsystems' Java platform. The language derives much of its syntax from C and C++, but it has fewer low-level facilities than either of them.

JavaScript is a high-level, dynamic, untyped, and interpreted programming language. It has been standardized in the ECMAScript language specification. Alongside HTML and CSS, JavaScript is one of the three core technologies of World Wide Web content production; the majority of websites employ it, and all modern Web browsers support it without the need for plug-ins. JavaScript is prototype-based with first-class functions, making it a multi-paradigm language, supporting object-oriented, imperative, and functional programming styles. It has an API for working with text, arrays, dates and regular expressions, but does not include any I/O, such as networking, storage, or graphics facilities, relying for these upon the host environment in which it is embedded.

HTML or Hypertext Markup Language, is the standard markup language for creating web pages and web applications. With Cascading Style Sheets (CSS) and JavaScript it forms a triad of cornerstone technologies for the World Wide Web. Web browsers receive HTML documents from a webserver or from local storage and render them into multimedia web pages. HTML describes the structure of a web page semantically and originally included cues for the appearance of the document. HTML elements are the building blocks of HTML pages. With HTML constructs, images and other objects, such as interactive forms, may be embedded into the rendered page. It provides a means to create structured documents by denoting structural semantics for text such as headings, paragraphs, lists, links, quotes and other items. HTML elements are delineated by tags, written using angle brackets. Tags such as <img /> and <input /> introduce content into the page directly. Others such as <p>...</p> surround and provide information about document text and may include other tags as sub-elements. Browsers do not display the HTML tags, but use them to interpret the content of the page. HTML can embed programs written in a scripting language such as JavaScript which affect the behavior and content of web pages. Inclusion of CSS defines the look and layout of content. Html 5 Framework

The World Wide Web Consortium (W3C), maintainer of both the HTML and the CSS standards, has encouraged the use of CSS over explicit presentational HTML since 1997.

CSS or Cascading Style Sheets, is a style sheet language used for describing the presentation of a document written in a markup language. Although most often used to set the visual style of web pages and user interfaces written in HTML and XHTML, the language can be applied to any XML document, including plain XML, SVG and XUL, and is applicable to rendering in speech, or on other media. Along with HTML and JavaScript, CSS is a cornerstone technology used by most websites to create visually engaging webpages, user interfaces for web applications, and user interfaces for many mobile applications. CSS is designed primarily to enable the separation of document content from document presentation, including aspects such as the layout, colors, and fonts. This separation can improve content accessibility, provide more flexibility and control in the specification of presentation characteristics, enable multiple HTML pages to share formatting by specifying the relevant CSS in a separate .css file, and reduce complexity and repetition in the structural content. Separation of formatting and content makes it possible to present the same markup page in different styles for different rendering methods, such as on-screen, in print, by voice (via speech-based browser or screen reader), and on Braille-based tactile devices. It can also display the web page differently depending on the screen size or viewing device. Readers can also specify a different style sheet, such as a CSS file stored on their own computer, to override the one the author specified. Changes to the graphic design of a document (or hundreds of documents) can be applied quickly and easily, by editing a few lines in the CSS file they use, rather than by changing markup in the documents. The CSS specification describes a priority scheme to determine which style rules apply if more than one rule matches against a particular element. 
In this so-called cascade, priorities (or weights) are calculated and assigned to rules, so that the results are predictable.

Python is a widely used high-level programming language for general-purpose programming, created by Guido van Rossum and first released in 1991. An interpreted language, Python has a design philosophy which emphasizes code readability (notably using whitespace indentation to delimit code blocks rather than curly braces or keywords), and a syntax which allows programmers to express concepts in fewer lines of code than possible in languages such as C++ or Java. The language provides constructs intended to enable writing clear programs on both a small and large scale. Python features a dynamic type system and automatic memory management and supports multiple programming paradigms, including object-oriented, imperative, functional programming, and procedural styles. It has a large and comprehensive standard library. Python interpreters are available for many operating systems, allowing Python code to run on a wide variety of systems. CPython, the reference implementation of Python, is open source software and has a community-based development model, as do nearly all of its variant implementations. CPython is managed by the non-profit Python Software Foundation.

PHP is a server-side scripting language designed primarily for web development but also used as a general-purpose programming language. Originally created by Rasmus Lerdorf in 1994, the PHP reference implementation is now produced by The PHP Development Team. PHP originally stood for Personal Home Page, but it now stands for the recursive acronym PHP: Hypertext Preprocessor. PHP code may be embedded into HTML or HTML5 code, or it can be used in combination with various web template systems, web content management systems and web frameworks. PHP code is usually processed by a PHP interpreter implemented as a module in the web server or as a Common Gateway Interface (CGI) executable. The web server combines the results of the interpreted and executed PHP code, which may be any type of data, including images, with the generated web page. PHP code may also be executed with a command-line interface (CLI) and can be used to implement standalone graphical applications. The standard PHP interpreter, powered by the Zend Engine, is free software released under the PHP License. PHP has been widely ported and can be deployed on most web servers on almost every operating system and platform, free of charge. The PHP language evolved without a written formal specification or standard until 2014, leaving the canonical PHP interpreter as a de facto standard. Since 2014 work has gone on to create a formal PHP specification.

MySQL is an open-source relational database management system (RDBMS). Its name is a combination of "My", the name of co-founder Michael Widenius' daughter, and "SQL", the abbreviation for Structured Query Language. The MySQL development project has made its source code available under the terms of the GNU General Public License, as well as under a variety of proprietary agreements. MySQL was owned and sponsored by a single for-profit firm, the Swedish company MySQL AB, now owned by Oracle Corporation. For proprietary use, several paid editions are available, and offer additional functionality. MySQL is a central component of the LAMP open-source web application software stack (and other "AMP" stacks). LAMP is an acronym for "Linux, Apache, MySQL, Perl/PHP/Python". Applications that use the MySQL database include: TYPO3, MODx, Joomla, WordPress, phpBB, MyBB, and Drupal. MySQL is also used in many high-profile, large-scale websites, including Google (though not for searches), Facebook, Twitter, Flickr, and YouTube.
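To give a feel for what working with a relational database looks like, here is a minimal sketch in Python. Note the assumption: it uses SQLite (through Python's built-in `sqlite3` module) as a stand-in, because it needs no server to run. The SQL statements are similar in spirit to what you would send to MySQL, but they are not guaranteed to be identical, and the `languages` table is hypothetical.

```python
import sqlite3

# An in-memory database: nothing is written to disk.
conn = sqlite3.connect(":memory:")

# Create a table, then add a few rows (years taken from the entries on this page).
conn.execute("CREATE TABLE languages (name TEXT PRIMARY KEY, year INTEGER)")
conn.executemany(
    "INSERT INTO languages (name, year) VALUES (?, ?)",
    [("Python", 1991), ("Lisp", 1958), ("D", 2001)],
)

# Ask a question of the data with a query.
rows = conn.execute(
    "SELECT name FROM languages WHERE year < 2000 ORDER BY year"
).fetchall()
conn.close()

print(rows)
```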

Create a Database in MySQL

PHP Mysql

MySql Tutorial

Cloud Sql

MySQL Tutorial for Beginners - 1 - Creating a Database and Adding Tables to it (youtube)

Creating and Managing Research Databases in Microsoft Access (youtube)

Excel Magic Trick #184: Setup Database in Excel (youtube)

BASIC, an acronym for "Beginner's All-purpose Symbolic Instruction Code", is a family of general-purpose, high-level programming languages whose design philosophy emphasizes ease of use.

Smalltalk is an object-oriented, dynamically typed, reflective programming language. Smalltalk was created as the language to underpin the "new world" of computing exemplified by "human–computer symbiosis." It was designed and created in part for educational use, more so for constructionist learning, at the Learning Research Group (LRG) of Xerox PARC by Alan Kay, Dan Ingalls, Adele Goldberg, Ted Kaehler, Scott Wallace, and others during the 1970s. The language was first generally released as Smalltalk-80. Smalltalk-like languages are in continuing active development and have gathered loyal communities of users around them. ANSI Smalltalk was ratified in 1998 and represents the standard version of Smalltalk.

Lisp is a family of computer programming languages with a long history and a distinctive, fully parenthesized prefix notation. Originally specified in 1958, Lisp is the second-oldest high-level programming language in widespread use today. Only Fortran is older, by one year. Lisp has changed since its early days, and many dialects have existed over its history. Today, the best known general-purpose Lisp dialects are Common Lisp and Scheme.

D is an object-oriented, imperative, multi-paradigm system programming language created by Walter Bright of Digital Mars and released in 2001.

Go is a free and open source programming language created at Google in 2007 by Robert Griesemer, Rob Pike, and Ken Thompson. It is a compiled, statically typed language in the tradition of Algol and C, with garbage collection, limited structural typing, memory safety features and CSP-style concurrent programming features added.

Rust is a general purpose programming language sponsored by Mozilla Research. It is designed to be a "safe, concurrent, practical language", supporting functional and imperative-procedural paradigms. Rust is syntactically similar to C++, but is designed for better memory safety while maintaining performance. Rust is open source software. The design of the language has been refined through the experiences of writing the Servo web browser layout engine and the Rust compiler. A large portion of current commits to the project are from community members. Rust won first place for "most loved programming language" in the Stack Overflow Developer Survey in 2016 and 2017.

Ada is a structured, statically typed, imperative, wide-spectrum, and object-oriented high-level computer programming language, extended from Pascal and other languages. It has built-in language support for design-by-contract, extremely strong typing, explicit concurrency, offering tasks, synchronous message passing, protected objects, and non-determinism. Ada improves code safety and maintainability by using the compiler to find errors at compile time rather than leaving them to surface as runtime errors. Ada is an international standard; the current version (known as Ada 2012) is defined by ISO/IEC 8652:2012.

Limbo is a programming language for writing distributed systems and is the language used to write applications for the Inferno operating system. It was designed at Bell Labs by Sean Dorward, Phil Winterbottom, and Rob Pike. The Limbo compiler generates architecture-independent object code which is then interpreted by the Dis virtual machine or compiled just before runtime to improve performance. Therefore all Limbo applications are completely portable across all Inferno platforms.

Limbo's approach to concurrency was inspired by Hoare's Communicating Sequential Processes (CSP), as implemented and amended in Pike's earlier Newsqueak language and Winterbottom's Alef.

Markup Language is a system for annotating a document in a way that is syntactically distinguishable from the text. The idea and terminology evolved from the "marking up" of paper manuscripts, i.e., the revision instructions by editors, traditionally written with a blue pencil on authors' manuscripts.

Swift Playgrounds is an iPad app that helps you learn and explore coding in Swift, the same language used to create apps for the App Store.

Each programming language has its own set of rules, its own area of input, and its own capabilities and functions. So knowing what you want to accomplish will help you decide what code or programming language you need to learn. Learning computer programming languages is easier than at any other time in history, especially if you can get online and access all the free educational material. Don't believe all the media hype that's telling kids that they need to learn computer code, because those people are morons. There's more important knowledge to learn first.

Best Coding Language to Learn

Computer Languages needed to build an App: HTML5 is the ideal programming language if you are looking to build a Web-fronted app for mobile devices. ...Objective-C. The primary programming language for iOS apps, Objective-C was chosen by Apple to build apps that are robust and scalable. ...Swift. ...C++ ...C# ...Java.

Computer Languages Needed to be a Software Developer: SQL (pronounced 'sequel') tops the job list since it can be found far and wide in various flavors. ...Java. The tech community recently celebrated the 20th anniversary of Java. ...JavaScript. ...C# ...C++ ...Python. ...PHP. ...Ruby on Rails.

Computer Programming Contest - USA Computing Olympiad

Top Coder technical competitions helping real world organizations solve real world problems.

Devoxx conference by developers.

Folding Proteins - Learning Games

Designing a Programming System for understanding Program

Competitive Programming is a mind sport usually held over the Internet or a local network, involving participants trying to program according to provided specifications. Contestants are referred to as sport programmers.

Code

Code is a system of rules to convert information—such as a letter, word, sound, image, voltage, or gesture—into another form or representation, sometimes shortened or secret, for communication through a channel or storage in a medium. Paul Ford, what is code?

Encoding is the activity of converting data or information into code. Unicode (symbols) - Source Code.

Binary Code represents text or computer processor instructions using the binary number system's two binary digits, 0 and 1. The binary code assigns a bit string to each symbol or instruction. For example, a binary string of eight binary digits (bits) can represent any of 256 possible values and can therefore correspond to a variety of different symbols, letters or instructions.

Bit is a basic unit of information in computing and digital communications. A bit can have only one of two values, and may therefore be physically implemented with a two-state device. These values are most commonly represented as either 0 or 1. Transistors.

Byte is a unit of digital information that most commonly consists of eight bits. (01100101).
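The byte shown above can be explored with a few lines of Python. This is a minimal sketch; reading the byte as a text character is an assumption (it uses the common ASCII/Unicode mapping), since the same eight bits could just as well stand for a number or an instruction.

```python
byte_text = "01100101"           # the eight bits from the entry above

value = int(byte_text, 2)        # read the bits as a base-2 number
letter = chr(value)              # look up the character with that number
patterns = 2 ** len(byte_text)   # how many different values 8 bits can hold

# Going the other way: from a character back to its eight bits.
bits_again = format(ord(letter), "08b")

print(value, letter, patterns, bits_again)
```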

Megabyte is one million bytes of information. (8 Million Bits).

Sizes of Digital Information

Block of Code is something that has a set of instructions to perform a particular task or tasks, such as calculations or sending data to specified places. Modules, Routines and Subroutines, Methods, Functions. Algorithm.

Block Programming is a section of code which is grouped together.

Block Code is any member of the large and important family of error-correcting codes that encode data in blocks.
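One of the simplest members of this family is the repetition code, where every bit is sent three times and the receiver takes a majority vote. The sketch below is only an illustration of the idea (the function names are made up here); practical systems use far more efficient block codes.

```python
def encode(bits):
    """Repeat every bit three times, so each block of 3 carries 1 data bit."""
    return [bit for bit in bits for _ in range(3)]

def decode(coded):
    """Take a majority vote inside each block of three bits."""
    return [1 if sum(coded[i:i + 3]) >= 2 else 0
            for i in range(0, len(coded), 3)]

message = [1, 0, 1, 1]
sent = encode(message)

# Simulate noise on the channel: flip a single bit in the second block.
received = sent.copy()
received[4] ^= 1

decoded = decode(received)
print(decoded == message)
```

Because only one bit in the block was damaged, the other two still outvote it and the original message is recovered.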

Snippet is a programming term for a small region of re-usable source code, machine code, or text. Ordinarily, these are formally defined operative units to incorporate into larger programming modules. Snippet management is a feature of some text editors, program source code editors, IDEs, and related software. It allows the user to avoid repetitive typing in the course of routine edit operations.

Code::Blocks is a free, open-source cross-platform IDE that supports multiple compilers including GCC, Clang and Visual C++.

Computer programming: How to write code functions | lynda.com tutorial (youtube)

Compiler is a computer program (or a set of programs) that transforms source code written in a programming language (the source language) into another computer language (the target language), with the latter often having a binary form known as object code. The most common reason for converting source code is to create an executable program.
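Python itself can show a small version of this idea. In the sketch below, the built-in `compile` function turns a line of source text into a code object, which is then executed. One caveat: Python compiles to bytecode for its own virtual machine rather than to the native object code described above, so treat this as an analogy. The expression and variable names are made up for the example.

```python
source = "price * quantity"      # source code, as plain text

# Translate the text into a code object (Python bytecode).
code = compile(source, "<example>", "eval")

# The compiled form is a sequence of raw bytes, not readable text.
bytecode = code.co_code

# Run the compiled code, supplying values for the names it uses.
result = eval(code, {"price": 4, "quantity": 3})
print(result)
```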

Source Code

Coding is Love. Learning to Code is about improving life. You help people to learn things easier, you help people to accomplish goals and tasks faster. Coding helps people save time, energy and resources. So people will have more time to improve life for others, as well as have more time to relax and enjoy life. Coding is Love.

IOPS (Input/Output Operations Per Second) is a measurement that describes the performance characteristics of a storage device.

Assignment sets and/or re-sets the value stored in the storage location(s) denoted by a variable name.
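A minimal sketch of setting and re-setting a value in Python. The variable names are arbitrary.

```python
counter = 5              # set: the name "counter" now refers to 5
counter = counter + 1    # re-set: read the old value, store a new one

name = "Ada"             # set
name = "Grace"           # re-set: the old value is replaced

print(counter, name)
```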

Pseudocode is an informal high-level description of the operating principle of a computer program or other algorithm. It uses the structural conventions of a normal programming language, but is intended for human reading rather than machine reading. Pseudocode typically omits details that are essential for machine understanding of the algorithm, such as variable declarations, system-specific code and some subroutines. The programming language is augmented with natural language description details, where convenient, or with compact mathematical notation. The purpose of using pseudocode is that it is easier for people to understand than conventional programming language code, and that it is an efficient and environment-independent description of the key principles of an algorithm. It is commonly used in textbooks and scientific publications that are documenting various algorithms, and also in planning of computer program development, for sketching out the structure of the program before the actual coding takes place. No standard for pseudocode syntax exists, as a program in pseudocode is not an executable program. Pseudocode resembles, but should not be confused with, skeleton programs which can be compiled without errors. Flowcharts, drakon-charts and Unified Modeling Language (UML) charts can be thought of as a graphical alternative to pseudocode, but are more spacious on paper. Languages such as HAGGIS bridge the gap between pseudocode and code written in programming languages. Its main use is to introduce students to high level languages through use of this hybrid language.

Branch in computer science is an instruction in a computer program that can cause a computer to begin executing a different instruction sequence and thus deviate from its default behavior of executing instructions in order. Branch (or branching, branched) may also refer to the act of switching execution to a different instruction sequence as a result of executing a branch instruction. Branch instructions are used to implement control flow in program loops and conditionals (i.e., executing a particular sequence of instructions only if certain conditions are satisfied). A branch instruction can be either an unconditional branch, which always results in branching, or a conditional branch, which may or may not cause branching depending on some condition. Also, depending on how it specifies the address of the new instruction sequence (the "target" address), a branch instruction is generally classified as direct, indirect or relative, meaning that the instruction contains the target address, or it specifies where the target address is to be found (e.g., a register or memory location), or it specifies the difference between the current and target addresses.

Instruction Set Architecture is an abstract model of a computer. It is also referred to as architecture or computer architecture. A realization of an ISA is called an implementation. An ISA permits multiple implementations that may vary in performance, physical size, and monetary cost (among other things); because the ISA serves as the interface between software and hardware. Software that has been written for an ISA can run on different implementations of the same ISA. This has enabled binary compatibility between different generations of computers to be easily achieved, and the development of computer families. Both of these developments have helped to lower the cost of computers and to increase their applicability. For these reasons, the ISA is one of the most important abstractions in computing today.

Control Flow is the order in which individual statements, instructions or function calls of an imperative program are executed or evaluated. The emphasis on explicit control flow distinguishes an imperative programming language from a declarative programming language. Within an imperative programming language, a control flow statement is a statement, the execution of which results in a choice being made as to which of two or more paths to follow. For non-strict functional languages, functions and language constructs exist to achieve the same result, but they are usually not termed control flow statements. A set of statements is in turn generally structured as a block, which in addition to grouping, also defines a lexical scope. Interrupts and signals are low-level mechanisms that can alter the flow of control in a way similar to a subroutine, but usually occur as a response to some external stimulus or event (that can occur asynchronously), rather than execution of an in-line control flow statement. At the level of machine language or assembly language, control flow instructions usually work by altering the program counter. For some central processing units (CPUs), the only control flow instructions available are conditional or unconditional branch instructions, also termed jumps.
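To make the idea of "altering the program counter" concrete, here is a toy machine written in Python. Everything about it is an assumption made for illustration: the instruction names (`set`, `add`, `dec`, `jz`, `jmp`, `halt`) and the two registers are invented, and no real CPU is being modeled. It does show a conditional branch (`jz`) and an unconditional branch (`jmp`) working together to form a loop.

```python
def run(program):
    registers = {"count": 0, "total": 0}
    pc = 0                                   # program counter
    while True:
        op, *args = program[pc]
        if op == "halt":
            break
        pc += 1                              # default: go to the next instruction
        if op == "set":
            registers[args[0]] = args[1]
        elif op == "add":
            registers[args[0]] += registers[args[1]]
        elif op == "dec":
            registers[args[0]] -= 1
        elif op == "jz":                     # conditional branch: jump if zero
            if registers[args[0]] == 0:
                pc = args[1]
        elif op == "jmp":                    # unconditional branch
            pc = args[0]
        else:
            raise ValueError(f"unknown instruction: {op}")
    return registers

# Adds 3 + 2 + 1 by looping until "count" reaches zero.
program = [
    ("set", "count", 3),        # 0
    ("set", "total", 0),        # 1
    ("jz", "count", 6),         # 2: leave the loop when count is 0
    ("add", "total", "count"),  # 3
    ("dec", "count"),           # 4
    ("jmp", 2),                 # 5: go back to the test
    ("halt",),                  # 6
]

final = run(program)
print(final)
```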

Declaration in computer programming is a language construct that specifies properties of an identifier: it declares what a word (identifier) "means". Declarations are most commonly used for functions, variables, constants, and classes, but can also be used for other entities such as enumerations and type definitions. Beyond the name (the identifier itself) and the kind of entity (function, variable, etc.), declarations typically specify the data type (for variables and constants), or the type signature (for functions); types may also include dimensions, such as for arrays. A declaration is used to announce the existence of the entity to the compiler; this is important in those strongly typed languages that require functions, variables, and constants, and their types to be specified with a declaration before use, and is used in forward declaration. The term "declaration" is frequently contrasted with the term "definition", but meaning and usage vary significantly between languages. Declarations are particularly prominent in languages in the ALGOL tradition, including the BCPL family, most prominently C and C++, and also Pascal. Java uses the term "declaration", though Java does not have separate declarations and definitions.

Language Construct is a syntactically allowable part of a program that may be formed from one or more lexical tokens in accordance with the rules of a programming language. In simpler terms, it is the syntax/way a programming language is written.

Subroutine is a sequence of program instructions that performs a specific task, packaged as a unit. This unit can then be used in programs wherever that particular task should be performed. Subprograms may be defined within programs, or separately in libraries that can be used by many programs. In different programming languages, a subroutine may be called a procedure, a function, a routine, a method, or a subprogram. The generic term callable unit is sometimes used. The name subprogram suggests a subroutine behaves in much the same way as a computer program that is used as one step in a larger program or another subprogram. A subroutine is often coded so that it can be started several times and from several places during one execution of the program, including from other subroutines, and then branch back (return) to the next instruction after the call, once the subroutine's task is done. The idea of a subroutine was initially conceived by John Mauchly during his work on ENIAC, and recorded in a Harvard symposium in January of 1947 entitled 'Preparation of Problems for EDVAC-type Machines'. Maurice Wilkes, David Wheeler, and Stanley Gill are generally credited with the formal invention of this concept, which they termed a closed subroutine, contrasted with an open subroutine or macro. Subroutines are a powerful programming tool, and the syntax of many programming languages includes support for writing and using them. Judicious use of subroutines (for example, through the structured programming approach) will often substantially reduce the cost of developing and maintaining a large program, while increasing its quality and reliability. Subroutines, often collected into libraries, are an important mechanism for sharing and trading software. 
The discipline of object-oriented programming is based on objects and methods (which are subroutines attached to these objects or object classes). In the compiling method called threaded code, the executable program is basically a sequence of subroutine calls.
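A minimal sketch of a subroutine in Python, where it is called a function. The name `rectangle_area` and the room sizes are made up for the example. The task is written once and then used from more than one place.

```python
def rectangle_area(width, height):
    """A specific task, packaged as a unit: compute the area of a rectangle."""
    return width * height

# The same subroutine is called from two different places.
kitchen = rectangle_area(3, 4)
hallway = rectangle_area(1, 6)

# After each call, execution returns here and continues.
total = kitchen + hallway
print(total)
```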

Character Encoding is used to represent a repertoire of characters by some kind of an encoding system. Depending on the abstraction level and context, corresponding code points and the resulting code space may be regarded as bit patterns, octets, natural numbers, electrical pulses, etc. A character encoding is used in computation, data storage, and transmission of textual data. Character set, character map, codeset and code page are related, but not identical, terms.
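A short sketch of the difference between a character's code point and the bytes used to store it. The word "café" is just a convenient example, since its last letter falls outside plain ASCII. UTF-8 and Latin-1 are two real encodings that store that letter differently.

```python
text = "caf\u00e9"                        # "café", with é written as an escape

code_points = [ord(ch) for ch in text]    # one number per character

utf8_bytes = text.encode("utf-8")         # é takes two bytes in UTF-8
latin1_bytes = text.encode("latin-1")     # é takes one byte in Latin-1

# Decoding with the matching encoding gives the original text back.
round_trip = utf8_bytes.decode("utf-8")

print(code_points, utf8_bytes, latin1_bytes)
```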

Software Bug is an error, flaw, failure or fault in a computer program or system that causes it to produce an incorrect or unexpected result, or to behave in unintended ways. The process of finding and fixing bugs is termed "Debugging" and often uses formal techniques or tools to pinpoint bugs, and since the 1950s, some computer systems have been designed to also deter, detect or auto-correct various computer bugs during operations. Most bugs arise from mistakes and errors made in either a program's source code or its design, or in components and operating systems used by such programs. A few are caused by compilers producing incorrect code. A program that contains a large number of bugs, and/or bugs that seriously interfere with its functionality, is said to be buggy (defective). Bugs can trigger errors that may have ripple effects. Bugs may have subtle effects or cause the program to crash or freeze the computer. Other bugs qualify as security bugs and might, for example, enable a malicious user to bypass access controls in order to obtain unauthorized privileges. Buggy code usually means that code writing used poor coding practices and/or poor testing practices such that their code is likely to have a greater-than-average number of bugs and/or is difficult to maintain without introducing bugs.
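Here is a small, deliberately buggy example, a so-called off-by-one error, next to a corrected version. Both function names are made up. The intent is to add up the whole numbers from 1 to n.

```python
def sum_to_buggy(n):
    # Bug: range(n) counts 0, 1, ..., n - 1, so n itself is left out.
    return sum(range(n))

def sum_to_fixed(n):
    # Fix: count from 1 up to and including n.
    return sum(range(1, n + 1))

print(sum_to_buggy(5), sum_to_fixed(5))
```

The buggy version runs without any error message and simply gives an incorrect result, which is why testing with known answers matters.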

Voltage used as a Language

Think of a mechanical computer. You press a lever, some gears turn, and then there's an output. In an electrical computer, you press a key on a keyboard, which changes the voltage from 0 V (ground) to something like 5 V. That then goes through some transistors (switches) that do various things until you end up seeing a letter on the screen. That letter is again represented by voltages which are being applied to the pixels. It's hard to explain because there are so many layers, but basically some sort of electrical signal goes into a series of switches which then change millions of things resulting in an output. All of these switches are operating on a change in voltage between two references (usually ground and then 1.5, 3.3, 5, or some other voltage).

Here's another example: Marble adding machine (youtube) - Think of the little levers being left as ground and right as some voltage like 5 V. Those are the ones and zeroes. Zeroes and ones are not converted to physical voltages. They are physical voltages, or more precisely open or closed electrical circuits. Astute use of electrical properties and circuitries gives those open or closed circuits various incarnations, like the flickering of an LED, the magnetization of a small portion of a tape, and so on.

Code is converted from conceptual form to a voltage level the instant the programmer presses a key. The keypress is registered as a change in voltage inside the keyboard, and every further interaction will be electronic. Remember, the actual data stored on the system is electronic. When you open a file in a hex editor to look at the bits, the computer is using the graphics card and the monitor to convert the electronic bits back into symbols for you to look at. If something is in RAM, it's stored as voltages.

How does a computer understand the language? Consider a really basic microprocessor. It has 8 storage places, and can add or invert numbers within them. For the assembly command: (ADD R3 R2 R1).
We are trying to say R3 = R2+R1. In machine code, this might look like: (1 011 010 001). This gets sent on a 10-bit bus (just 10 wires tied together) from the program register (where commands are stored) to the processor. The first bit gets split off from the rest and gets set as the control bit of a 9-bit 2:1 multiplexer. This sends the other bits to the addition circuitry. The addition circuitry can retrieve the values from the registers, perform the addition (look up a ripple carry adder for more information), and store the result in another register. Now imagine this implemented with 100 different functions and 64-bit instructions, and you start to get close to how processors today operate.

You write a program, it compiles into machine code and you run it, which means the OS loads it into memory from a drive and jumps the CPU to the starting point of your program. Now you have machine code as a series of bits in RAM that the CPU can access. Reading the memory ends up setting memory bus lines to high or low states. The lines, along with the clock signal, are connected to the CPU, where an insanely complex set of electronic logic changes state according to the new input, which can entail triggering new RAM reads (including memory containing more instructions) and writes. How line signals get manipulated in a CPU is a broad topic. Look up Logic Gates and then try wrapping your head around a clocked memory cell (J/K flip flop). From these, more complex stuff like adders can be constructed. The chip has some supply voltage made available to it, and at each stage of computation, that voltage is either switched on or off. And that switching is ultimately controlled by the instructions of the program, through combinational logic, muxes, demuxes, latches, clocks, etc. The full adder can be implemented in five logic gates, and a ripple carry adder capable of adding values wider than a single bit can be built from multiple full adders. The ones and zeroes are physical voltages.
There's no "conversion" from one to the other. You can analyze a digital circuit in terms of 1s and 0s or in terms of voltages depending on which model is more useful at the time. 1s and 0s in a program are stored physically either as magnetic orientations of atoms in a hard drive or electrical charges in a flash memory cell. Those charges go into physical digital logic components like transistors to trigger signals in other transistors. Software doesn't really exist as a thing separate from hardware, it's just a useful abstraction for humans. Everything is an actual physical thing. All of your thoughts and memories are actually nerves in your brain connecting and interacting with each other physically through electrical and chemical interfaces.

There are set levels for what voltage constitutes on or off. The 1s and 0s are always voltage levels. If you press a button on your keyboard it doesn't send ones and zeroes which later become electrical signals, it sends electrical signals which can be understood as a sequence of ones and zeroes. When your processor loads an instruction, it's not just loading a sequence of ones and zeroes, flip flops are also being set to the voltage levels which 1 and 0 are used to represent. These models are just different levels of abstraction, there is no physical conversion from one to the other. That is, as you type it in the computer your typing generates a series of on/off bits that represent the code. Those bits are then converted into different sequences of on/off bits by a compiler and those on/off bits are stored as such on some storage medium. When the computer reads those bits back they come back as a bunch of on/off bits already. There's no "conversion". The closest thing to what you're asking would be "the keyboard has a little chip in it that says when the 'A' key is pressed you should send this sequence of on/off bits to represent that". After that point it's all just voltages that represent on/off bits.
In reality, there's never an abstract binary bit separate from the physical electronic representations. We just call them 0 and 1 because it's a convenient formalism for working with digital logic. But it's electricity all the way down. Computers are basically a bunch of light switches hooked to little spring loaded motors hooked to more light switches. The only exceptions are things like HDDs and optical drives; with those, you have a little thing that converts the "light switch" positions into magnetic fields aligned one way or another for the HDD, and into dark and reflective spots on optical drives, and vice-versa. There's no "it's digital here and now it's analog here." It's always analog; there's no such thing as digital in the actual physical world, that's merely an abstraction.

Everything stored in the computer (or at least in volatile storage, i.e. memory) is a series of voltages. If you had a probe small enough and sensitive enough you could place it at any point in the circuit and look at the voltage level and determine whether that level represented a 1 or a 0. Everything happens by switching those voltages around. Everything in a computer is clocked. There is an oscillator in there, in fact many, that triggers everything to happen. It's just like the tick of a clock but generally happening at very fast speeds. Upon a clock tick the voltage states of each bit in each register connected to a particular operation are passed through a series of gates to complete the desired operation. That operation is determined by the states of other registers through, again, a long chain of logic gates. Multiply this by a few thousand and you have a computer.

Machine code, assembly and higher languages are stored as voltage states in registers, RAM and flash memory. So they are always electrical. Compiling and assembling are just translating human readable code into numerical operators, constants and memory addresses.
Effectively it's just rearranging it in memory into a format where the computer can execute it. Machine language doesn't get converted to physical voltages. Physical voltages are the fundamental thing. It is more accurate to say that physical voltages get interpreted as machine language/0s and 1s. The element that does this interpretation is a transistor. It is what fundamentally decides what is a 0 and what is a 1. The transistor has 3 connections and acts as a switch. One of the inputs is a control input and depending on the voltage on the control input, current is either allowed to pass between the other connections or current is blocked (it basically varies the resistance between the other 2 connections dramatically). There is some voltage below which the transistor will easily pass current (say 2.1V). We interpret voltages in the 0-2.1V range as a 0. There is some higher voltage where the transistor will block almost all current (say 2.6V). We interpret voltages in the 2.6-3.3V range as a 1. In-between voltages (2.1-2.6V) are not allowed and the system is designed to not produce them. There are other transistors that invert this logic and pass current when the control is a low voltage and block current when the control is a high voltage. Of course, I'm vastly simplifying things to focus on just the most fundamental part. These transistors determine which physical voltages are 0 and which are 1.

The understanding of the machine language code happens at a higher level of abstraction. Basically, networks of transistors are designed that create the correct 0/1 output pattern given the 0/1 input pattern of the parameters and the 0/1 input pattern of the machine instruction. There's a whole host of processes and tools that go into creating the machine language itself, that is the binary encoding stored on the computer. Then there's the CPU and its functions.
Then there's the execution of the program within the operating system and how the different hardware devices can affect the program execution. Then there's the hardware itself and how it's connected together and operates. None of those act in isolation either, they are all inter-dependent and influence each other.

Consider the Altair 8800, the grand-daddy of all microcomputers. Notice that it has no keyboard. It was programmed by physically toggling the switches, actually opening and closing electrical circuits. Inputting and outputting those programs in the form of a series of letters you can type on a keyboard and read on a screen came later. Each letter on your keyboard is an electrical switch, and how keystrokes are handled to change the state of a memory cell is a matter of basic electronics. Moving further back in time, see the ENIAC, generally presented as the first programmable computer. Then, programming meant physically rearranging the cabling. Even more physical, Konrad Zuse's Z1 through Z4 were programmable and electromechanical. Earlier still came Charles Babbage's Analytical Engine, for which Lady Ada Lovelace invented the concept of programming and wrote the first computer program ever. MIPS instruction set architecture in a hardware description language.

A program in machine language is stored as patterns of ones and zeroes and is read into main memory from storage. There it is also stored as patterns of ones and zeroes (high and low voltages). The magic is something called an instruction decoder. This fetches the information at the location pointed to by the program counter and then decodes the instruction and executes it. This interpretation can be anything from a micro machine of its own (with its own program) to direct implementation using logic gates. Execution will then enable particular logic to act on the data as directed.
For example, if we have a register containing the value 0 and we want to increment it, at the low level the CPU must be told to load the register into the arithmetic-logic unit (ALU), then apply the signal to say increment the value, then take the result and write it to the register. So, in effect, three operations must take place (read, calculate and write), and we must be careful not to take the result of the ALU before the calculation is complete. Often there is something called a clock in the background to help keep things synchronised and to enable things to communicate at the right time. This would enable the right components to communicate over a common bus. The last thing that would happen is that the program counter would be incremented (using a similar mechanism) so that it points to the next instruction.

The program exists on a storage device, having been compiled down to machine code. The program is loaded into memory, which is when the voltages are established. Flip-Flop in electronics (wiki). The machine code is composed of discrete instructions for a specific processor. In an instruction, the opcode is what dictates the operation the processor will perform. Resistor Ladder (wiki). Using an 8-bit PIC, start by writing some C code and eventually work your way down to assembly. With some simple tools like a multimeter and/or an oscilloscope, you'll be able to work out what's going on. When people say 1s and 0s, what we're really referring to are logic levels, where a 0 refers to a 'low' level and a 1 refers to a 'high' level. Since these are just voltage levels, the computer can recognize and operate on these natively. Also, a computer program is usually stored as 0s and 1s before compiling as well, as everything on your computer will be stored that way. However, after compiling, you will get a file filled with what is called machine code. Machine code is a list of binary instructions (as opposed to text like the original source code) that can be directly interpreted by the circuitry in the processor.
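
The fetch, decode, execute cycle described above can be sketched in a few lines of Python. This is only a toy model, not how any real processor is built: the three opcodes (HALT, LOAD, INC), their numeric values, and the `run` function are invented here for illustration.

```python
# Toy machine: opcodes and encoding are hypothetical, chosen only for illustration.
HALT, LOAD, INC = 0, 1, 2


def run(program, max_steps=1000):
    """Run a list of (opcode, operand) pairs and return the final register value."""
    register = 0  # a single data register
    pc = 0        # program counter: index of the current instruction
    for _ in range(max_steps):
        opcode, operand = program[pc]  # fetch the instruction the counter points at
        if opcode == HALT:             # decode, then execute
            break
        elif opcode == LOAD:
            register = operand
        elif opcode == INC:
            value = register           # read the register into the "ALU"
            result = value + 1         # calculate
            register = result          # write the result back
        else:
            raise ValueError(f"unknown opcode {opcode}")
        pc += 1                        # last step: point at the next instruction
    return register


program = [(LOAD, 0), (INC, 0), (INC, 0), (HALT, 0)]
print(run(program))  # 2
```

The read, calculate, write steps inside the INC branch mirror the three operations from the register example; a real CPU does them with wires and a clock rather than Python variables.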

Why Do Computers Use 1s and 0s? Binary and Transistors Explained (youtube).
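
As a rough sketch of how voltages become 1s and 0s, the example thresholds mentioned earlier (0-2.1V read as 0, 2.6-3.3V read as 1, anything in between not allowed) can be written down directly. The numbers are the illustrative figures from the text, not the specification of any real chip, and the function names are made up for this example.

```python
# Example thresholds taken from the text; real logic families use other values.
V_LOW_MAX = 2.1   # volts: at or below this, the level reads as 0
V_HIGH_MIN = 2.6  # volts: at or above this, the level reads as 1
V_SUPPLY = 3.3    # volts: highest voltage in this example


def logic_level(volts):
    """Return 0 or 1 for a valid voltage, or None for the forbidden band."""
    if 0.0 <= volts <= V_LOW_MAX:
        return 0
    if V_HIGH_MIN <= volts <= V_SUPPLY:
        return 1
    return None  # in-between or out-of-range voltage: not a valid logic level


def read_bits(voltages):
    """Turn a list of measured voltages into a string of bits."""
    bits = []
    for volts in voltages:
        level = logic_level(volts)
        if level is None:
            raise ValueError(f"{volts} V is not a valid logic level")
        bits.append(str(level))
    return "".join(bits)


print(read_bits([3.1, 3.0, 0.2, 0.1]))  # 1100
```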

How does a computer read voltages and interpret transistors that are on or off?

Computers are digital. Digital means we build components from "analog" hardware, like transistors, resistors, etc., which work with two states: On and Off. One way to picture this is a reverse-biased Zener diode (Z-diode), which is not conductive until a certain voltage threshold is reached; in actual chips, transistors do the switching. That way, you either have 0V or <threshold>+ Volt. The resulting components are called "logical gates". Some examples of gates include "AND", "OR", "NAND", "XOR", etc. With these gates, your computer hardware can be built easily in a way which allows for storing states (e.g. NAND flip-flops) and doing logical things, like mathematical computations. One storage place can save the state of either 0 or 1. This state is called a "bit". Bits work in the binary system. Let me translate that for you to our widely used decimal system:

Binary Decimal

0 0

1 1

10 2

100 4

101 5

1010 10
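
The translation in the table can be done by hand: read the bits left to right, and for each one double the running total and add the bit. Here is a minimal sketch of that, with a function name invented for this example. Python's built-in `int(bits, 2)` does the same job.

```python
def binary_to_decimal(bits):
    """Convert a string of 0s and 1s to its decimal value."""
    value = 0
    for bit in bits:
        if bit not in "01":
            raise ValueError(f"not a binary digit: {bit!r}")
        value = value * 2 + int(bit)  # shift what we have left, then add the new bit
    return value


# Reproduce the rows of the table above.
for bits in ["0", "1", "10", "100", "101", "1010"]:
    print(bits, binary_to_decimal(bits))
```

Going the other way, `format(10, "b")` gives the string `1010`.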

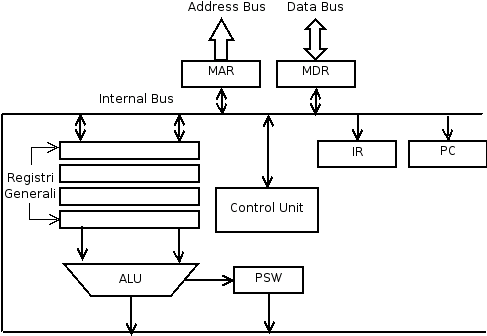
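
To make the gates mentioned above a bit more concrete, here is a small sketch that builds NOT, AND, OR and XOR out of nothing but NAND, and then uses two cross-coupled NANDs to store one bit (a simple NAND latch, the building block of a flip-flop). The function and class names are made up for this example, and the latch inputs are active-low: a 0 on the set input stores a 1, a 0 on the reset input stores a 0.

```python
def nand(a, b):
    """NAND gate: output is 0 only when both inputs are 1."""
    return 0 if (a and b) else 1


def not_(a):
    return nand(a, a)


def and_(a, b):
    return not_(nand(a, b))


def or_(a, b):
    return nand(not_(a), not_(b))


def xor(a, b):
    n = nand(a, b)
    return nand(nand(a, n), nand(b, n))


class NandLatch:
    """One bit of storage made from two cross-coupled NAND gates."""

    def __init__(self):
        self.q, self.q_bar = 0, 1

    def step(self, set_n, reset_n):
        # Re-evaluate both gates until the outputs stop changing.
        for _ in range(4):
            q = nand(set_n, self.q_bar)
            q_bar = nand(reset_n, q)
            if (q, q_bar) == (self.q, self.q_bar):
                break
            self.q, self.q_bar = q, q_bar
        return self.q


latch = NandLatch()
print(latch.step(0, 1))  # 1  (set)
print(latch.step(1, 1))  # 1  (hold: the bit is remembered)
print(latch.step(1, 0))  # 0  (reset)
print(latch.step(1, 1))  # 0  (hold)
```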

In order to do some processing, your computer uses a clock generator with a very high frequency. The frequency is usually one of the selling points of a CPU, like 4GHz. It means the clock generator alternates between logical 0 and 1 about 4,000,000,000 times a second. The signal from the clock generator is used to move information through your logical gates and to give them time to do their thing. Logical gates do not provide instant calculations, because even electromagnetic changes (important! It's not the electrons which have to travel fast) have to travel the distance and need a certain time to do so. With the help of the logical gates, a computer is able to pump your data from memory into your CPU. Inside the CPU, there are usually one or more ALUs. ALU stands for Arithmetic Logic Unit and describes a circuit (made of logical gates) which is able to understand a certain combination of bits and do calculations on them. One ALU usually has an input of several bits which are filled in sequentially, meaning the bit stream is pumped into one side of the ALU until the input is "full". Then the ALU is signalled that it should process the input.
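
To put the clock figures into numbers, here is a quick back-of-the-envelope calculation. It uses the speed of light in a vacuum as an upper bound; signals in real wires and chips travel slower than that, so the actual distance per cycle is shorter. The function names are invented for this example.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # in a vacuum; an upper bound for any signal


def clock_period_seconds(frequency_hz):
    """Time for one full clock cycle."""
    return 1.0 / frequency_hz


def max_distance_per_cycle_m(frequency_hz):
    """Farthest any signal could possibly travel during one clock cycle."""
    return SPEED_OF_LIGHT_M_PER_S / frequency_hz


four_ghz = 4e9
print(f"{clock_period_seconds(four_ghz) * 1e9:.2f} ns")      # 0.25 ns
print(f"{max_distance_per_cycle_m(four_ghz) * 100:.1f} cm")  # 7.5 cm
```

So at 4GHz one cycle lasts a quarter of a nanosecond, and even light covers only a few centimetres in that time, which is why the gates need to be given time to do their thing.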

Let's take a look at a simple example. The example ALU can work on 8 bits and the input sequence is 11001000. One word is 3 bits long (a "word" is the length of a data entity). The first thing the ALU needs is a command set ("opcodes"), for example ADD (sum of two numbers), DEC (decrease), INC (increment data),... Those commands are evaluated via simple logic. For the sake of simplicity, let's say the command is encoded in 2 bits (which allows 3 commands + NOOP (no operation; do nothing)) and is stored in the first two bits of the input sequence (usually an ALU has a separate opcode input). Let's say the ALU knows the following commands:

Bitcode Command

00 NOOP

01 ADD

10 MOV

11 INC

Since the first two bits in our sequence are 11, the operation for the computation is "INC". The ALU can then proceed to increment the number which follows. Incrementing only takes one input: the number to increment. So the ALU takes the first word after the command (001) and increments it via mathematical logic (also implemented with logical gates). The result is then 010, which is pushed out of the ALU and saved in a result memory location.

But how can we do logical things in addition to math? The thing is that there are a few more commands which allow more logical operations, like jump or jump if equal. Those commands have to be executed by another part of the CPU: a Control Unit. It does the data fetching and executes certain memory-related operations, but all in all is quite similar to how the ALU works. By manipulating data and storing it in well-defined places or pushing it to certain busses, the data can be used in other places. For example, it can be sent to the PCI controller, then consumed by the graphics card and sent to the monitor in order to display an image. It can be sent to a USB controller which stores it on your USB drive. It all depends on the operations and the content of certain memory locations, which are used by logical gates to decide what happens.

First of all, your code is always translated into machine code (it does not matter whether it is compiled to machine code or an interpreter translates it). Machine code is nothing more than a sequence of specific signals on a bus. Chips with access to that bus will usually use a clock signal to trigger transistors, which then allow the signals on the bus to enter the chip. Your signal itself will then trigger more transistors inside the chip to change their state, resulting in a new signal on the output side. That signal could be a write operation on a memory chip. The clock signal will then allow other chips to read that output. A clock signal usually comes from the CPU. CPU vendors advertise their CPUs with the speed of the internal CPU clocks. For example, if you have an Intel processor with 3.4GHz, the internal clock will output an on-off signal with a frequency of 3.4GHz. That's it. There are other chips in your computer which have their own clocks (for example the RAM), though. That's why you have to make sure that your parts are compatible, or they won't be able to sync.
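
Going back to the example ALU, the decoding of 11001000 can be sketched as follows. The 2-bit opcode table and the 3-bit word size come from the example; everything else is an assumption made for illustration: the function name, the choice to wrap results around at 3 bits, and leaving MOV unimplemented because the text never says what it does.

```python
OPCODES = {"00": "NOOP", "01": "ADD", "10": "MOV", "11": "INC"}
WORD_BITS = 3  # one word is 3 bits long in the example


def alu(sequence):
    """Decode an 8-bit input string and return (command, result bits or None)."""
    if len(sequence) != 8 or set(sequence) - {"0", "1"}:
        raise ValueError("expected exactly 8 binary digits")
    command = OPCODES[sequence[:2]]   # first two bits select the command
    first = int(sequence[2:5], 2)     # first word after the command
    second = int(sequence[5:8], 2)    # second word (only ADD uses it)
    mask = (1 << WORD_BITS) - 1       # assumption: results wrap around at 3 bits
    if command == "NOOP":
        return command, None
    if command == "INC":
        result = (first + 1) & mask
    elif command == "ADD":
        result = (first + second) & mask
    else:
        # MOV is listed in the table but not described in the text.
        raise NotImplementedError(command)
    return command, format(result, f"0{WORD_BITS}b")


print(alu("11001000"))  # ('INC', '010')
print(alu("01001010"))  # ('ADD', '011')
```

The first call is exactly the case walked through above: opcode 11 means INC, the word 001 follows, and the result is 010.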

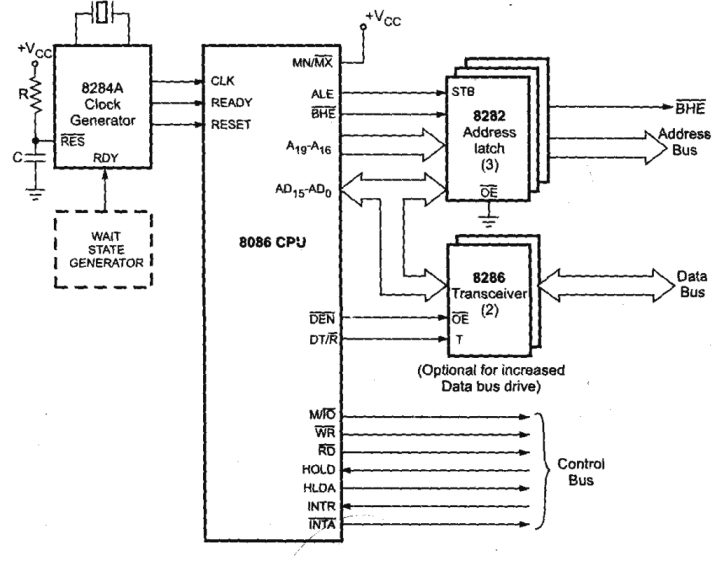

Take a look at this rough CPU schematic. See the clock generator inputting a CLK signal into the CPU? That's what triggers the read/write operations from/to the internal bus, which results in your code being read, triggering internal transistors and even telling others when to read from the CPU. Which leads to one sad truth: CPU outputs cannot be read as soon as they are ready. They always have a certain amount of time to finish, and even if they finish earlier, everything has to wait for the set amount of time >.< It's easier that way, though!

Code Translations (ASCII)

Encryption - Algorithms

Logic in computer science covers the overlap between the field of logic and that of computer science. The topic can essentially be divided into three main areas: Theoretical foundations and analysis. Use of computer technology to aid logicians. Use of concepts from logic for computer applications. Data.

Human-Computer Interaction (interfaces) - Data Protection

Side Effect: a function has a side effect when it modifies some state or has an observable interaction with its calling functions or the outside world.
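
A small sketch of the difference, with function and variable names invented for this example: the first function only computes and returns a value, while the second also changes a list that lives outside of it, which is a side effect.

```python
def add_pure(a, b):
    """No side effects: the result depends only on the inputs."""
    return a + b


call_history = []  # state that lives outside the function


def add_and_record(a, b):
    """Same result, but also modifies outside state (a side effect)."""
    total = a + b
    call_history.append(total)
    return total


print(add_pure(2, 3))        # 5
print(add_and_record(2, 3))  # 5
print(call_history)          # [5]
```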

Computer Virus is malware that, when executed, replicates by reproducing itself or infecting other programs by modifying them.

Learning to Code - Schools and Resources

If someone asks you "Do you know how to Code?" just ask them, "What Language? And what do you want to accomplish?"

Paul Ford, "What Is Code?" (2015)

Computer Programming is the craft of writing useful, maintainable, and extensible source code which can be interpreted or compiled by a computing system to perform a meaningful task.

Computer Help and Resources

Computer Knowledge

Structure and Interpretation of Computer Programs

The Art of Computer Programming

EverCode is a Mac app for software developers. It provides basic code editing and syntax highlighting.

Technology News

What are some of the things that I can do with Computer Programming Languages? What kind of problems can I solve?

You can create computer software, create a website, or fix computers that have software problems. You can create an App that saves time, increases productivity, increases quality, increases your abilities and creativity, and also helps you learn more about yourself and the world around you. You could even create Algorithms for Artificial Intelligence, or write instructions for Robotics. You can even make your own Computer Operating System, or learn to modify and improve an OS using the C and C++ programming languages. You can make your computer run better by fixing Registry errors. You can also learn to create and manage databases.

Programming

Programmer is a person who writes computer software. The term computer programmer can refer to a specialist in one area of computer programming or to a generalist who writes code for many kinds of software. One who practices or professes a formal approach to programming may also be known as a programmer analyst. A programmer's primary computer language (Assembly, COBOL, C, C++, C#, Java, Lisp, Python, etc.) is often prefixed to these titles, and those who work in a web environment often prefix their titles with web. A range of occupations, including: software developer, web developer, mobile applications developer, embedded firmware developer, software engineer, computer scientist, or software analyst, while they do involve programming, also require a range of other skills. The use of the simple term programmer for these positions is sometimes considered an insulting or derogatory oversimplification. Mind Programming.