BK101

Knowledge Base

Hearing

Hearing is the ability to perceive sound via the auditory sense or an organ such as the ear. Hearing is the act of listening attentively. Hearing is receiving a communication from someone, and is also the range within which a voice can be heard. Hearing is the ability to perceive sound by detecting vibrations and changes in pressure of the surrounding medium through time.

Auditory System is the sensory system for the sense of hearing. It includes both the sensory organs (the ears) and the auditory parts of the sensory system. Listening Effectively when others Speak.

Auditory System

is the

sensory system for the sense of hearing. It includes both the sensory

organs (the ears) and the auditory parts of the sensory system. Listening Effectively when

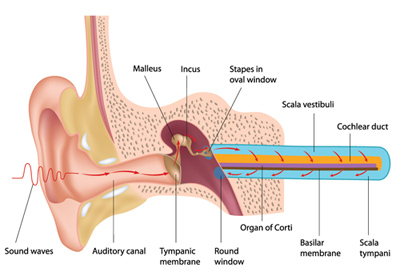

others Speak.Ear is the organ used for hearing and balance. In mammals, the ear is usually described as having three parts—the outer ear, middle ear and the inner ear. The outer ear consists of the pinna and the ear canal. Since the outer ear is the only visible portion of the ear in most animals, the word "ear" often refers to the external part alone. The middle ear includes the tympanic cavity and the three ossicles. The inner ear sits in the bony labyrinth, and contains structures which are key to several senses: the semicircular canals, which enable balance and eye tracking when moving; the utricle and saccule, which enable balance when stationary; and the cochlea, which enables hearing. The ears of vertebrates are placed somewhat symmetrically on either side of the head, an arrangement that aids sound localisation.

Cochlea is the auditory portion of the inner ear. It is a spiral-shaped cavity in the bony labyrinth, in humans making 2.5 turns around its axis, the modiolus. A core component of the cochlea is the Organ of Corti, the sensory organ of hearing, which is distributed along the partition separating fluid chambers in the coiled tapered tube of the cochlea. The name is derived from the Latin word for snail shell, which in turn is from the Greek κοχλίας kokhlias ("snail, screw"), from κόχλος kokhlos ("spiral shell") in reference to its coiled shape; the cochlea is coiled in mammals with the exception of monotremes.

Saccule is a bed of sensory cells situated in the inner ear. The saccule translates head movements into neural impulses which the brain can interpret. The saccule detects linear accelerations and head tilts in the vertical plane. When the head moves vertically, the sensory cells of the saccule are disturbed and the neurons connected to them begin transmitting impulses to the brain. These impulses travel along the vestibular portion of the eighth cranial nerve to the vestibular nuclei in the brainstem. The vestibular system is important in maintaining balance, or equilibrium. The vestibular system includes the saccule, utricle, and the three semicircular canals. The vestibule is the name of the fluid-filled, membranous duct that contains these organs of balance. The vestibule is encased in the temporal bone of the skull.

Hair Cells are the sensory receptors of both the auditory system and the vestibular system in the ears of all vertebrates. Through mechanotransduction, hair cells detect movement in their environment. In mammals, the auditory hair cells are located within the spiral organ of Corti on the thin basilar membrane in the cochlea of the inner ear. They derive their name from the tufts of stereocilia called hair bundles that protrude from the apical surface of the cell into the fluid-filled cochlear duct. Mammalian cochlear hair cells are of two anatomically and functionally distinct types, known as outer and inner hair cells. Damage to these hair cells results in decreased hearing sensitivity, and because the inner ear hair cells cannot regenerate, this damage is permanent. However, other organisms, such as birds and the frequently studied zebrafish, have hair cells that can regenerate. The human cochlea contains on the order of 3,500 inner hair cells and 12,000 outer hair cells at birth. The outer hair cells mechanically amplify low-level sound that enters the cochlea. The amplification may be powered by the movement of their hair bundles, or by an electrically driven motility of their cell bodies. This so-called somatic electromotility amplifies sound in all land vertebrates. It is affected by the closing mechanism of the mechanical sensory ion channels at the tips of the hair bundles. The inner hair cells transform the sound vibrations in the fluids of the cochlea into electrical signals that are then relayed via the auditory nerve to the auditory brainstem and to the auditory cortex.


Dizziness - Fainting - Head Spins - Noise - Ringing

Vertigo is when a person feels as if they or the objects around them are moving when they are not. Often it feels like a spinning or swaying movement. This may be associated with nausea, vomiting, sweating, or difficulties walking. It is typically worsened when the head is moved. Vertigo is the most common type of dizziness.

Vestibular System is the sensory system that provides the leading contribution to the sense of balance and spatial orientation for the purpose of coordinating movement with balance. Together with the cochlea, a part of the auditory system, it constitutes the labyrinth of the inner ear in most mammals, situated in the vestibulum in the inner ear. As movements consist of rotations and translations, the vestibular system comprises two components: the semicircular canal system, which indicates rotational movements; and the otoliths, which indicate linear accelerations. The vestibular system sends signals primarily to the neural structures that control eye movements, and to the muscles that keep an animal upright. The projections to the former provide the anatomical basis of the vestibulo-ocular reflex, which is required for clear vision; and the projections to the muscles that control posture are necessary to keep an animal upright. The brain uses information from the vestibular system in the head and from proprioception throughout the body to understand the body's dynamics and kinematics (including its position and acceleration) from moment to moment.

Audiology is a branch of science that studies hearing, balance, and related disorders. Its practitioners, who treat those with hearing loss and proactively prevent related damage, are audiologists. Employing various testing strategies (e.g. hearing tests, otoacoustic emission measurements, videonystagmography, and electrophysiologic tests), audiology aims to determine whether someone can hear within the normal range, and if not, which portions of hearing (high, middle, or low frequencies) are affected, to what degree, and where the lesion causing the hearing loss is found (outer ear, middle ear, inner ear, auditory nerve and/or central nervous system). If an audiologist determines that a hearing loss or vestibular abnormality is present, they will provide recommendations to the patient as to what options (e.g. hearing aid, cochlear implants, appropriate medical referrals) may be of assistance. In addition to testing hearing, audiologists can also work with a wide range of clientele in rehabilitation (individuals with tinnitus, auditory processing disorders, cochlear implant users and/or hearing aid users), from pediatric populations to veterans, and may perform assessment of tinnitus and the vestibular system.

Audiobooks - E-Books

Absolute Threshold of Hearing is the minimum sound level of a pure tone that an average human ear with normal hearing can hear with no other sound present. The absolute threshold relates to the sound that can just be heard by the organism. The absolute threshold is not a discrete point, and is therefore classed as the point at which a sound elicits a response a specified percentage of the time. This is also known as the auditory threshold. The threshold of hearing is generally reported as the RMS sound pressure of 20 micropascals, corresponding to a sound intensity of 0.98 pW/m² at 1 atmosphere and 25 °C. It is approximately the quietest sound a young human with undamaged hearing can detect at 1,000 Hz. The threshold of hearing is frequency-dependent and it has been shown that the ear's sensitivity is best at frequencies between 2 kHz and 5 kHz, where the threshold reaches as low as −9 dB SPL.
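The figures quoted above can be checked with a short calculation. The sketch below is illustrative only: it assumes a plane wave in air and uses assumed textbook values for air density and the speed of sound at about 25 °C (these values and the function names are not from the original text).

```python
import math

P_REF = 20e-6  # reference RMS sound pressure in pascals (20 micropascals, from the text)


def pressure_to_intensity(p_rms, rho=1.184, c=346.1):
    """Plane-wave sound intensity in W/m^2: I = p^2 / (rho * c).

    rho (kg/m^3) and c (m/s) are assumed values for air at about 25 °C and 1 atm.
    """
    return p_rms ** 2 / (rho * c)


def spl_to_pressure(level_db):
    """Convert a level in dB SPL to RMS pressure in pascals."""
    return P_REF * 10 ** (level_db / 20)


def pressure_to_spl(p_rms):
    """Convert RMS pressure in pascals to a level in dB SPL."""
    return 20 * math.log10(p_rms / P_REF)


if __name__ == "__main__":
    # Intensity at the 20 micropascal reference, in picowatts per square metre
    print(f"{pressure_to_intensity(P_REF) * 1e12:.2f} pW/m^2")
    # RMS pressure corresponding to the -9 dB SPL threshold, in micropascals
    print(f"{spl_to_pressure(-9) * 1e6:.1f} µPa")
```

Under these assumptions the reference pressure works out to roughly 0.98 pW/m², and −9 dB SPL corresponds to an RMS pressure of about 7 micropascals.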

Ultrasound is sound waves with frequencies higher than the upper audible limit of human hearing. Ultrasound is not different from "normal" (audible) sound in its physical properties, except that humans cannot hear it. This limit varies from person to person and is approximately 20 kilohertz (20,000 hertz) in healthy young adults. Ultrasound devices operate with frequencies from 20 kHz up to several gigahertz. High power ultrasound produces cavitation that facilitates particle disintegration or reactions.

Masking Threshold is the sound pressure level a sound needs in order to be audible in the presence of another noise called a "masker". This threshold depends upon the frequency, the type of masker, and the kind of sound being masked. The effect is strongest between two sounds close in frequency. In the context of audio transmission, there are some advantages to being unable to perceive a sound. In audio encoding, for example, better compression can be achieved by omitting the inaudible tones. This requires fewer bits to encode the sound, and reduces the size of the final file. In audio compression applications it is uncommon to work with only one tone. Most sounds are composed of multiple tones, and there can be many possible maskers at the same frequency. In this situation, it is necessary to compute the global masking threshold, using a high-resolution Fast Fourier transform of 512 or 1024 points to determine the frequencies that comprise the sound. Because there are bandwidths that humans are not able to hear, it is necessary to know the signal level, masker type, and the frequency band before computing the individual thresholds. To keep the masking threshold from falling below the threshold in quiet, the threshold in quiet is added to the computation of partial thresholds. This allows computation of the signal-to-mask ratio (SMR).
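As a rough illustration of the last step, the sketch below combines individual masking thresholds with the threshold in quiet and then derives a signal-to-mask ratio. It assumes the thresholds are combined by summing their powers, which is one common convention in psychoacoustic models; the function names and example levels are hypothetical and not taken from any particular codec.

```python
import math


def db_to_power(level_db):
    """Convert a level in dB to a relative power."""
    return 10 ** (level_db / 10)


def global_masking_threshold(threshold_in_quiet_db, individual_thresholds_db):
    """Combine the threshold in quiet with per-masker thresholds (all in dB).

    Assumption: contributions add in the power domain, so the result can
    never fall below the threshold in quiet.
    """
    total = db_to_power(threshold_in_quiet_db)
    total += sum(db_to_power(t) for t in individual_thresholds_db)
    return 10 * math.log10(total)


def signal_to_mask_ratio(signal_level_db, global_threshold_db):
    """SMR in dB: how far the signal sits above the global masking threshold."""
    return signal_level_db - global_threshold_db


if __name__ == "__main__":
    # Hypothetical levels for one frequency band
    threshold = global_masking_threshold(5.0, [40.0, 40.0])
    print(f"global threshold: {threshold:.2f} dB")
    print(f"SMR for a 60 dB signal: {signal_to_mask_ratio(60.0, threshold):.2f} dB")
```

A band whose SMR is zero or negative lies at or below the masking threshold, so an encoder could spend few or no bits on it.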

White Noise - Tinnitus - Electronic Noise

How the brain Distinguishes Speech from Noise. For the first time, researchers have provided physiological evidence that a pervasive neuromodulation system - a group of neurons that regulate the functioning of more specialized neurons - strongly influences sound processing in an important auditory region of the brain. The neuromodulator, acetylcholine, may even help the main auditory brain circuitry distinguish speech from noise. The team conducted electrophysiological experiments and data analysis to demonstrate that the input of the neurotransmitter acetylcholine, a pervasive neuromodulator in the brain, influences the encoding of acoustic information by the medial nucleus of the trapezoid body (MNTB), the most prominent source of inhibition to several key nuclei in the lower auditory system. MNTB neurons have previously been considered computationally simple, driven by a single large excitatory synapse and influenced by local inhibitory inputs. The team demonstrates that in addition to these inputs, acetylcholine modulation enhances neural discrimination of tones from noise stimuli, which may contribute to processing important acoustic signals such as speech. Additionally, they describe novel anatomical projections that provide acetylcholine input to the MNTB. Burger studies the circuit of neurons that are "wired together" in order to carry out the specialized function of computing the locations from which sounds emanate in space. He describes neuromodulators as broader, less specific circuits that overlay the more highly-specialized ones. This modulation appears to help these neurons detect faint signals in noise. You can think of this modulation as akin to shifting an antenna's position to eliminate static for your favorite radio station.

Changing the connection between the hemispheres affects speech perception. When we listen to speech sounds, our brain needs to combine information from both hemispheres.

Head Shadow Effect is a result of single-sided deafness. When one ear has a hearing loss, it is up to the other ear to process the sound information from both sides of the head. That's why it's called a shadow: the head blocks sound in the same way that it would block sunlight.

Optimal Features for Auditory Categorization. Sound sense: Brain 'Listens' for Distinctive Features in Sounds. Researchers explore how the auditory system achieves accurate speech recognition. For humans to achieve accurate speech recognition and communicate with one another, the auditory system must recognize distinct categories of sounds -- such as words -- from a continuous incoming stream of sounds. This task becomes complicated when considering the variability in sounds produced by individuals with different accents, pitches, or intonations. Humans and vocal animals use vocalizations to communicate with members of their species. A necessary function of auditory perception is to generalize across the high variability inherent in vocalization production and classify them into behaviorally distinct categories (‘words’ or ‘call types’).

Mild-to-moderate hearing loss in children leads to changes in how the brain processes sound. Deafness in early childhood is known to lead to lasting changes in how sounds are processed in the brain, but new research published today in eLife shows that even mild-to-moderate levels of hearing loss in young children can lead to similar changes.

Sounds influence the developing brain earlier than previously thought. In experiments in newborn mice, scientists report that sounds appear to change 'wiring' patterns in areas of the brain that process sound earlier than scientists assumed and even before the ear canal opens.

People 'Hear' Flashes due to Disinhibited flow of Signals around the Brain, suggests study. Study sheds light on why some people hear the 'skipping pylon' and other 'noisy GIFs'. A synaesthesia-like effect in which people 'hear' silent flashes or movement, such as in popular 'noisy GIFs' and memes, could be due to a reduction of inhibition of signals that travel between visual and auditory areas of the brain, according to a new study. It was also found that musicians taking part in the study were significantly more likely to report experiencing visual ear than non-musician participants. Magnetic Perception.

In the auditory system, the outer ear funnels sound vibrations to the eardrum, increasing the sound pressure in the middle frequency range. The middle-ear ossicles further amplify the vibration pressure roughly 20 times. The base of the stapes couples vibrations into the cochlea via the oval window, which vibrates the perilymph liquid (present throughout the inner ear) and causes the round window to bulge out as the oval window bulges in. Vestibular and tympanic ducts are filled with perilymph, and the smaller cochlear duct between them is filled with endolymph, a fluid with a very different ion concentration and voltage. Vestibular duct perilymph vibrations bend organ of Corti outer cells (4 lines) causing prestin to be released in cell tips. This causes the cells to be chemically elongated and shrunk (somatic motor), and hair bundles to shift which, in turn, electrically affects the basilar membrane’s movement (hair-bundle motor). These motors (outer hair cells) amplify the traveling wave amplitudes over 40-fold. The outer hair cells (OHC) are minimally innervated by spiral ganglion in slow (unmyelinated) reciprocal communicative bundles (30+ hairs per nerve fiber); this contrasts with inner hair cells (IHC) that have only afferent innervation (30+ nerve fibers per one hair) but are heavily connected. There are three to four times as many OHCs as IHCs. The basilar membrane (BM) is a barrier between scalae, along the edge of which the IHCs and OHCs sit. Basilar membrane width and stiffness vary to control the frequencies best sensed by the IHC. At the cochlear base the BM is at its narrowest and most stiff (high-frequencies), while at the cochlear apex it is at its widest and least stiff (low-frequencies). The tectorial membrane (TM) helps facilitate cochlear amplification by stimulating OHC (direct) and IHC (via endolymph vibrations). TM width and stiffness parallels BM's and similarly aids in frequency differentiation. 
nerve fibers’ signals are transported by bushy cells to the binaural areas in the olivary complex, while signal peaks and valleys are noted by stellate cells, and signal timing is extracted by octopus cells. The lateral lemniscus has three nuclei: dorsal nuclei respond best to bilateral input and have complexity tuned responses; intermediate nuclei have broad tuning responses; and ventral nuclei have broad and moderately complex tuning curves. Ventral nuclei of lateral lemniscus help the inferior colliculus (IC) decode amplitude modulated sounds by giving both phasic and tonic responses (short and long notes, respectively). IC receives inputs not shown, including visual (pretectal area: moves eyes to sound. superior colliculus: orientation and behavior toward objects, as well as eye movements (saccade)) areas, pons (superior cerebellar peduncle: thalamus to cerebellum connection/hear sound and learn behavioral response), spinal cord (periaqueductal grey: hear sound and instinctually move), and thalamus. The above are what implicate IC in the ‘startle response’ and ocular reflexes. Beyond multi-sensory integration IC responds to specific amplitude modulation frequencies, allowing for the detection of pitch. IC also determines time differences in binaural hearing. The medial geniculate nucleus divides into ventral (relay and relay-inhibitory cells: frequency, intensity, and binaural info topographically relayed), dorsal (broad and complex tuned nuclei: connection to somatosensory info), and medial (broad, complex, and narrow tuned nuclei: relay intensity and sound duration). The auditory cortex (AC) brings sound into awareness/perception. AC identifies sounds (sound-name recognition) and also identifies the sound’s origin location. AC is a topographical frequency map with bundles reacting to different harmonies, timing and pitch. Right-hand-side AC is more sensitive to tonality, left-hand-side AC is more sensitive to minute sequential differences in sound. 
Rostromedial and ventrolateral prefrontal cortices are involved in activation during tonal space and storing short-term memories, respectively. The Heschl’s gyrus/transverse temporal gyrus includes Wernicke’s area and functionality; it is heavily involved in emotion-sound, emotion-facial-expression, and sound-memory processes. The entorhinal cortex is the part of the ‘hippocampus system’ that aids and stores visual and auditory memories. The supramarginal gyrus (SMG) aids in language comprehension and is responsible for compassionate responses. SMG links sounds to words with the angular gyrus and aids in word choice. SMG integrates tactile, visual, and auditory info. When sound waves travel toward you, the first part of the process of hearing involves the ears. The sound waves enter the ear and the sensitive structures of the inner ear pick up the vibrations. These vibrations stimulate nerves and create a signal which contains the sound information. The sound signal is transmitted along the nerve toward the part of the brain that enables you to perceive the sensation of hearing as sound. In this way, hearing and the nervous system work together to enable you to hear. When the ear receives sound vibrations, there are hair cells in the cochlea that vibrate and translate the sounds into electrical signals. These electrical signals are transmitted to the auditory nerve, which transmits the information to the brain. The part of the brain that enables you to understand electrical signals as specific types of sounds is called the auditory cortex. It’s located within the temporal lobe, which is on either side of the brain in the region called the cerebral cortex. There are specific neurons in the auditory cortex that can process specific frequencies of sound that we perceive as high or low pitches. There are also parts of the brainstem and the midbrain that provide automatic reflex reactions to certain types of sounds. 
A sound coming from one side reaches the nearer ear slightly before it reaches the farther ear. You can’t consciously notice this time difference because it’s only microseconds, but hearing and the nervous system work together to make this miracle possible. That’s why a stereo speaker system enables sounds to seem like they are surrounding you rather than just coming from the speakers. When each speaker is positioned correctly, your ears will hear the same sounds at slightly different times, and your brain will give you a sense of the sounds being in various locations.
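To give a feel for how small the arrival-time difference between the two ears is, here is a minimal sketch using Woodworth's spherical-head approximation. The head radius, the speed of sound, and the function name are assumptions chosen for illustration and are not from the original text; the formula is intended for source angles between 0 and 90 degrees.

```python
import math


def interaural_time_difference(azimuth_deg, head_radius_m=0.0875, speed_of_sound=343.0):
    """Approximate arrival-time difference between the ears, in seconds.

    Woodworth's spherical-head model: ITD = (r / c) * (theta + sin(theta)),
    where theta is the source angle from straight ahead (0 to 90 degrees).
    head_radius_m and speed_of_sound are assumed typical values.
    """
    theta = math.radians(azimuth_deg)
    return head_radius_m / speed_of_sound * (theta + math.sin(theta))


if __name__ == "__main__":
    for angle in (0, 30, 60, 90):
        itd_us = interaural_time_difference(angle) * 1e6
        print(f"{angle:>2} degrees: {itd_us:.0f} microseconds")
```

With these assumed values, a source directly to one side gives a difference of a few hundred microseconds, and a source straight ahead gives none.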

Role of protein in development of new hearing hair cells. Finding could lead to future treatments for hearing loss. Findings explain why GFI1 is critical to enable embryonic cells to progress into functioning adult hair cells.

Hypothesis underlying the sensitivity of mammalian auditory system overturned. The study examines how hair cells transform mechanical forces arising from sound waves into a neural electrical signal, a process called mechano-electric transduction (MET). Hair cells possess an intrinsic ability to fine-tune the sensitivity of the MET process (termed adaptation), which underlies our capacity to detect a wide range of sound intensities and frequencies with extremely high precision.

Testing Hearing Ability

Hearing Test provides an evaluation of the sensitivity of a person's sense of hearing and is most often performed by an audiologist using an audiometer. An audiometer is used to determine a person's hearing sensitivity at different frequencies. There are other hearing tests as well, e.g., Weber test and Rinne test. Audiologist is a health-care professional specializing in identifying, diagnosing, treating, and monitoring disorders of the auditory and vestibular systems.

Hearing Test to Check Hearing Loss - Hearing Check

Online Hearing Test - National Hearing Test

Dichotic Listening Test is a psychological test commonly used to investigate selective attention within the auditory system and is a subtopic of cognitive psychology and neuroscience. Specifically, it is "used as a behavioral test for hemispheric lateralization of speech sound perception." During a standard dichotic listening test, a participant is presented with two different auditory stimuli simultaneously (usually speech). The different stimuli are directed into different ears over headphones. In one such experiment, research participants were instructed to repeat aloud the words they heard in one ear while a different message was presented to the other ear. As a result of focusing on repeating the words, participants noticed little of the message to the other ear, often not even realizing that at some point it changed from English to German. At the same time, participants did notice when the voice in the unattended ear changed from a male’s to a female’s, suggesting that the selectivity of consciousness can work to tune in some information.

Multisensory Integration is the study of how information from the different sensory modalities, such as sight, sound, touch, smell, self-motion and taste, may be integrated by the nervous system. A coherent representation of objects combining modalities enables us to have meaningful perceptual experiences. Indeed, multisensory integration is central to adaptive behavior because it allows us to perceive a world of coherent perceptual entities. Multisensory integration also deals with how different sensory modalities interact with one another and alter each other's processing.

Hearing Impairment - Deafness

Hearing Impairment or Hearing Loss is a partial or total inability to hear. A Deaf person has little to no hearing. Hearing loss may occur in one or both ears. In children hearing problems can affect the ability to learn spoken language and in adults it can cause work related difficulties. In some people, particularly older people, hearing loss can result in loneliness. Hearing loss can be temporary or permanent.

American Society for Deaf Children - Every year in the U.S., about 24,000 children are born with some degree of hearing loss. Language Deprivation.

Deaf Professional Arts Network

"People with Disabilities are like Gifts from God. We learn just as much about people with disabilities as we do ourselves. Sometimes they help us realize our own disabilities, ones that we never knew we had."

Auditory Processing Disorder is an umbrella term for a variety of disorders that affect the way the brain processes auditory information. Individuals with APD usually have normal structure and function of the outer, middle and inner ear (peripheral hearing). However, they cannot process the information they hear in the same way as others do, which leads to difficulties in recognizing and interpreting sounds, especially the sounds composing speech. It is thought that these difficulties arise from dysfunction in the central nervous system.

Auditory Scene Analysis is a proposed model for the basis of auditory perception. This is understood as the process by which the human auditory system organizes sound into perceptually meaningful elements. The term was coined by psychologist Albert Bregman. The related concept in machine perception is computational auditory scene analysis (CASA), which is closely related to source separation and blind signal separation. The three key aspects of Bregman's ASA model are: segmentation, integration, and segregation.

Spatial Hearing Loss refers to a form of deafness that is an inability to use spatial cues about where a sound originates from in space. This in turn impacts upon the ability to understand speech in the presence of background noise.

Auditory Verbal Agnosia also known as pure word deafness, is the inability to comprehend speech. Individuals with this disorder lose the ability to understand language, repeat words, and write from dictation. However, spontaneous speaking, reading, and writing are preserved. Individuals who exhibit pure word deafness are also still able to recognize non-verbal sounds. Sometimes, this agnosia is preceded by cortical deafness; however, this is not always the case. Researchers have documented that in most patients exhibiting auditory verbal agnosia, the discrimination of consonants is more difficult than that of vowels, but as with most neurological disorders, there is variation among patients.

Hearing Loss and Cognitive Decline in Older Adults

Hyperacusis is a health condition characterized by an increased sensitivity to certain frequency and volume ranges of sound (a collapsed tolerance to usual environmental sound). A person with severe hyperacusis has difficulty tolerating everyday sounds, some of which may seem unpleasantly or painfully loud to that person but not to others.

Otoacoustic Emission is a sound which is generated from within the inner ear, shown to arise through a number of different cellular and mechanical causes within the inner ear. Studies have shown that OAEs disappear after the inner ear has been damaged, so OAEs are often used in the laboratory and the clinic as a measure of inner ear health. Broadly speaking, there are two types of otoacoustic emissions: spontaneous otoacoustic emissions (SOAEs), which can occur without external stimulation, and evoked otoacoustic emissions (EOAEs), which require an evoking stimulus.

Tinnitus is the hearing of sound when no external sound is present. While often described as a ringing, it may also sound like a clicking, hiss or roaring. Rarely, unclear voices or music are heard. The sound may be soft or loud, low pitched or high pitched and appear to be coming from one ear or both. Most of the time, it comes on gradually. In some people, the sound causes depression or anxiety and can interfere with concentration.

Cell Towers and Wi-Fi - Diet - Sugar - Noise Pollution - Dry Skin - White Noise - Electronic Noise

Tinnitus Maskers are a range of devices based on simple white noise machines used to add natural or artificial sound into a tinnitus sufferer's environment in order to mask or cover up the ringing. The noise is supplied by a sound generator, which may reside in or above the ear or be placed on a table or elsewhere in the environment. The noise is usually white noise or music, but in some cases, it may be patterned sound or specially tailored sound based on the characteristics of the person's tinnitus.

Diagnosis and management of somatosensory tinnitus: review article. Tinnitus is the perception of sound in the absence of an acoustic external stimulus. It affects 10–17% of the world's population and is a complex symptom with multiple causes, which is influenced by pathways other than the auditory one. Recently, it has been observed that tinnitus may be provoked or modulated by stimulation arising from the somatosensorial system, as well as from the somatomotor and visual–motor systems. This specific subgroup – somatosensory tinnitus – is present in 65% of cases, even though it tends to be underdiagnosed. As a consequence, it is necessary to establish evaluation protocols and specific treatments focusing on both the auditory pathway and the musculoskeletal system. Subjective Tinnitus.

Does being exposed to quiet or low-noise places for long periods of time make your hearing more sensitive? After I listened to music with my headphones on, I noticed less ringing in my ears. It's like a workout for ears that have been underused. Acute is having or demonstrating ability to recognize or draw fine distinctions.

Why we Hear other People's Footsteps and Ignore our Own. A team of scientists has uncovered the neural processes mice use to ignore their own footsteps. The mice developed an adjustable "sensory filter" that allowed them to ignore the sounds of their own footsteps. In turn, this allowed them to better detect other sounds arising from their surroundings. When we learn to speak or to play music, we predict what sounds we're going to hear, such as when we prepare to strike keys on a piano, and we compare this to what we actually hear. We use mismatches between expectation and experience to change how we play and we get better over time because our brain is trying to minimize these errors. Being unable to make predictions like this is also thought to be involved in a spectrum of afflictions. Overactive prediction circuits in the brain are thought to lead to voice-like hallucinations. Noise Filters - Tuning Out.

Neural “Auto-Correct” Feature We Use to Process Ambiguous Sounds

Discovery of new Neurons in the Inner Ear can lead to new therapies for Hearing Disorders. Researchers have identified four types of neurons in the peripheral auditory system, three of which are new to science. The analysis of these cells can lead to new therapies for various kinds of hearing disorders, such as tinnitus and age-related hearing loss.

Possible new therapy for Hearing Loss Regrows the Sensory Hair Cells found in the Cochlea, a part of the inner ear. These cells convert sound vibrations into electrical signals and can be permanently lost due to age or noise damage.

Usher Syndrome is an extremely rare genetic disorder caused by a mutation in any one of at least 11 genes resulting in a combination of hearing loss and visual impairment. It is a leading cause of deafblindness and is at present incurable. Hearing through the Tongue.

Sensory Deprivation is the deliberate reduction or removal of stimuli from one or more of the senses. Simple devices such as blindfolds or hoods and earmuffs can cut off sight and hearing, while more complex devices can also cut off the sense of smell, touch, taste, thermoception (heat-sense), and 'gravity'. Sensory deprivation has been used in various alternative medicines and in psychological experiments (e.g. with an isolation tank). Short-term sessions of sensory deprivation are described as relaxing and conducive to meditation; however, extended or forced sensory deprivation can result in extreme anxiety, hallucinations, bizarre thoughts, and depression. A related phenomenon is perceptual deprivation, also called the ganzfeld effect. In this case a constant uniform stimulus is used instead of attempting to remove the stimuli; this leads to effects which have similarities to sensory deprivation. Sensory deprivation techniques were developed by some of the armed forces within NATO, as a means of interrogating prisoners within international treaty obligations. The European Court of Human Rights ruled that the use of the five techniques by British security forces in Northern Ireland amounted to a practice of inhumane and degrading treatment.

Spatial Intelligence - Special Needs - Sonar (sound navigation)

Photoacoustic Imaging is a biomedical imaging modality based on the photoacoustic effect. In photoacoustic imaging, non-ionizing laser pulses are delivered into biological tissues (when radio frequency pulses are used, the technology is referred to as thermoacoustic imaging). Some of the delivered energy will be absorbed and converted into heat, leading to transient thermoelastic expansion and thus wideband (i.e. MHz) ultrasonic emission. The generated ultrasonic waves are detected by ultrasonic transducers and then analyzed to produce images. It is known that optical absorption is closely associated with physiological properties, such as hemoglobin concentration and oxygen saturation. As a result, the magnitude of the ultrasonic emission (i.e. photoacoustic signal), which is proportional to the local energy deposition, reveals physiologically specific optical absorption contrast. 2D or 3D images of the targeted areas can then be formed. Fig. 1 is a schematic illustration showing the basic principles of photoacoustic imaging.
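
The proportionality between the photoacoustic signal and the local energy deposition can be sketched numerically. The block below is a minimal illustration, not a model of any particular imaging system: it assumes the commonly used textbook relation p0 = Γ · μa · F (Grüneisen parameter times optical absorption coefficient times laser fluence), and the function name and all numeric values are hypothetical.

```python
def initial_pressure_pa(grueneisen, absorption_per_cm, fluence_j_per_cm2):
    """Estimate the initial photoacoustic pressure rise in pascals.

    Assumes p0 = Gamma * mu_a * F. The product mu_a * F is the absorbed
    energy density in J/cm^3; multiplying by 1e6 converts it to J/m^3,
    which has the same units as pascals.
    """
    absorbed_energy_j_per_m3 = absorption_per_cm * fluence_j_per_cm2 * 1e6
    return grueneisen * absorbed_energy_j_per_m3


# Hypothetical values chosen only to show the scaling.
weak_absorber = initial_pressure_pa(0.2, 1.0, 0.01)
strong_absorber = initial_pressure_pa(0.2, 2.0, 0.01)
print(weak_absorber, strong_absorber)
```

Doubling the absorption coefficient doubles the estimated pressure, which is why regions with more absorbing material (for example, more hemoglobin) appear with stronger contrast.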

Thermoacoustic Imaging is a strategy for studying the absorption properties of human tissue using virtually any kind of electromagnetic radiation. It was originally proposed by Theodore Bowen in 1981, but Alexander Graham Bell had first reported the physical principle upon which thermoacoustic imaging is based a century earlier. He observed that audible sound could be created by shining an intermittent beam of sunlight onto a rubber sheet.

CDK2 Inhibitors as Candidate Therapeutics for Cisplatin- and Noise-Induced Hearing Loss. Inhibiting an enzyme called cyclin-dependent kinase 2 (CDK2) protects mice and rats from noise- or drug-induced hearing loss. The study suggests that CDK2 inhibitors prevent the death of inner ear cells, which has the potential to save the hearing of millions of people around the world.

Researchers find proteins that might restore damaged sound-detecting cells in the ear. Using genetic tools in mice, researchers say they have identified a pair of proteins that precisely control when sound-detecting cells, known as hair cells, are born in the mammalian inner ear. The proteins may hold a key to future therapies to restore hearing in people with irreversible deafness. In order for mammals to hear, sound vibrations travel through a hollow, snail shell-looking structure called the cochlea. Lining the inside of the cochlea are two types of sound-detecting cells, inner and outer hair cells, which convey sound information to the brain. An estimated 90% of genetic hearing loss is caused by problems with hair cells or damage to the auditory nerves that connect the hair cells to the brain. Deafness due to exposure to loud noises or certain viral infections arises from damage to hair cells. Unlike their counterparts in other mammals and birds, human hair cells cannot regenerate. So, once hair cells are damaged, hearing loss is likely permanent.

Scientists develop new gene therapy for deafness. Breakthrough may help in the treatment of children with hearing loss. Deafness is the most common sensory disability worldwide. The therapy targets a form of genetic deafness caused by a mutation in the gene SYNE4.

Hearing and Deaf Infants Process information Differently. Deaf children face unique communication challenges, but a new study shows that the effects of hearing impairment extend far beyond language skills to basic cognitive functions, and the differences in development begin surprisingly early in life. Researchers have studied how deaf infants process visual stimuli compared to hearing infants and found they took significantly longer to become familiar with new objects.

Link between hearing and cognition begins earlier than once thought. A new study finds that cognitive impairment begins in the earliest stages of age-related hearing loss -- when hearing is still considered normal.

Victorian child hearing-loss databank to go global. A unique databank that profiles children with hearing loss will help researchers globally understand why some children adapt and thrive, while others struggle. Victorian Childhood Hearing Impairment Longitudinal Databank (VicCHILD).

Earwax, or cerumen, is a brown, orange, red, yellowish or gray waxy substance secreted in the ear canal of humans and other mammals. It protects the skin of the human ear canal, assists in cleaning and lubrication, and provides protection against bacteria, fungi, and water. Earwax consists of dead skin cells, hair, and the secretions of the ceruminous and sebaceous glands of the outer ear canal. Major components of earwax are long chain fatty acids, both saturated and unsaturated, alcohols, squalene, and cholesterol. Excess or compacted cerumen is a buildup of earwax that blocks the ear canal; it can press against the eardrum or block the outer ear canal or hearing aids, potentially causing hearing loss. Bebird N3 Pro: Ear Cleaning Tweezer and Rod 2-in-1.

Hearing Aids - Audio Amplifiers

Hearing Aid is a device designed to improve hearing. Hearing aids are classified as medical devices in most countries, and regulated by the respective regulations. Small audio amplifiers such as PSAPs or other plain sound reinforcing systems cannot be sold as "hearing aids".

Sound Location (sonar).

Cochlear Implant is a surgically implanted electronic device that provides a sense of sound to a person who is profoundly deaf or severely hard of hearing in both ears; as of 2014 they had been used experimentally in some people who had acquired deafness in one ear after learning how to speak. Cochlear implants bypass the normal hearing process; they have a microphone and some electronics that reside outside the skin, generally behind the ear, which transmits a signal to an array of electrodes placed in the cochlea, which stimulate the cochlear nerve. Prosthetics (bionics).

Auditory Brainstem Implant is a surgically implanted electronic device that provides a sense of sound to a person who is profoundly deaf, due to retrocochlear hearing impairment (due to illness or injury damaging the cochlea or auditory nerve, and so precluding the use of a cochlear implant).

ProSounds H2P: Get Up To 6x Normal Hearing Enhancement with Simultaneous Hearing Protection.

Muzo - Personal Zone Creator w Noise Blocking Tech.

AMPSound Personal Bluetooth Stereo Amplifiers & Earbuds Hearing Aid.

Hearing aids may delay cognitive decline.

Experiencing Music through a Cochlear Implant.

Bone Conduction

BLU - World’s Most Versatile Hearing Glasses.

Olive: Next-Gen Hearing Aid.

Sleeping Aids - Headphones

iPS cell-derived inner ear cells may improve congenital hearing loss. A possible therapy for hereditary hearing loss in humans.

Scientists develop method to repair damaged structures deep inside the ear. Researchers developed a new approach to repair cells deep inside the ear by working out how to deliver a drug into the inner ear.

Good noise, bad noise: White noise improves hearing. White noise is not the same as other noise -- and even a quiet environment does not have the same effect as white noise. With a background of continuous white noise, hearing pure sounds becomes even more precise, as researchers have shown. Their findings could be applied to the further development of cochlear implants.

Sign Language - Visual Communication

Sign Language is a language which chiefly uses manual communication to convey meaning, as opposed to acoustically conveyed sound patterns. This can involve simultaneously combining hand shapes, orientation and movement of the hands, arms or body, and facial expressions to express a speaker's thoughts. Sign languages share many similarities with spoken languages (sometimes called "oral languages"), which depend primarily on sound, and linguists consider both to be types of natural language. Although there are some significant differences between signed and spoken languages, such as how they use space grammatically, sign languages show the same linguistic properties and use the same language faculty as do spoken languages. They should not be confused with Body Language, which is a kind of non-linguistic communication.

Symbols - Visual Language - Let Your Fingers Do the Talking.

American Sign Language is the predominant sign language of Deaf communities in the United States and most of anglophone Canada. ASL signs have a number of phonemic components, including movement of the face and torso as well as the hands. ASL is not a form of pantomime, but iconicity does play a larger role in ASL than in spoken languages. English loan words are often borrowed through fingerspelling, although ASL grammar is unrelated to that of English. ASL has verbal agreement and aspectual marking and has a productive system of forming agglutinative classifiers. Many linguists believe ASL to be a subject–verb–object (SVO) language, but there are several alternative proposals to account for ASL word order.

American Sign Language (youtube)

ASL signers amount to about 1% of the 231 million English speakers in America.

List of Sign Languages. There are perhaps three hundred sign languages in use around the world today.

SignAloud: Gloves Translate Sign Language into Text and Speech (youtube)

Christine Sun Kim: The enchanting music of Sign Language (video)

Uni, 1st Sign Language to Voice System

Start American Sign Language

Hand Gestures (people smart)

American Hand Gestures in Different Cultures (youtube)

7 Ways to Get Yourself in Trouble Abroad (youtube)

Song in Sign Language, Eminem Lose Yourself (youtube)

Hand Symbols

Cherology and chereme are synonyms of phonology and phoneme previously used in the study of sign languages.

Tactical Hand Signals (Info-Graph)

Communication

vl2 Story Book Apps

Bilingual Interfaces through Visual Narratives

Life Print Sign Language

Lip Reading is a technique of understanding speech by visually interpreting the movements of the lips, face and tongue when normal sound is not available. It relies also on information provided by the context, knowledge of the language, and any residual hearing. Lip-reading is not easy. Although ostensibly used by deaf and hard-of-hearing people, most people with normal hearing process some speech information from sight of the moving mouth.

Viseme is any of several speech sounds that look the same, for example when lip reading.
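
The idea that several speech sounds share one visible mouth shape can be sketched as a many-to-one lookup. The block below is only an illustration under loud assumptions: the table `VISEME_OF` is a simplified, hypothetical mapping covering a few consonants, and it treats written letters as if they were speech sounds, which real lip-reading research does not do.

```python
# Simplified, hypothetical letter-to-viseme table (an assumption for
# illustration; real viseme inventories differ between studies).
VISEME_OF = {
    "p": "bilabial", "b": "bilabial", "m": "bilabial",
    "f": "labiodental", "v": "labiodental",
}


def viseme_key(word):
    """Replace each letter with its viseme class when one is listed."""
    return tuple(VISEME_OF.get(letter, letter) for letter in word.lower())


def group_lookalikes(words):
    """Group words that map to the same sequence of viseme classes."""
    groups = {}
    for word in words:
        groups.setdefault(viseme_key(word), []).append(word)
    # Keep only groups where at least two words look alike.
    return [group for group in groups.values() if len(group) > 1]


print(group_lookalikes(["pat", "bat", "mat", "fan", "van", "cat"]))
```

Under this toy table, "pat", "bat" and "mat" fall into one group and "fan" and "van" into another, which is the kind of ambiguity a lip reader has to resolve from context.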

Al-Sayyid Bedouin Sign Language

Voices from El-Sayed (youtube)

American Speech-Language-Hearing Association

Speech and Language Center

Learning Specialist

Speech Recognition - Voice Activated

Computer system transcribes words users 'speak silently'. Electrodes on the face and jaw pick up otherwise undetectable neuromuscular signals triggered by internal verbalizations.

Sonitus Medical SoundBite Hearing System

Etymotic

Manually Coded English is a variety of visual communication methods expressed through the hands which attempt to represent the English language. Unlike deaf sign languages which have evolved naturally in deaf communities, the different forms of MCE were artificially created, and generally follow the grammar of English.

Morse Code - Codes

Dragon Notes - Dragon Dictation

Smartphone Technologies

Closed Captioning and subtitling are both processes of displaying text on a television, video screen, or other visual display to provide additional or interpretive information. Both are typically used as a transcription of the audio portion of a program as it occurs (either verbatim or in edited form), sometimes including descriptions of non-speech elements. Other uses have been to provide a textual alternative language translation of a presentation's primary audio language that is usually burned-in (or "open") to the video and unselectable.

Closed Caption Software - Closed Captioning Jobs

Audio Description (blind and visually impaired)

Real-Time Text is text transmitted instantly as it is typed or created. Recipients can immediately read the message while it is being written, without waiting. Real-time text is used for conversational text, in collaboration, and in live captioning. Technologies include TDD/TTY devices for the deaf, live captioning for TV, Text over IP (ToIP), some types of instant messaging, software that automatically captions conversations, captioning for telephony/video teleconferencing, telecommunications relay services including ip-relay, transcription services including Remote CART, TypeWell, collaborative text editing, streaming text applications, next-generation 9-1-1/1-1-2 emergency service. Obsolete TDD/TTY devices are being replaced by more modern real-time text technologies, including Text over IP, ip-relay, and instant messaging. During 2012, the Real-Time Text Taskforce (R3TF) designed a standard international symbol to represent real-time text, as well as the alternate name Fast Text to improve public education of the technology.

Real-Time Transcription is the general term for transcription by court reporters using real-time text technologies to deliver computer text screens within a few seconds of the words being spoken. Specialist software allows participants in court hearings or depositions to make notes in the text and highlight portions for future reference. Typically, real-time writers can produce text using machines at the rate of at least 200 words per minute. Stenographers can typically type up to 300 words per minute for short periods of time, but most cannot sustain such a speed. Real-time transcription is also used in the broadcasting environment where it is more commonly termed "captioning."

Instant Messaging technology is a type of online chat that offers real-time text transmission over the Internet. A LAN messenger operates in a similar way over a local area network. Short messages are typically transmitted between two parties, when each user chooses to complete a thought and select "send". Some IM applications can use push technology to provide real-time text, which transmits messages character by character, as they are composed. More advanced instant messaging can add file transfer, clickable hyperlinks, Voice over IP, or video chat.
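
The difference between send-based messaging and real-time text can be sketched in a few lines. This is a minimal illustration rather than any actual messaging protocol: the function names are hypothetical, and a newline character stands in for the user selecting "send".

```python
def message_based(keystrokes):
    """Deliver text only when the user presses send (a newline here)."""
    buffer, delivered = [], []
    for key in keystrokes:
        if key == "\n":
            delivered.append("".join(buffer))
            buffer.clear()
        else:
            buffer.append(key)
    return delivered


def real_time(keystrokes):
    """Deliver each character as soon as it is typed."""
    return [key for key in keystrokes if key != "\n"]


typed = "hi\nok\n"
print(message_based(typed))  # two deliveries, one per completed message
print(real_time(typed))      # one delivery per character typed
```

With the same keystrokes, the send-based version hands over two complete messages, while the real-time version hands over four single characters, so the recipient can read along while the message is still being written.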

Auditory Training - Listening

Communication - Languages

Sound - Music

Dyslexia - Learning Styles

Visual Language is a system of communication using visual elements. Speech as a means of communication cannot strictly be separated from the whole of human communicative activity which includes the visual and the term 'language' in relation to vision is an extension of its use to describe the perception, comprehension and production of visible signs.

Visual Language

Visual Learning - Body Language

Visual Perception (spatial intelligence)

Knowledge Visualization

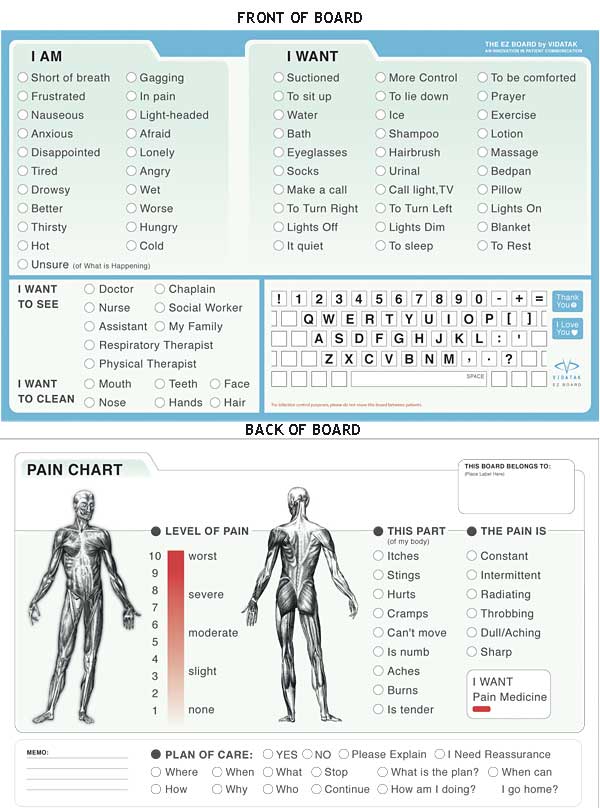

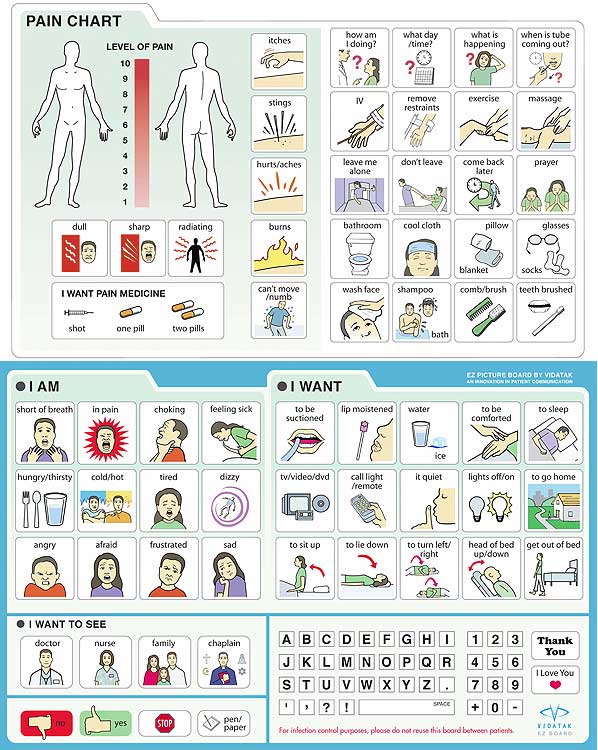

Communication Boards

Boardmaker Software

Alphabet Card (PDF) - Place Mat (PDF)

Augmentative & Alternative Communication devices (AAC)

Facilitated Communication is a discredited technique used by some caregivers and educators in an attempt to assist people with severe educational and communication disabilities. The technique involves providing an alphabet board, or keyboard. The facilitator holds or gently touches the disabled person's arm or hand during this process and attempts to help them move their hand and amplify their gestures. In addition to providing physical support needed for typing or pointing, the facilitator provides verbal prompts and moral support. In addition to human touch assistance, the facilitator's belief in their communication partner's ability to communicate seems to be a key component of the technique.

Ways to Communicate with a Non-Verbal Child

Communication Devices for Children who Can't Talk

Communication Boards

Speech and Communication

Communication Needs

World’s First Touch Enabled T-shirt - BROADCAST: a programmable LED T-shirt that can display any slogan you want using your smartphone.

Software turns Webcams into Eye-Trackers

Sound Pollution - Noise Pollution Tools