BK101

Knowledge Base

Physics

Physics is the science of matter and energy, their interactions, and their motion through space and time. It is the science of change, covering physical laws, physical properties and phenomena. Observations.

Laws - Chemistry - Scopes - Atoms - Quantum Physics - Particles - Waves

A physicist is a scientist who has specialized knowledge in the field of physics, which encompasses the interactions of matter and energy at all length and time scales in the physical universe. Physicists are generally interested in the root or ultimate causes of phenomena, and usually frame their understanding in mathematical terms. Physicists work across a wide range of research fields, spanning all length scales: from sub-atomic and particle physics, to molecular length scales of chemical and biological interest, to cosmological length scales encompassing the Universe as a whole. The field generally includes two types of physicists: experimental physicists, who specialize in the observation of physical phenomena and the analysis of experiments, and theoretical physicists, who specialize in the mathematical modeling of physical systems to rationalize, explain and predict natural phenomena. Physicists can also apply their knowledge to solving practical problems or developing new technologies (also known as applied physics or engineering physics). "Physicists do a lot of research to learn more and understand more about our world, and in the process they make many new discoveries. Then engineers build and develop new tools and products using the newly discovered knowledge that physicists provided from years of research."

Classical Physics refers to theories of physics that predate modern, more complete, or more widely applicable theories. If a currently accepted theory is considered to be "modern," and its introduction represented a major paradigm shift, then the previous theories, or new theories based on the older paradigm, will often be referred to as belonging to the realm of "classical" physics.

Physics has learned a lot about matter and energy and how they work and behave, but we are still only scratching the surface of what there is to know. We live on a speck of dust in a universe so large that we can't even see where it ends, and we can't yet see how small things are or how small things can get, so we still have a lot more to learn. So shut up and calculate, or shut up and contemplate.

Physical Law is a theoretical statement "inferred from particular facts, applicable to a defined group or class of phenomena, and expressible by the statement that a particular phenomenon always occurs if certain conditions be present." Physical laws are typically conclusions based on repeated scientific experiments and observations over many years and which have become accepted universally within the scientific community. The production of a summary description of our environment in the form of such laws is a fundamental aim of science. These terms are not used the same way by all authors. Fundamental Physics Formulas (wiki) - Forces of Nature (constants).

Phenomena: a phenomenon is anything that manifests itself. Phenomena are often, but not always, understood as "things that appear" or "experiences" for a sentient being, or in principle may be so. (The word comes from the Greek phainein: to show, shine, appear, to be manifest or manifest itself. The plural is phenomena.)

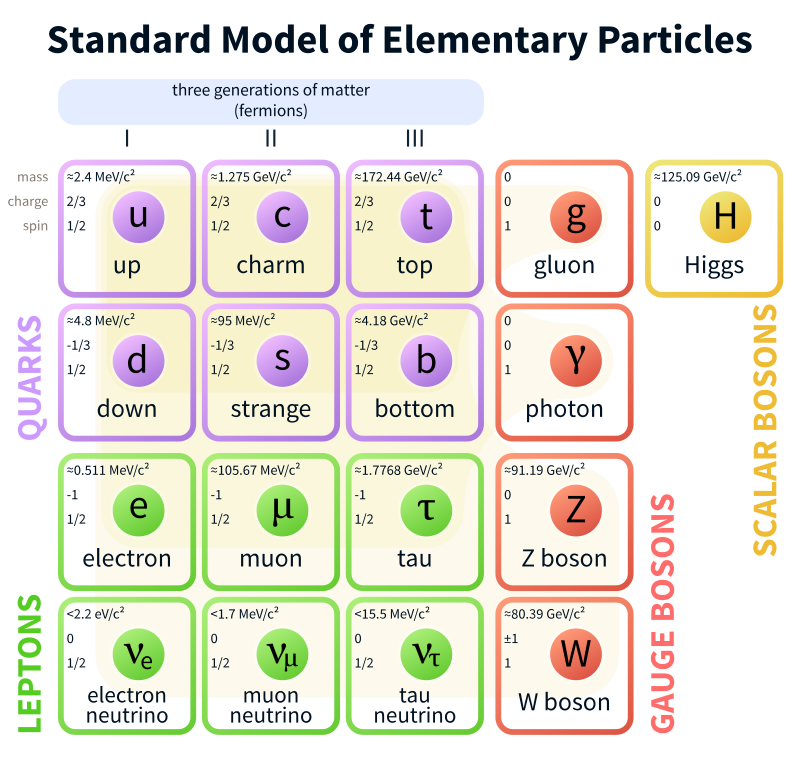

Particle Physics is the branch of physics that studies the nature of the particles that constitute matter (particles with mass) and radiation (massless particles). Waves - Particle Accelerator.

Atomic Physics is the field of physics that studies atoms as an isolated system of electrons and an atomic nucleus. It is primarily concerned with the arrangement of electrons around the nucleus and the processes by which these arrangements change. This comprises ions, neutral atoms and, unless otherwise stated, it can be assumed that the term atom includes ions.

Atomic fingerprint is a term for the unique line spectrum that is characteristic of a given element and can be used to identify it. Balmer Formula is a mathematical equation for calculating the wavelength of the light emitted by an excited hydrogen atom when an electron drops to the second energy level. When an electron “drops” to a lower energy level, it ends up with less energy than it had originally. That “lost” energy doesn’t just disappear; it is not destroyed, so it has to go somewhere. It is transformed into another type of energy: in the case of these atoms, electromagnetic energy, sometimes visible light, sometimes X-rays, and sometimes other wavelengths.
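Balmer's formula is commonly written today in the equivalent Rydberg form 1/λ = R_H × (1/2² − 1/n²), where n ≥ 3 is the level the electron drops from. The Python sketch below evaluates it; the value used for the hydrogen Rydberg constant, R_H ≈ 1.09678 × 10⁷ per metre, is an assumption you may want to replace with a more precise figure, and the results are wavelengths in vacuum.

```python
# Balmer series of hydrogen, written in the Rydberg form:
#     1 / wavelength = R_H * (1/2**2 - 1/n**2),  n = 3, 4, 5, ...
# Assumption: R_H, the Rydberg constant corrected for hydrogen's
# reduced mass, is taken as about 1.09678e7 per metre.
R_H = 1.09678e7  # m^-1


def balmer_wavelength_nm(n: int) -> float:
    """Vacuum wavelength (in nm) emitted when hydrogen's electron drops
    from energy level n to the second level (n = 2)."""
    if n < 3:
        raise ValueError("a Balmer line needs an upper level n >= 3")
    inverse_wavelength = R_H * (1 / 2**2 - 1 / n**2)  # in m^-1
    return 1e9 / inverse_wavelength  # metres -> nanometres


if __name__ == "__main__":
    for n in range(3, 7):
        print(f"n = {n} -> 2: {balmer_wavelength_nm(n):.1f} nm")
```

For n = 3 this gives roughly 656 nm, the red H-alpha line; as n grows, the lines crowd together toward a limit of about 365 nm.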

Nuclear Physics is the field of physics that studies atomic nuclei and their constituents and interactions. The most commonly known application of nuclear physics is nuclear power generation, but the research has led to applications in many fields, including nuclear medicine and magnetic resonance imaging, nuclear weapons, ion implantation in materials engineering, and radiocarbon dating in geology and archaeology.

E = mc² - Action Physics is the study of Motion.

Digital Physics is a collection of theoretical perspectives based on the premise that the universe is, at heart, describable by information. Therefore, according to this theory, the universe can be conceived of as either the output of a deterministic or probabilistic computer program, a vast, digital computation device, or mathematically isomorphic to such a device.

Digital Philosophy is a modern reinterpretation based on the premise that all information must have a digital means of representation. An informational process transforms the digital representation of the state of the system into its future state. The world can be resolved into digital bits, with each bit made of smaller bits. These bits form a fractal pattern in fact-space. The pattern behaves like a cellular automaton. The pattern is inconceivably large in size and dimensions. Although the world started simply, its computation is irreducibly complex.
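To make "cellular automaton" concrete, here is a minimal Python sketch of an elementary (one-dimensional, two-state) cellular automaton. It illustrates the term only and is not a model of physics proposed by digital philosophy. The choice of Wolfram's Rule 30, the grid width and the number of steps are assumptions for the example: starting from a single live cell, a one-line update rule quickly produces an irregular pattern, echoing the idea that a simple start can lead to a complex computation.

```python
def step(cells: list[int], rule: int = 30) -> list[int]:
    """One update of an elementary cellular automaton.

    Each new cell depends only on (left, self, right). That 3-bit
    neighbourhood, read as a number 0..7, selects a bit of `rule`.
    Cells beyond either edge are treated as 0.
    """
    padded = [0] + cells + [0]
    new_cells = []
    for i in range(1, len(padded) - 1):
        neighbourhood = (padded[i - 1] << 2) | (padded[i] << 1) | padded[i + 1]
        new_cells.append((rule >> neighbourhood) & 1)
    return new_cells


def run(width: int = 31, generations: int = 15, rule: int = 30) -> list[list[int]]:
    """Evolve a row that starts with a single live cell in the middle."""
    cells = [0] * width
    cells[width // 2] = 1  # the "simple start"
    history = [cells]
    for _ in range(generations):
        cells = step(cells, rule)
        history.append(cells)
    return history


if __name__ == "__main__":
    for row in run():
        print("".join("#" if c else "." for c in row))
```

Swapping in another rule number (0 to 255) changes the behaviour completely, which is part of what makes these systems interesting as toy models of computation.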

Theoretical Physics employs mathematical models and abstractions of physical objects and systems to rationalize, explain and predict natural phenomena. This is in contrast to experimental physics, which uses experimental tools to probe these phenomena.

Experimental Physics is the category of disciplines and sub-disciplines in the field of physics that are concerned with the observation of physical phenomena and experiments. Methods vary from discipline to discipline, from simple experiments and observations, such as the Cavendish experiment, to more complicated ones, such as the Large Hadron Collider.

Mathematical Physics refers to development of mathematical methods for application to problems in physics.

Science Research

Plasma Physics: plasma can be created by heating a gas or by subjecting it to a strong electromagnetic field, applied with a laser or microwave generator, at temperatures above 5000 °C. This decreases or increases the number of electrons in the atoms or molecules, creating positively or negatively charged particles called ions, and is accompanied by the dissociation of molecular bonds, if present. Plasma is the most abundant form of ordinary matter in the universe.

Fusion (cold fusion)

Astrophysics is the branch of astronomy that employs the principles of physics and chemistry "to ascertain the nature of the heavenly bodies, rather than their positions or motions in space."

Metaphysics (philosophy)

Biophysics is an interdisciplinary science that applies the approaches and methods of physics to study biological systems. Biophysics covers all scales of biological organization, from molecular to organismic and populations. Biophysical research shares significant overlap with biochemistry, physical chemistry, nanotechnology, bioengineering, computational biology, biomechanics and systems biology. Electromagnetic Radiation - Mitochondria.

If one wants to summarize our knowledge of physics in the briefest possible terms, there are three really fundamental observations: (i) Space-time is a pseudo-Riemannian manifold $M$, endowed with a metric tensor and governed by geometrical laws. (ii) Over $M$ is a vector bundle $X$ with a nonabelian gauge group $G$. (iii) Fermions are sections of $(\tilde{S}_+ \otimes V_R) \oplus (\tilde{S}_- \otimes V_{\tilde{R}})$. $R$ and $\tilde{R}$ are not isomorphic; their failure to be isomorphic explains why the light fermions are light and presumably has its origins in a representation difference $\Delta$ in some underlying theory. All of this must be supplemented with the understanding that the geometrical laws obeyed by the metric tensor, the gauge fields, and the fermions are to be interpreted in quantum mechanical terms.

Einstein Field Equations relate the geometry of space-time to the distribution of matter within it.
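For reference, the field equations are usually written in the form below. The left side describes geometry: G_μν is the Einstein tensor, built from the Ricci tensor R_μν, the Ricci scalar R and the metric tensor g_μν, and Λ is the cosmological constant. The right side describes matter: T_μν is the stress–energy tensor, G is Newton's gravitational constant and c is the speed of light. Sign conventions differ between textbooks, so treat this as one common convention rather than the only one.

```latex
G_{\mu\nu} + \Lambda g_{\mu\nu} = \frac{8\pi G}{c^{4}} T_{\mu\nu},
\qquad
G_{\mu\nu} = R_{\mu\nu} - \tfrac{1}{2} R\, g_{\mu\nu}
```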

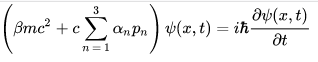

Dirac Equation is a relativistic wave equation that describes all spin-1/2 massive particles, such as electrons and quarks, for which parity is a symmetry. It is consistent with both the principles of quantum mechanics and the theory of special relativity, and was the first theory to account fully for special relativity in the context of quantum mechanics. It was validated by accounting for the fine details of the hydrogen spectrum in a completely rigorous way.
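In one common notation the equation reads as below, where ψ is a four-component spinor wavefunction, m is the particle's rest mass, ħ is the reduced Planck constant, the γ^μ are the four 4×4 Dirac (gamma) matrices, and the metric η has signature (+, −, −, −). In natural units (ħ = c = 1) it reduces to (iγ^μ∂_μ − m)ψ = 0.

```latex
\left( i\hbar\, \gamma^{\mu} \partial_{\mu} - m c \right) \psi = 0,
\qquad
\{\gamma^{\mu}, \gamma^{\nu}\} = 2\eta^{\mu\nu} I_{4}
```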

Yang–Mills Theory is a gauge theory based on a special unitary group SU(N), or more generally any compact, reductive Lie algebra. Yang–Mills theory seeks to describe the behavior of elementary particles using these non-abelian Lie groups and is at the core of the unification of the electromagnetic and weak forces (i.e. U(1) × SU(2)), as well as of quantum chromodynamics, the theory of the strong force (based on SU(3)). Thus it forms the basis of our understanding of the Standard Model of particle physics.

Forces of Nature - Laws

1: Weak Force is responsible for some forms of radioactive decay, such as beta decay, and plays an essential role in nuclear fission. The weak force is also known as the weak interaction or weak nuclear force. Electroweak Interaction is the unified description of two of the four known fundamental interactions of nature: electromagnetism and the weak interaction. Physical Law - Scientific Law.

2: Strong Force is the mechanism responsible for holding atomic nuclei together. The strong force is also called the strong interaction, nuclear strong force or strong nuclear force. At the range of a femtometer, it is the strongest force, being approximately 137 times stronger than electromagnetism, a million times stronger than the weak interaction and 10³⁸ times stronger than gravitation. The strong nuclear force ensures the stability of ordinary matter by confining quarks into hadron particles, such as the proton and neutron, and by further binding neutrons and protons into atomic nuclei. Most of the mass-energy of a common proton or neutron is in the form of the strong force field energy; the individual quarks provide only about 1% of the mass-energy of a proton.

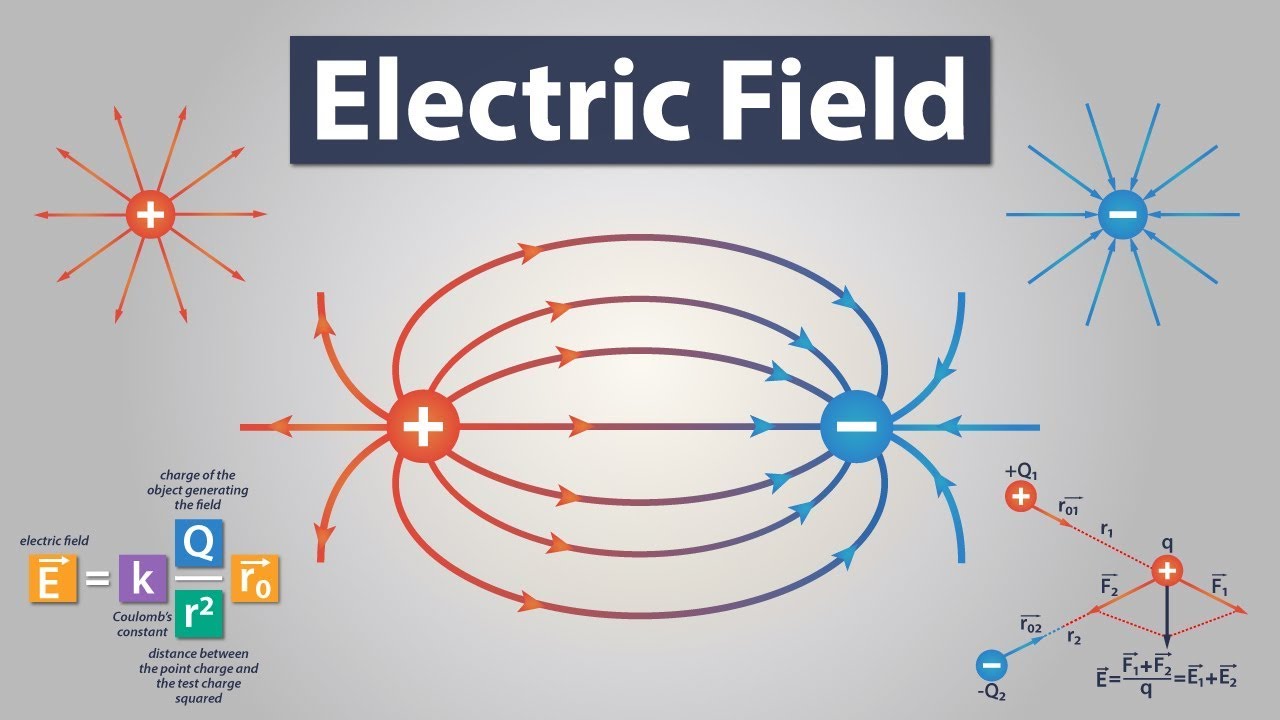

3: Electromagnetic Force is a type of physical interaction that occurs between electrically charged particles. Waves.

4: Gravity is the force of attraction between all masses in the universe; especially the attraction of the earth's mass for bodies near its surface. All things with energy are brought toward or gravitate toward one another, including stars, planets, galaxies and even light and sub-atomic particles. Action Physics.

Fifth Force? - A Description is not an Explanation - Core Theory of Physics (image of equation)

Theory of Everything is a theoretical framework of physics that links together all the physical aspects of the universe into a single coherent explanation.

Standard Model is a theory concerning the electromagnetic, weak, and strong nuclear interactions, as well as classifying all the subatomic particles known.

Penguin Diagrams are a class of Feynman diagrams that are important for understanding CP-violating processes in the Standard Model. They refer to one-loop processes in which a quark temporarily changes flavor (via a W or Z loop), and the flavor-changed quark engages in some tree interaction, typically a strong one. For interactions where some quark flavors (e.g. very heavy ones) have much higher interaction amplitudes than others, such as CP-violating or Higgs interactions, these penguin processes may have amplitudes comparable to or even greater than those of the direct tree processes. A similar diagram can be drawn for leptonic decays.

Fundamental Interactions (also known as fundamental forces) are the interactions that do not appear to be reducible to more basic interactions. There are four fundamental interactions known to exist: the gravitational and electromagnetic interactions, which produce significant long-range forces whose effects can be seen directly in everyday life, and the strong and weak interactions, which produce forces at minuscule, subatomic distances and govern nuclear interactions. Some scientists hypothesize that a fifth force might exist, but these hypotheses remain speculative.

Uniformitarianism is the assumption that the same natural laws and processes that operate in the universe now have always operated in the universe in the past and apply everywhere in the universe. Physical Constant (consistent)

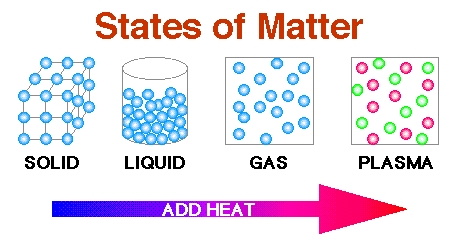

States of Matter

1: Gas may be made up of individual atoms, as in a noble gas; of elemental molecules made from one type of atom, as with oxygen; or of compound molecules made from a variety of atoms, such as carbon dioxide. A gas mixture, such as air, contains a variety of pure gases. What distinguishes a gas from liquids and solids is the vast separation of the individual gas particles. This separation usually makes a colorless gas invisible to the human observer. The interactions of gas particles in the presence of electric and gravitational fields are considered negligible, as indicated by the constant velocity vectors in the image. One commonly known gas is steam. Evaporation.

2: Solid is characterized by structural rigidity and resistance to changes of shape or volume. Unlike a liquid, a solid object does not flow to take on the shape of its container, nor does it expand to fill the entire volume available to it like a gas does. The atoms in a solid are tightly bound to each other, either in a regular geometric lattice (crystalline solids, which include metals and ordinary ice) or irregularly (an amorphous solid such as common window glass). Rocks - Minerals - Particles.

3: Liquid is a nearly incompressible fluid that conforms to the shape of its container but retains a nearly constant volume independent of pressure. As such, it is one of the four fundamental states of matter (the others being solid, gas, and plasma), and is the only state with a definite volume but no fixed shape. A liquid is made up of tiny vibrating particles of matter, such as atoms, held together by intermolecular bonds. Water is, by far, the most common liquid on Earth. Like a gas, a liquid is able to flow and take the shape of a container. Most liquids resist compression, although others can be compressed. Unlike a gas, a liquid does not disperse to fill every space of a container, and maintains a fairly constant density. A distinctive property of the liquid state is surface tension, leading to wetting phenomena.

4: Plasma is a gas that has been heated until its atoms lose some or all of their electrons, leaving a highly electrified collection of ions (or bare nuclei) and free electrons. A plasma has properties unlike those of the other three states. A plasma can be created by heating a gas or subjecting it to a strong electromagnetic field, applied with a laser or microwave generator. This decreases or increases the number of electrons, creating positively or negatively charged particles called ions, and is accompanied by the dissociation of molecular bonds, if present. Plasma is the most common form of ordinary matter, as most of the visible universe is composed of stars.

Plasma Oscillations are rapid oscillations of the electron density in conducting media such as plasmas or metals. The oscillations can be described as an instability in the dielectric function of a free electron gas. The frequency depends only weakly on the wavelength of the oscillation. The quasiparticle resulting from the quantization of these oscillations is the plasmon.

Nonthermal Plasma is a plasma that is not in thermodynamic equilibrium, because the electron temperature is much higher than the temperature of the heavy species (ions and neutrals). As only the electrons are thermalized, their Maxwell-Boltzmann velocity distribution is very different from the ion velocity distribution. When the velocities of one species do not follow a Maxwell-Boltzmann distribution, the plasma is said to be non-Maxwellian. A common kind of nonthermal plasma is the mercury-vapor gas within a fluorescent lamp, where the "electron gas" reaches a temperature of 20,000 K (about 19,700 °C; 35,500 °F) while the rest of the gas, ions and neutral atoms, stays barely above room temperature, so the bulb can even be touched with hands while operating. Where does laser energy go after being fired into plasma? - Plasma Physics - Plasma Universe.
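The plasma-oscillation frequency can be estimated with the standard cold-plasma formula ω_p = √(n_e e² / (ε₀ m_e)), where n_e is the electron number density; in this simple limit the weak dependence on wavelength is neglected entirely. The Python sketch below evaluates it with CODATA 2018 constants and a hypothetical electron density of 10¹⁸ m⁻³ picked only for illustration, and also checks the fluorescent-lamp temperature conversion, whose figures in the text are rounded.

```python
import math

# CODATA 2018 values, SI units
ELEMENTARY_CHARGE = 1.602176634e-19     # C
ELECTRON_MASS = 9.1093837015e-31        # kg
VACUUM_PERMITTIVITY = 8.8541878128e-12  # F/m


def plasma_frequency_hz(electron_density_m3: float) -> float:
    """Cold-plasma electron oscillation frequency f_p = omega_p / (2 pi).

    omega_p = sqrt(n_e e^2 / (eps0 m_e)); thermal corrections that make
    the frequency depend weakly on wavelength are ignored here.
    """
    omega_p = math.sqrt(
        electron_density_m3 * ELEMENTARY_CHARGE**2
        / (VACUUM_PERMITTIVITY * ELECTRON_MASS)
    )
    return omega_p / (2 * math.pi)


def kelvin_to_celsius(t_k: float) -> float:
    return t_k - 273.15


def celsius_to_fahrenheit(t_c: float) -> float:
    return t_c * 9 / 5 + 32


if __name__ == "__main__":
    # Hypothetical electron density, chosen only for illustration
    print(f"f_p at 1e18 m^-3: {plasma_frequency_hz(1e18) / 1e9:.2f} GHz")
    t_c = kelvin_to_celsius(20_000)
    print(f"20,000 K = {t_c:,.0f} °C = {celsius_to_fahrenheit(t_c):,.0f} °F")
```

At 10¹⁸ electrons per cubic metre the estimate is roughly 9 GHz; because ω_p grows as √n_e, quadrupling the density doubles the frequency.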

State of Matter is one of the distinct forms in which matter can exist. Four states of matter are observable in everyday life: solid, liquid, gas, and plasma. Many other states are known to exist, such as glass or liquid crystal, and some only exist under extreme conditions, such as Bose–Einstein condensates, neutron-degenerate matter, and quark-gluon plasma, which only occur, respectively, in situations of extreme cold, extreme density, and extremely high energy. Some other states are believed to be possible but remain theoretical for now. For a complete list of all exotic states of matter, see List of States of Matter (wiki).

Surface Science is the study of physical and chemical phenomena that occur at the interface of two phases, including solid–liquid, solid–gas, solid–vacuum and liquid–gas interfaces. It includes the fields of surface chemistry and surface physics; surface physics, the study of physical interactions that occur at interfaces, overlaps with surface chemistry. Some of the things investigated by surface physics include friction, surface states, surface diffusion, surface reconstruction, surface phonons and plasmons, epitaxy and surface enhanced Raman scattering, the emission and tunneling of electrons, spintronics, and the self-assembly of nanostructures on surfaces. In a confined liquid, defined by geometric constraints on a nanoscopic scale, most molecules sense some surface effects, which can result in physical properties grossly deviating from those of the bulk liquid. Surface Chemistry - Cell Surface - Surface Engineering.

Absorption in chemistry is a physical or chemical phenomenon or a process in which atoms, molecules or ions enter some bulk phase – liquid or solid material. This is a different process from adsorption, since molecules undergoing absorption are taken up by the volume, not by the surface (as in the case for adsorption). A more general term is sorption, which covers absorption, adsorption, and ion exchange. Absorption is a condition in which something takes in another substance.

Physicists have identified a new state of matter whose structural order operates by rules more aligned with quantum mechanics than standard thermodynamic theory. In a classical material called artificial spin ice, which in certain phases appears disordered, the material is actually ordered, but in a "topological" form.

Spin Ice is a magnetic substance that does not have a single minimal-energy state. It has magnetic moments (i.e. "spin") as elementary degrees of freedom which are subject to frustrated interactions. By their nature, these interactions prevent the moments from exhibiting a periodic pattern in their orientation down to a temperature much below the energy scale set by the said interactions. Spin ices show low-temperature properties, residual entropy in particular, closely related to those of common crystalline water ice. (Shakti spin ice).

New State of Physical Matter in which atoms can exist as both Solid and Liquid simultaneously. Applying high pressures and temperatures to potassium -- a simple metal -- creates a state in which most of the element's atoms form a solid lattice structure, the findings show. However, the structure also contains a second set of potassium atoms that are in a fluid arrangement. Under the right conditions, over half a dozen elements -- including sodium and bismuth -- are thought to be capable of existing in the newly discovered state, researchers say.

Matter

Matter is something which has mass and occupies space. Matter includes atoms and molecules and anything made up of these, but not other energy phenomena or waves such as light or sound. Elements.

Substance is the real physical matter of which a person or thing consists. The choicest or most essential or most vital part of some idea or experience. A particular kind or species of matter with uniform properties. The property of holding together and retaining its shape. Mineral.

Material is the tangible substance that goes into the makeup of a physical object. Something derived from or composed of matter and having physical form or substance. Something physical as distinct from intellectual or psychological well-being. Something that is real rather than spiritual or abstract, though information, data or ideas and observations can be used or reworked into a finished form. Material Science - Immaterial.

Physical is something having substance or material existence and perceptible to the senses. Something characterized by energetic bodily activity, matter and energy.

State of Matter is one of the distinct forms that matter takes on. Four states of matter are observable in everyday life: solid, liquid, gas, and plasma.

Pyramid of Complexity - Dark Matter

Phase in matter is a region of space (a thermodynamic system), throughout which all physical properties of a material are essentially uniform. Examples of physical properties include density, index of refraction, magnetization and chemical composition. A simple description is that a phase is a region of material that is chemically uniform, physically distinct, and (often) mechanically separable. In a system consisting of ice and water in a glass jar, the ice cubes are one phase, the water is a second phase, and the humid air over the water is a third phase. The glass of the jar is another separate phase.

Existence of New Form of Electronic Matter Quadrupole Topological Insulators

Baryonic Matter: nearly all matter that may be encountered or experienced in everyday life is baryonic matter, which includes atoms of any sort and provides them with the property of mass. Non-baryonic matter, as implied by the name, is any sort of matter that is not composed primarily of baryons. This might include neutrinos and free electrons, dark matter such as supersymmetric particles, axions, and black holes. A Baryon is a composite subatomic Particle made up of three Quarks.

Baryon Asymmetry problem in physics refers to the imbalance in baryonic matter (the type of matter experienced in everyday life) and antibaryonic matter in the observable universe. Neither the standard model of particle physics nor the theory of general relativity provides an obvious explanation for why this should be so, and it is a natural assumption that the universe is neutral with respect to all conserved charges. The Big Bang should have produced equal amounts of matter and antimatter. Since this does not seem to have been the case, it is likely that some physical laws acted differently, or did not exist, for matter and antimatter. Several competing hypotheses exist to explain the imbalance of matter and antimatter that resulted in baryogenesis. However, there is as yet no consensus theory to explain the phenomenon. As remarked in a 2012 research paper, "The origin of matter remains one of the great mysteries in physics."

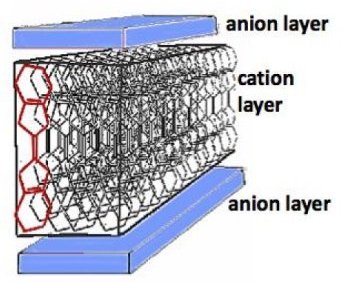

Condensed Matter Physics is a branch of physics that deals with the physical properties of condensed phases of matter, where particles adhere to each other. Condensed matter physicists seek to understand the behavior of these phases by using physical laws. In particular, they include the laws of quantum mechanics, electromagnetism and statistical mechanics.

Programmable Matter is matter which has the ability to change its physical properties (shape, density, moduli, conductivity, optical properties, etc.) in a programmable fashion, based upon user input or autonomous sensing. Programmable matter is thus linked to the concept of a material which inherently has the ability to perform information processing.

Does Matter Die? Stars create new elements in their cores by squeezing elements together in a process called nuclear fusion. But if mass can neither be created nor destroyed, then how does the Sun create different Atoms?

Dark Matter - Time Crystals

Antimatter is a material composed of anti-particles, which have the same mass as particles of ordinary matter, but opposite charges, lepton numbers, and baryon numbers.

In 1928, a physicist named Paul Dirac found something strange in his equations. Based purely on mathematical insight, he predicted that there ought to be a second kind of matter, the opposite of normal matter, which annihilates when it comes into contact with normal matter. Antimatter.

Annihilation is the process that occurs when a subatomic particle collides with its respective Antiparticle to produce other particles, such as an electron colliding with a positron to produce two photons. The total energy and momentum of the initial pair are conserved in the process and distributed among a set of other particles in the final state. Antiparticles have exactly opposite additive quantum numbers from particles, so the sums of all quantum numbers of such an original pair are zero. Hence, any set of particles may be produced whose total quantum numbers are also zero, as long as conservation of energy and conservation of momentum are obeyed. During a low-energy annihilation, photon production is favored, since these particles have no mass. However, high-energy particle colliders produce annihilations where a wide variety of exotic heavy particles are created. The word annihilation is also used informally for the interaction of two particles that are not mutual antiparticles (not charge conjugates). Some quantum numbers may then not sum to zero in the initial state, but they are conserved, with the same totals in the final state. An example is the "annihilation" of a high-energy electron antineutrino with an electron to produce a W⁻. If the annihilating particles are composite, such as mesons or baryons, then several different particles are typically produced in the final state.
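As a worked example of the energy bookkeeping, consider low-energy electron–positron annihilation. Assuming the pair is essentially at rest (so its total momentum is zero) and annihilates into exactly two photons, conservation of momentum makes the photons fly apart in opposite directions with equal energies, and conservation of energy makes each one carry the rest energy of one electron, m_e c². The Python sketch below evaluates this with CODATA 2018 constants.

```python
# CODATA 2018 values, SI units
ELECTRON_MASS = 9.1093837015e-31  # kg (a positron has the same mass)
SPEED_OF_LIGHT = 299_792_458.0    # m/s
JOULES_PER_EV = 1.602176634e-19


def annihilation_photon_energy_kev() -> float:
    """Energy of each photon when an e-/e+ pair at rest annihilates
    into two photons.

    Zero total momentum forces the photons to be back to back with
    equal energies, so each gets half of the pair's rest energy.
    """
    pair_rest_energy_j = 2 * ELECTRON_MASS * SPEED_OF_LIGHT**2
    per_photon_j = pair_rest_energy_j / 2
    return per_photon_j / JOULES_PER_EV / 1e3  # joules -> keV


if __name__ == "__main__":
    print(f"Each photon carries about {annihilation_photon_energy_kev():.1f} keV")
```

The result is about 511 keV per photon, the energy of the gamma rays detected from positron annihilation in PET scanners.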

Hollow Atoms are short-lived, multiply excited neutral atoms that carry a large part of their Z electrons (Z being the projectile nuclear charge) in high-n levels while inner shells remain (transiently) empty. This population inversion arises for typically 100 femtoseconds during the interaction of a slow highly charged ion (HCI) with a solid surface. Despite this limited lifetime, the formation and decay of a hollow atom can be conveniently studied from ejected electrons and soft X-rays, and from the trajectories, energy loss and final charge-state distribution of surface-scattered projectiles. For impact on insulator surfaces, the potential energy contained in the hollow atom may also cause the release of target atoms and ions via potential sputtering and the formation of nanostructures on a surface.

Possible explanation for the dominance of matter over antimatter in the Universe. Neutrinos and antineutrinos, sometimes called ghost particles because they are difficult to detect, can transform from one type to another. The international T2K Collaboration announced a first indication that the dominance of matter over antimatter may originate from the fact that neutrinos and antineutrinos behave differently during these oscillations. Neutrinos are elementary particles that travel through matter almost without interaction. They come in three types, electron, muon and tau neutrinos, each with its respective antiparticle (antineutrino).

Exotic Matter has several proposed types: Hypothetical particles and states of matter that have "exotic" physical properties that would violate known laws of physics, such as a particle having a negative mass. Hypothetical particles and states of matter that have not yet been encountered, but whose properties would be within the realm of mainstream physics if found to exist. Several particles whose existence has been experimentally confirmed that are conjectured to be exotic hadrons and within the Standard Model. States of matter that are not commonly encountered, such as Bose–Einstein condensates, fermionic condensates, quantum spin liquid, string-net liquid, supercritical fluid, color-glass condensate, quark–gluon plasma, Rydberg matter, Rydberg polaron and photonic matter but whose properties are entirely within the realm of mainstream physics. Forms of matter that are poorly understood, such as dark matter and mirror matter. Ordinary matter placed under high pressure, which may result in dramatic changes in its physical or chemical properties. Degenerate matter. Exotic atoms.

Mass

Mass is both a property of a physical body and a measure of its resistance to acceleration (a change in its state of motion) when a net force is applied. An object's mass also determines the strength of its gravitational attraction to other bodies. The basic SI unit of mass is the kilogram (kg). In physics, mass is not the same as weight, even though mass is often determined by measuring an object's weight with a spring scale rather than by comparing it directly with known masses on a balance scale. An object on the Moon would weigh less than it does on Earth because of the lower gravity, but it would still have the same mass. This is because weight is a force, while mass is the property that (along with gravity) determines the strength of this force. Mass is the property of a body that causes it to have weight in a gravitational field. As a verb, to mass means to join together into a mass, or to collect or form a mass.
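The distinction between mass and weight can be made concrete with W = m × g. The Python sketch below assumes standard gravity (9.80665 m/s²) for Earth, an approximate 1.62 m/s² for the Moon, and a hypothetical 70 kg object. It also shows why a spring scale, which responds to weight, is only a proxy for mass: one calibrated on Earth would under-read on the Moon, while the object's mass is unchanged.

```python
# Assumed surface gravities, m/s^2: standard gravity for Earth and a
# commonly quoted approximate value for the Moon.
G_EARTH = 9.80665
G_MOON = 1.62


def weight_newtons(mass_kg: float, g: float) -> float:
    """Weight is a force: W = m * g."""
    return mass_kg * g


def spring_scale_reading_kg(mass_kg: float, local_g: float) -> float:
    """What a spring scale calibrated on Earth would display.

    The spring responds to the force (weight), and the scale converts
    that force to 'kilograms' by dividing by Earth's gravity.
    """
    return weight_newtons(mass_kg, local_g) / G_EARTH


if __name__ == "__main__":
    mass = 70.0  # kg, hypothetical example object
    for place, g in (("Earth", G_EARTH), ("Moon", G_MOON)):
        print(
            f"{place}: mass {mass} kg, weight {weight_newtons(mass, g):.1f} N, "
            f"spring scale shows {spring_scale_reading_kg(mass, g):.1f} kg"
        )
```

On the Moon both the weight and the Earth-calibrated spring-scale reading drop to roughly one-sixth of their Earth values; a balance scale comparing the object with known masses would still read 70 kg, because gravity acts equally on both sides.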

Physical Object is a collection of matter within a defined contiguous boundary in three-dimensional space. The boundary must be defined and identified by the properties of the material. The boundary may change over time. The boundary is usually the visible or tangible surface of the object. The matter in the object is constrained (to a greater or lesser degree) to move as one object. The boundary may move in space relative to other objects that it is not attached to (through translation and rotation). An object's boundary may also deform and change over time in other ways.

Physical Property is any property that is measurable, whose value describes a state of a physical system. The changes in the physical properties of a system can be used to describe its transformations or evolutions between its momentary states. Physical properties are often referred to as observables. They are not modal properties. A quantifiable physical property is called a physical quantity. Environment.

Mass in Special Relativity. The word mass has two meanings in special relativity: rest mass (or invariant mass) is an invariant quantity which is the same for all observers in all reference frames, while relativistic mass depends on the velocity of the body relative to the observer. According to the concept of mass–energy equivalence, the rest mass and relativistic mass are equivalent to the rest energy and total energy of the body, respectively. The term relativistic mass tends not to be used in particle and nuclear physics and is often avoided by writers on special relativity, in favor of using the body's total energy. In contrast, rest mass is usually preferred over rest energy. The measurable inertia and gravitational attraction of a body in a given frame of reference is determined by its relativistic mass, not merely its rest mass. For example, light has zero rest mass but contributes to the inertia (and weight in a gravitational field) of any system containing it.
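
The two meanings of mass can be made concrete with a short calculation. This Python sketch assumes the standard Lorentz factor γ = 1/√(1 − v²/c²), which the text does not spell out, and uses hypothetical helper names (`lorentz_factor`, `relativistic_mass`, `total_energy`). It shows the rest mass staying fixed while the relativistic mass γm₀ and the total energy γm₀c² grow with speed.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s (exact by SI definition)


def lorentz_factor(v: float) -> float:
    """Return gamma = 1 / sqrt(1 - v^2/c^2) for a speed v in m/s (|v| < c)."""
    if abs(v) >= C:
        raise ValueError("speed must be below the speed of light")
    return 1.0 / math.sqrt(1.0 - (v / C) ** 2)


def relativistic_mass(rest_mass: float, v: float) -> float:
    """Relativistic mass gamma * m0 (kg) for a body moving at v relative to the observer."""
    return lorentz_factor(v) * rest_mass


def total_energy(rest_mass: float, v: float) -> float:
    """Total energy E = gamma * m0 * c^2 (J), i.e. relativistic mass times c^2."""
    return relativistic_mass(rest_mass, v) * C**2


if __name__ == "__main__":
    m0 = 1.0  # rest mass in kg (illustrative value); it is the same in every frame
    for fraction in (0.0, 0.5, 0.9, 0.99):
        v = fraction * C
        print(f"v = {fraction:.2f}c  gamma = {lorentz_factor(v):.3f}  "
              f"m_rel = {relativistic_mass(m0, v):.3f} kg  E = {total_energy(m0, v):.3e} J")
```

At rest the total energy reduces to the rest energy m₀c²; as v approaches c, γ and therefore the relativistic mass grow without bound.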

E = mc²

Energy equals mass times the speed of light squared. The equation says that mass and energy are interchangeable; they are different forms of the same thing. Under the right conditions, energy can become mass, and vice versa. Theory of Relativity.

Mass Energy Equivalence states that anything having mass has an equivalent amount of energy and vice versa, with these fundamental quantities directly relating to one another by Albert Einstein's famous formula: E = mc² (Simple Version). This formula states that the equivalent energy (E) can be calculated as the mass (m) multiplied by the speed of light (c = about 3×10⁸ m/s) squared. Similarly, anything having energy exhibits a corresponding mass m given by its energy E divided by the speed of light squared c². Because the speed of light is a very large number in everyday units, the formula implies that even an everyday object at rest with a modest amount of mass has a very large amount of energy intrinsically. Chemical, nuclear, and other energy transformations may cause a system to lose some of its energy content (and thus some corresponding mass), releasing it as light (radiant) or thermal energy, for example.
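
A minimal sketch of the formula in both directions. It assumes the exact SI value of c (the text rounds it to 3×10⁸ m/s) and the conventional TNT equivalence of 4.184×10¹⁵ J per megaton, which the text does not state; the function names are illustrative.

```python
C = 299_792_458.0             # speed of light, m/s (exact)
J_PER_MEGATON_TNT = 4.184e15  # conventional TNT equivalence: 1 megaton = 4.184e15 J


def mass_to_energy(mass_kg: float) -> float:
    """Rest energy E = m c^2, in joules."""
    return mass_kg * C**2


def energy_to_mass(energy_j: float) -> float:
    """Mass equivalent m = E / c^2, in kilograms."""
    return energy_j / C**2


if __name__ == "__main__":
    e = mass_to_energy(1.0)
    print(f"1 kg  -> {e:.3e} J (~{e / J_PER_MEGATON_TNT:.1f} megatons of TNT)")
    print(f"1 g   -> {mass_to_energy(1e-3):.3e} J")
    # The other direction: the mass equivalent of 1 kilowatt-hour (3.6e6 J).
    print(f"1 kWh -> {energy_to_mass(3.6e6):.3e} kg")
```

For 1 kg this gives about 9×10¹⁶ J, roughly 21 megatons of TNT, in line with the figure given later in this section; a kilowatt-hour, by contrast, corresponds to only about 4×10⁻¹¹ kg.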

Mass and Energy are manifestations of the same thing? The mass of a body is a measure of its energy content. Mass becomes simply a physical manifestation of that energy, rather than the other way around. As we work our way inward (matter into atoms, atoms into sub-atomic particles, sub-atomic particles into quantum fields and forces), we lose sight of matter completely. Matter loses its tangibility. It loses its primacy as mass becomes a secondary quality, the result of interactions between intangible quantum fields. What we recognize as mass is a behavior of these quantum fields; it is not a property that belongs or is necessarily intrinsic to them. For ordinary matter, whose mass overwhelmingly arises from the protons and neutrons it contains, the answer is now clear and decisive: the inertia of that body, with 95 percent accuracy, is its energy content.

Mass is only one form of energy among many, such as electrical, thermal, or chemical energy, and therefore energy can be transformed from any of these forms into mass, and vice versa. Converting mass into energy is the most energy-efficient process in the Universe: 100% is the greatest energy gain you could ever hope for out of a reaction. Mass can be converted into energy and back again, and this underlies everything from nuclear power to particle accelerators to atoms to the Solar System. Mass is not conserved. If you take a block of iron and chop it up into a bunch of iron atoms, you fully expect that the whole equals the sum of its parts. That assumption would be true, but only if mass were conserved. In the real world, though, according to Einstein, mass is not conserved at all. If you were to take an iron atom, containing 26 protons, 30 neutrons, and 26 electrons, and place it on a scale, you'd find some disturbing facts. An iron atom with all of its electrons weighs slightly less than an iron nucleus and its electrons do separately. An iron nucleus weighs significantly less than 26 protons and 30 neutrons do separately. And if you try to fuse an iron nucleus into a heavier one, it will require you to input more energy than you get out. Mass is just another form of energy. When you create something that's more energetically stable than the raw ingredients that it's made from, the process of creation must release enough energy to conserve the total amount of energy in the system. When you bind an electron to an atom or molecule, or allow those electrons to transition to the lowest-energy state, those binding transitions must give off energy, and that energy must come from somewhere: the mass of the combined ingredients. This is even more severe for nuclear transitions than it is for atomic ones, with the former class typically being around a million times more energetic than the latter (millions of electronvolts rather than a few electronvolts).

Energy is conserved, but only if you account for changing masses. When you have any attractive force that binds two objects together (whether that's the electric force holding an electron in orbit around a nucleus, the nuclear force holding protons and neutrons together, or the gravitational force holding a planet to a star), the whole is less massive than the individual parts. And the more tightly you bind these objects together, the more energy the binding process emits, and the lower the rest mass of the end product. For every 1 kilogram of mass that you convert, you get a whopping 9 × 10¹⁶ joules of energy out: the equivalent of about 21 megatons of TNT. Whenever a radioactive decay, a fission or fusion reaction, or an annihilation event between matter and antimatter occurs, the mass of the reactants is larger than the mass of the products; the difference is how much energy is released. In all cases, the energy that comes out, in all its combined forms, is exactly equal to the energy equivalent of the mass lost between reactants and products. The ultimate example is matter-antimatter annihilation, where a particle and its antiparticle meet and produce photons carrying the entire rest energy of the two particles. Take an electron and a positron at rest and let them annihilate, and you'll usually get two photons of 511 keV each. It's no coincidence that the rest masses of the electron and the positron are each 511 keV/c²: the same value, just accounting for the conversion of mass into energy by a factor of c². Einstein's most famous equation teaches us that any particle-antiparticle annihilation has the potential to be the ultimate energy source: a method to convert the entirety of the mass of your fuel into pure, useful energy.
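
The 511 keV figure can be checked from the electron's mass. This sketch assumes the CODATA 2018 electron mass and the exact SI elementary charge (used to convert joules to electronvolts); neither value appears in the text, and the helper name is illustrative.

```python
C = 299_792_458.0                    # speed of light, m/s (exact)
ELECTRON_MASS_KG = 9.1093837015e-31  # CODATA 2018 electron (and positron) mass
ELEMENTARY_CHARGE = 1.602176634e-19  # joules per electronvolt (exact since the 2019 SI)


def rest_energy_kev(mass_kg: float) -> float:
    """Rest energy m c^2 expressed in kilo-electronvolts."""
    return mass_kg * C**2 / ELEMENTARY_CHARGE / 1e3


if __name__ == "__main__":
    e_kev = rest_energy_kev(ELECTRON_MASS_KG)
    # Electron + positron at rest: total rest energy 2 * m_e c^2, shared equally by
    # two back-to-back photons because the total momentum is zero.
    print(f"electron rest energy: {e_kev:.2f} keV")
    print(f"each annihilation photon: {e_kev:.2f} keV; pair total: {2 * e_kev:.2f} keV")
```

Because the pair starts at rest, the two photons must fly apart in opposite directions with equal momenta, which is why each one carries exactly one particle's rest energy.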

Explosion is a rapid increase in volume and release of energy in an extreme manner, usually with the generation of high temperatures and the release of gases. Supersonic explosions created by high explosives are known as detonations and travel via supersonic shock waves. Subsonic explosions are created by low explosives through a slower burning process known as deflagration.

Combustion - Rockets - Chemical Reactions

Coulomb Explosions are a mechanism for transforming energy in intense electromagnetic fields into atomic motion, and are thus useful for the controlled destruction of relatively robust molecules. The explosions are a prominent technique in laser-based machining, and appear naturally in certain high-energy reactions.

Implosion as a mechanical process is when objects are destroyed by collapsing (or being squeezed in) on themselves. The opposite of explosion, implosion concentrates matter and energy. True implosion usually involves a difference between internal (lower) and external (higher) pressure, or inward and outward forces, that is so large that the structure collapses inward into itself, or into the space it occupied if it is not a completely solid object. Examples of implosion include a submarine being crushed from the outside by the hydrostatic pressure of the surrounding water, and the collapse of a massive star under its own gravitational pressure. An implosion can fling material outward (for example due to the force of inward falling material rebounding, or peripheral material being ejected as the inner parts collapse), but this is not an essential component of an implosion and not all kinds of implosion will do so. If the object was previously solid, then implosion usually requires it to take on a more dense form - in effect to be more concentrated, compressed, denser, or converted into a new material that is denser than the original. Cavitation - Contraction - Impeller.

Exothermic Process describes a process or reaction that releases energy from the system to its surroundings, usually in the form of heat, but also in the form of light (e.g. a spark, flame, or flash), electricity (e.g. a battery), or sound (e.g. the explosion heard when burning hydrogen). Its etymology stems from the Greek prefix έξω (exō, which means "outwards") and the Greek word θερμικός (thermikόs, which means "thermal"). The term exothermic was coined by Marcellin Berthelot. The opposite of an exothermic process is an endothermic process, one that absorbs energy in the form of heat. Combustion.

Mass versus Weight: the mass of an object is often referred to as its weight, though these are in fact different concepts and quantities. In scientific contexts, mass refers loosely to the amount of "matter" in an object (though "matter" may be difficult to define), whereas weight refers to the force exerted on an object by gravity. For example, an object with a mass of 1.0 kilogram will weigh approximately 9.81 newtons on the surface of the Earth (its mass multiplied by the gravitational field strength). (The newton is a unit of force, while the kilogram is a unit of mass.)
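
A small sketch of weight as a force, W = m·g, and of why a spring scale can mislead. The lunar surface gravity of about 1.62 m/s² is an assumed value not given in the text, and `spring_scale_reading_kg` is a hypothetical helper modelling a scale that measures force and divides by the g it was calibrated for.

```python
G_EARTH = 9.81  # m/s^2, the approximate surface value used in the text
G_MOON = 1.62   # m/s^2, approximate lunar surface gravity (assumed value)


def weight_newtons(mass_kg: float, g: float) -> float:
    """Weight is a force: W = m * g, in newtons."""
    return mass_kg * g


def spring_scale_reading_kg(mass_kg: float, g_local: float, g_calibration: float = G_EARTH) -> float:
    """A spring scale measures force, then divides by the g it was calibrated for.
    Used where gravity differs, it misreports the (unchanged) mass."""
    return weight_newtons(mass_kg, g_local) / g_calibration


if __name__ == "__main__":
    m = 1.0  # kg; the mass is the same everywhere
    for place, g in (("Earth", G_EARTH), ("Moon", G_MOON)):
        print(f"{place}: weight = {weight_newtons(m, g):.2f} N, "
              f"Earth-calibrated spring scale shows {spring_scale_reading_kg(m, g):.3f} kg")
```

A balance scale, which compares the object against known masses under the same gravity, would read 1 kg in both places; only the force-measuring spring scale is fooled.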

Energy Types - Light

Invariant Mass is a characteristic of the total energy and momentum of an object or a system of objects that is the same in all frames of reference related by Lorentz transformations. If a center of momentum frame exists for the system, then the invariant mass of the system is simply the total energy in that frame divided by the speed of light squared. In other reference frames, the energy of the system is larger, but the system also has a nonzero momentum; because the invariant mass satisfies m²c⁴ = E² − (pc)², the momentum term cancels the extra energy and the invariant mass remains unchanged.
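
A sketch of why the invariant mass does not change between frames. It assumes units with c = 1 (energies in keV, momenta in keV/c) and a Lorentz boost along a single axis; the helper names are hypothetical. The example system is two 511 keV photons moving in opposite directions: each photon is massless, yet the pair has an invariant mass of 1022 keV/c², echoing the earlier point that light contributes to the mass of a system containing it.

```python
import math

# Units: c = 1, energies in keV and momenta in keV/c (a convention assumed for this sketch).


def boost_x(energy: float, px: float, beta: float) -> tuple[float, float]:
    """Lorentz boost along x at velocity beta (in units of c); returns (E', px')."""
    gamma = 1.0 / math.sqrt(1.0 - beta**2)
    return gamma * (energy - beta * px), gamma * (px - beta * energy)


def system_energy_momentum(particles: list[tuple[float, float]], beta: float) -> tuple[float, float]:
    """Total (E, px) of a one-dimensional system of particles, seen from a frame moving at beta."""
    boosted = [boost_x(e, p, beta) for e, p in particles]
    return sum(e for e, _ in boosted), sum(p for _, p in boosted)


def invariant_mass(energy: float, px: float) -> float:
    """m = sqrt(E^2 - px^2) in keV/c^2; tiny negative round-off is clamped to zero."""
    return math.sqrt(max(energy**2 - px**2, 0.0))


if __name__ == "__main__":
    photons = [(511.0, 511.0), (511.0, -511.0)]  # (E, px) of two back-to-back photons
    for beta in (0.0, 0.6, 0.9):
        e_tot, p_tot = system_energy_momentum(photons, beta)
        print(f"beta = {beta}: E = {e_tot:.1f} keV, p = {p_tot:.1f} keV/c, "
              f"invariant mass = {invariant_mass(e_tot, p_tot):.1f} keV/c^2")
```

In the moving frames the total energy rises above 1022 keV, but the nonzero total momentum subtracts exactly enough that the invariant mass stays at 1022 keV/c².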

Negative Mass is a hypothetical concept of matter whose mass is of opposite sign to the mass of normal matter, e.g. −2 kg. Such matter would violate one or more energy conditions and show some strange properties, stemming from the ambiguity as to whether attraction should refer to force or the oppositely oriented acceleration for negative mass. It is used in certain speculative theories, such as those on the construction of wormholes. The closest known real representative of such exotic matter is a region of pseudo-negative pressure density produced by the Casimir effect, a physical force arising from a quantized field. Quantization is the process of transition from a classical understanding of physical phenomena to a newer understanding known as quantum mechanics; field quantization is a procedure for constructing a quantum field theory starting from a classical field theory. Dark Energy.

Inertial mass is a mass parameter giving the inertial resistance to acceleration of the body when responding to all types of force. Gravitational mass is determined by the strength of the gravitational force experienced by the body when in the gravitational field g.

Casimir Effect is a physical force arising from a quantized field. The typical example is two uncharged conductive plates in a vacuum, placed a few nanometers apart. In a classical description, the lack of an external field means that there is no field between the plates, and no force would be measured between them. When this field is instead studied using the quantum electrodynamic vacuum, it is seen that the plates do affect the virtual photons which constitute the field, and generate a net force, either an attraction or a repulsion depending on the specific arrangement of the two plates. Although the Casimir effect can be expressed in terms of virtual particles interacting with the objects, it is best described and more easily calculated in terms of the Zero Point Energy of a quantized field in the intervening space between the objects. This force has been measured and is a striking example of an effect captured formally by second quantization. The treatment of boundary conditions in these calculations has led to some controversy. In fact, "Casimir's original goal was to compute the van der Waals force between polarizable molecules", such as those of the conductive plates, so the effect can be interpreted without any reference to the zero-point energy (vacuum energy) of quantum fields. Because the strength of the force falls off rapidly with distance, it is measurable only when the distance between the objects is extremely small. On a submicron scale, this force becomes so strong that it becomes the dominant force between uncharged conductors. In fact, at separations of 10 nm (about 100 times the typical size of an atom), the Casimir effect produces the equivalent of about 1 atmosphere of pressure (the precise value depending on surface geometry and other factors).
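
For the idealized case of two perfectly conducting, infinite parallel plates, the standard textbook result for the Casimir pressure is P = π²ħc / (240 a⁴), where a is the plate separation. That formula is not given in the text, and real plates deviate from it (geometry and other factors matter, as noted), so this Python sketch is only an order-of-magnitude check of the "about 1 atmosphere at 10 nm" figure and of how steeply the force falls with distance.

```python
import math

HBAR = 1.054571817e-34  # reduced Planck constant, J*s
C = 299_792_458.0       # speed of light, m/s
ATM = 101_325.0         # one standard atmosphere, Pa


def casimir_pressure(separation_m: float) -> float:
    """Magnitude of the attractive Casimir pressure (Pa) between two ideal,
    perfectly conducting, infinite parallel plates: P = pi^2 * hbar * c / (240 * a^4)."""
    return math.pi**2 * HBAR * C / (240.0 * separation_m**4)


if __name__ == "__main__":
    for a_nm in (10, 100, 1000):
        p = casimir_pressure(a_nm * 1e-9)
        print(f"a = {a_nm:>4} nm: {p:.3e} Pa ({p / ATM:.2e} atm)")
```

The 1/a⁴ dependence means that doubling the gap cuts the pressure by a factor of 16, which is why the effect is only measurable at very small separations.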

Zero Point Energy - Quantized Energy - Anti-Gravity - Magnets

Biefeld–Brown Effect is an electrical effect that produces an ionic wind that transfers its momentum to surrounding neutral particles.

Pauli Exclusion Principle is the quantum mechanical principle which states that two or more identical fermions (particles with half-integer spin) cannot occupy the same quantum state within a quantum system simultaneously. In the case of electrons in atoms, it can be stated as follows: it is impossible for two electrons of a poly-electron atom to have the same values of the four quantum numbers.
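
The four-quantum-number statement can be turned into a counting exercise. This sketch assumes the standard hydrogen-like rules for the allowed values (l from 0 to n − 1, m_l from −l to +l, m_s = ±1/2), which the text does not list. Since the Pauli principle allows at most one electron per distinct set of four numbers, counting the sets gives each shell's maximum capacity. It ignores the actual filling order of real multi-electron atoms.

```python
from fractions import Fraction


def allowed_states(n: int) -> list[tuple[int, int, int, Fraction]]:
    """All distinct (n, l, m_l, m_s) quantum-number sets for principal number n."""
    states = []
    for ell in range(n):                      # orbital number l = 0 .. n-1
        for m_l in range(-ell, ell + 1):      # magnetic number m_l = -l .. +l
            for m_s in (Fraction(1, 2), Fraction(-1, 2)):  # spin: +1/2 or -1/2
                states.append((n, ell, m_l, m_s))
    return states


if __name__ == "__main__":
    for n in range(1, 5):
        states = allowed_states(n)
        # Pauli: no two electrons may share all four numbers, so each distinct
        # tuple holds at most one electron and the capacity is len(states).
        assert len(states) == len(set(states))
        print(f"shell n = {n}: at most {len(states)} electrons (2n^2 = {2 * n * n})")
```

The counts come out as 2, 8, 18 and 32, the familiar 2n² shell capacities.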

Cavitation is the formation of vapour cavities in a liquid, small liquid-free zones ("bubbles" or "voids"), that are the consequence of forces acting upon the liquid. It usually occurs when a liquid is subjected to rapid changes of pressure that cause the formation of cavities in the liquid where the pressure is relatively low. When subjected to higher pressure, the voids implode and can generate an intense shock wave.

Elements - Minerals - Mind over Matter

‘Negative mass’ created at Washington State University

Negative Energy is a concept used in physics to explain the nature of certain fields, including the gravitational field and a number of quantum field effects. In more speculative theories, negative energy is involved in wormholes which allow time travel and warp drives for faster-than-light space travel.

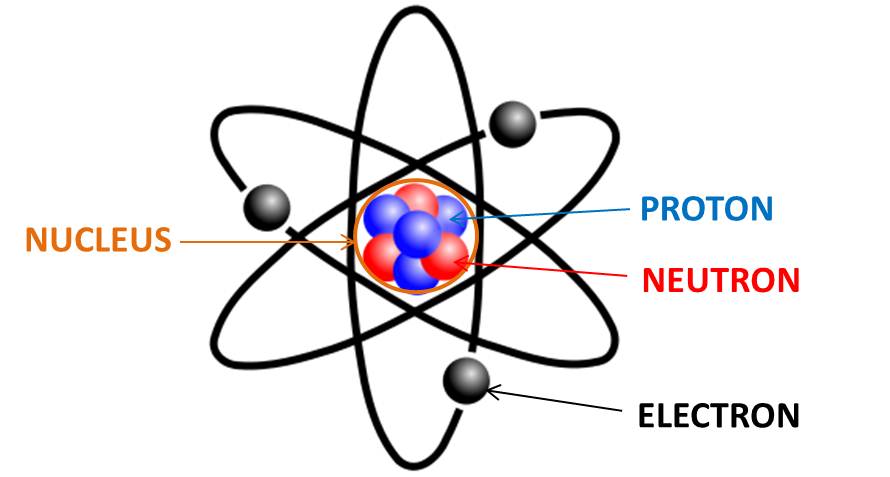

Atoms - Matter - Tiny Particles

An Atom is the smallest constituent unit of ordinary matter that has the properties of a chemical element. Every solid, liquid, gas, and plasma is composed of neutral or ionized atoms.

Atoms with electrons are 99.9% empty space.

Atomic Number - Atoms (youtube) - Atoms (youtube)

Atoms are very small: around one ten-billionth of a meter (10⁻¹⁰ m), or roughly 1 angstrom, across, which is one ten-millionth of a millimeter (there are 1,000 mm in 1 meter). A nanometer is one millionth of a millimeter, so a single nanometer spans only a few atoms side by side. A hydrogen atom is about 0.1 nanometers, or 0.0000001 of a millimeter, in diameter. However, atoms do not have well-defined boundaries, and there are different ways to define their size that give different but close values. The nucleus accounts for 99.9% of an atom's mass. So what would be the size difference of an atom if you only measured the size of the protons and neutrons and did not measure the space or the electrons? How many atoms are in a single drop of water? If a single atom were the size of a football stadium, the nucleus of the atom would be about the size of your eyeball, and the electrons circling the stadium would be invisible to you. The nucleus is about 23,000 to 145,000 times smaller in diameter than the atom as a whole. If an atom's outer electron layer were the size of a basketball, the nucleus would be so small that you could not see it with your own eyes. An atom is 99.9% empty space. The world gets really weird at that scale. If you shrank down until you were just a little bigger than an atom, holding its nucleus in the palm of your hand, the next nucleus would be so far away that you could not see it. Life at that scale would look like empty space to a human.
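
The drop-of-water question can be answered with a quick estimate. This sketch assumes a drop volume of 0.05 mL (the common rule of thumb of about 20 drops per millilitre; real drops vary), a water density of 1 g/mL, and a molar mass of 18.015 g/mol; none of these values is given in the text.

```python
AVOGADRO = 6.02214076e23   # particles per mole (exact since the 2019 SI)
WATER_MOLAR_MASS = 18.015  # g/mol for H2O
WATER_DENSITY = 1.0        # g/mL, approximate at room temperature
ATOMS_PER_MOLECULE = 3     # two hydrogen atoms + one oxygen atom


def atoms_in_water(volume_ml: float) -> float:
    """Estimate the number of atoms in a given volume of liquid water."""
    grams = volume_ml * WATER_DENSITY
    molecules = grams / WATER_MOLAR_MASS * AVOGADRO
    return molecules * ATOMS_PER_MOLECULE


if __name__ == "__main__":
    drop_ml = 0.05  # assumed drop volume
    print(f"~{atoms_in_water(drop_ml):.1e} atoms in a {drop_ml} mL drop")
```

Under these assumptions a single drop holds roughly 5×10²¹ atoms, about 1.7×10²¹ water molecules.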

Size Scales (nano) - Ions - Relativity - Weighing Atoms with Electrons

All Atoms have at least one proton in their core, and the number of protons determines which kind of element an atom is. Neutral atoms also have electrons, negatively charged particles that move around in the space surrounding the positively charged nuclear core. The most common form of hydrogen has one proton, one electron and no neutrons. The motion of the electrons around the core, together with their intrinsic spin, can turn the atom into a tiny magnet. Each such atom in an object creates a small magnetic field. In most materials, these atomic magnets point in many random directions, so their forces cancel each other out. There are some special materials, though, where the magnetic moments of most of the atoms line up in the same direction. The forces of the atoms combine and the object behaves as a magnet. Perpetual Motion.

Carbon Atom - Nitrogen - Oxygen - Photons (light)

Superatom is any cluster of atoms that seems to exhibit some of the properties of elemental atoms. Sodium atoms, when cooled from vapor, naturally condense into clusters, preferentially containing a magic number of atoms (2, 8, 20, 40, 58, etc.). The first two of these can be recognized as the numbers of electrons needed to fill the first and second shells, respectively. The superatom suggestion is that free electrons in the cluster occupy a new set of orbitals that are defined by the entire group of atoms, i.e. the cluster, rather than by each individual atom separately (non-spherical or doped clusters show deviations in the number of electrons that form a closed shell, as the potential is defined by the shape of the positive nuclei). Superatoms tend to behave chemically in a way that will allow them to have a closed shell of electrons, in this new counting scheme. Therefore, a superatom with one more electron than a full shell should give up that electron very easily, similar to an alkali metal, and a cluster with one electron short of a full shell should have a large electron affinity, similar to a halogen.

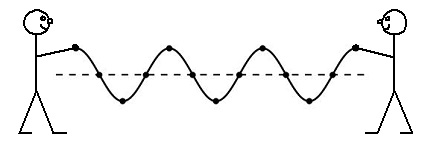

Waves - Resonance - Oscillation

Atomic Theory is a scientific theory of the nature of matter, which states that matter is composed of discrete units called atoms. Artificial Atom.

Atomism is a natural philosophy that developed in several ancient traditions. The atomists theorized that nature consists of two fundamental principles: Atom and Void. Unlike their modern scientific namesake in atomic theory, philosophical atoms come in an infinite variety of shapes and sizes, each indestructible, immutable and surrounded by a void where they collide with the others or hook together forming a cluster. Clusters of different shapes, arrangements, and positions give rise to the various macroscopic substances in the world.

Sound of an Atom - D-Note - 587.33 Hz (youtube)

Everything that you see in the world, including yourself, is made of just three particles of matter, protons, neutrons and electrons, that are interacting through a handful of forces, gravity, electromagnetism and the nuclear forces. DNA - Life.

An Atom is so much smaller than the wavelength of visible light (roughly 400 to 700 nanometers) that light cannot resolve it: a single atom lies far below the smallest detail that visible light can reveal. Even the most powerful light-focusing microscopes can't visualize single atoms.
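
One way to quantify this is the Abbe diffraction limit, d = λ / (2·NA), which is not mentioned in the text but is the usual estimate of the smallest detail a light microscope can resolve. This sketch assumes a numerical aperture of 1.4 (a high-end oil-immersion objective) and the 0.1 nm atomic size given earlier in this section.

```python
def abbe_limit_nm(wavelength_nm: float, numerical_aperture: float) -> float:
    """Smallest resolvable separation d = lambda / (2 * NA) (Abbe criterion), in nm."""
    return wavelength_nm / (2.0 * numerical_aperture)


if __name__ == "__main__":
    atom_nm = 0.1  # typical atomic diameter, from the text
    for wavelength in (400.0, 550.0, 700.0):   # violet, green and red visible light
        d = abbe_limit_nm(wavelength, 1.4)     # NA = 1.4 is an assumed, near-best objective
        print(f"lambda = {wavelength:.0f} nm: resolves ~{d:.0f} nm, "
              f"about {d / atom_nm:,.0f} atom diameters")
```

Even in the best case the limit is on the order of 150 to 250 nm, thousands of atom diameters, so individual atoms cannot be resolved with visible light.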

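Atoms in your body are 99.9% empty space, and many of them are not the ones that you were born with. So why do I feel solid? Elementary particles have mass, and the space between elementary particles is filled with binding energy that also has the properties of mass. The feeling of solidity itself comes mainly from the electromagnetic repulsion between the electron clouds of neighboring atoms, together with the Pauli exclusion principle, which prevents their electrons from crowding into the same quantum states.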

Atomic Nuclei - Binding Energy

Nuclear Binding Energy is the energy that would be required to disassemble the nucleus of an atom into its component parts. These component parts are neutrons and protons, which are collectively called nucleons. The binding energy of nuclei is due to the attractive forces that hold these nucleons together, and it is always a positive number, since all nuclei would require the expenditure of energy to separate them into individual protons and neutrons. The mass of an atomic nucleus is less than the sum of the individual masses of the free constituent protons and neutrons (according to Einstein's equation E = mc²), and this 'missing mass' is known as the mass defect; it represents the energy that was released when the nucleus was formed.
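
The mass defect can be turned into a binding energy directly. This sketch assumes tabulated atomic masses (AME2016 values for iron-56 and deuterium, CODATA 2018 for the neutron and the u-to-MeV conversion), none of which appear in the text; the helper name is illustrative. It uses the iron nucleus from the discussion of mass and energy earlier in this section.

```python
U_TO_MEV = 931.49410242          # energy equivalent of 1 atomic mass unit, MeV (CODATA 2018)
M_HYDROGEN_ATOM = 1.00782503223  # u, mass of a 1H atom (proton + electron)
M_NEUTRON = 1.00866491595        # u


def binding_energy_mev(z: int, n: int, atomic_mass_u: float) -> float:
    """Nuclear binding energy from the mass defect, using neutral-atom masses.

    Using Z hydrogen-atom masses instead of Z bare proton masses lets the electron
    masses cancel against those included in the neutral atom's mass
    (the small electron binding energies are neglected)."""
    mass_defect_u = z * M_HYDROGEN_ATOM + n * M_NEUTRON - atomic_mass_u
    return mass_defect_u * U_TO_MEV


if __name__ == "__main__":
    # Iron-56: 26 protons and 30 neutrons; atomic mass 55.93493633 u (assumed table value).
    be = binding_energy_mev(26, 30, 55.93493633)
    print(f"Fe-56 binding energy: {be:.1f} MeV ({be / 56:.2f} MeV per nucleon)")
    # Deuterium (1 proton, 1 neutron) for comparison.
    print(f"H-2 binding energy: {binding_energy_mev(1, 1, 2.01410177812):.4f} MeV")
```

The iron-56 nucleus comes out near 492 MeV, close to 8.8 MeV per nucleon, while the loosely bound deuteron has only about 2.2 MeV in total.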

Binding Energy is the energy required to disassemble a whole system into separate parts. A bound system typically has a lower potential energy than the sum of its constituent parts; this is what keeps the system together. Often this means that energy is released upon the creation of a bound state. This definition corresponds to a positive binding energy. Coulomb's Law (static) - Chemical Bonds.

Nuclear Force is the force between protons and neutrons, subatomic particles that are collectively called nucleons. The nuclear force is responsible for binding protons and neutrons into atomic nuclei. Neutrons and protons are affected by the nuclear force almost identically. Since protons have charge +1 e, they experience a strong electric repulsion (following Coulomb's law) that tends to push them apart, but at short range the attractive nuclear force overcomes the repulsive electromagnetic force. The mass of a nucleus is less than the sum total of the individual masses of the protons and neutrons which form it. The difference in mass between bound and unbound nucleons is known as the mass defect. Energy is released when some large nuclei break apart, and it is this energy that is used in nuclear power and nuclear weapons. The longer-range part of the nuclear force is carried by pions, which have a mass of about 138 MeV/c². Excited State.

Atomic Nucleus is the small, dense region consisting of protons and neutrons at the center of an atom. An atom is composed of a positively charged nucleus, with a cloud of negatively charged electrons surrounding it, bound together by electrostatic force. Almost all of the mass of an atom is located in the nucleus, with a very small contribution from the electron cloud. Protons and neutrons are bound together to form a nucleus by the nuclear force. The diameter of the nucleus is in the range of 1.75 fm (1.75×10⁻¹⁵ m) for hydrogen (the diameter of a single proton) to about 15 fm for the heaviest atoms, such as uranium. These dimensions are much smaller than the diameter of the atom itself (nucleus + electron cloud), by a factor of about 23,000 (uranium) to about 145,000 (hydrogen). Some atomic nuclei can be spherical, oblate and prolate simultaneously (shape coexistence). Oblate is a flattened spheroid; prolate is an elongated spheroid, shaped like an American football or rugby ball.
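
The quoted nuclear sizes are consistent with the common empirical rule R ≈ r₀·A^(1/3), where A is the mass number. That rule and the value r₀ ≈ 1.2 fm are assumptions not stated in the text; the rule reflects nucleons packing at roughly constant density, and it is unreliable for the very lightest nuclei such as hydrogen.

```python
R0_FM = 1.2  # empirical radius constant in fm; values of about 1.2 to 1.25 fm are commonly quoted


def nuclear_diameter_fm(mass_number: int) -> float:
    """Approximate nuclear diameter 2 * r0 * A^(1/3), in femtometres."""
    return 2.0 * R0_FM * mass_number ** (1.0 / 3.0)


if __name__ == "__main__":
    for name, a in (("He-4", 4), ("Fe-56", 56), ("U-238", 238)):
        print(f"{name}: ~{nuclear_diameter_fm(a):.1f} fm")
```

For uranium-238 this gives a diameter of about 15 fm, matching the figure above; because volume grows with A, the diameter grows only as the cube root of the nucleon count.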

Nucleon is either a proton or a neutron, considered in its role as a component of an atomic nucleus. The number of nucleons in a nucleus defines an isotope's mass number (nucleon number).

Nucleosynthesis is the process that creates new atomic nuclei from pre-existing nucleons (protons and neutrons) and nuclei. According to current theories, the first nuclei were formed a few minutes after the Big Bang, through nuclear reactions in a process called Big Bang Nucleosynthesis (wiki).

Neutron Capture is a nuclear reaction in which an atomic nucleus and one or more neutrons collide and merge to form a heavier nucleus. Since neutrons have no electric charge, they can enter a nucleus more easily than positively charged protons, which are repelled electrostatically. Neutron capture plays an important role in the cosmic nucleosynthesis of heavy elements. In stars it can proceed in two ways: as a rapid (r-process) or a slow process (s-process). Nuclei of masses greater than 56 cannot be formed by thermonuclear reactions (i.e. by nuclear fusion), but can be formed by neutron capture. Neutron capture on protons yields a line at 2.223 MeV predicted and commonly observed in solar flares.
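
The 2.223 MeV line can be reproduced from particle masses. A slow neutron captured by a proton forms a deuteron, and the mass defect is released mostly as a single gamma ray; energy-momentum conservation gives E_γ = (M_i² − M_f²)/(2M_i) in units with c = 1, slightly below the binding energy because the deuteron recoils. The CODATA 2018 rest energies used here are assumed values, not given in the text.

```python
# Rest energies in MeV (CODATA 2018 values, assumed for this sketch).
M_PROTON = 938.27208816
M_NEUTRON = 939.56542052
M_DEUTERON = 1875.61294257


def capture_gamma_energy(m_initial: float, m_final: float) -> float:
    """Photon energy when a system at rest with mass m_initial becomes m_final + one photon.

    From energy-momentum conservation (c = 1): E_gamma = (M_i^2 - M_f^2) / (2 M_i).
    It is slightly less than M_i - M_f because the final nucleus recoils."""
    return (m_initial**2 - m_final**2) / (2.0 * m_initial)


if __name__ == "__main__":
    m_i = M_PROTON + M_NEUTRON   # slow neutron + proton; kinetic energy neglected
    binding = m_i - M_DEUTERON   # mass defect expressed as energy
    print(f"deuteron binding energy: {binding:.4f} MeV")
    print(f"capture gamma-ray energy: {capture_gamma_energy(m_i, M_DEUTERON):.4f} MeV")
```

The binding energy is about 2.2246 MeV and the recoil takes roughly 1.3 keV, leaving a gamma ray of about 2.223 MeV.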

R-Process or rapid neutron-capture process, is a set of nuclear reactions that is responsible for the creation of approximately half of the atomic nuclei heavier than iron; the "heavy elements", with the other half produced by the p-process and s-process. The r-process usually synthesizes the most neutron-rich stable isotopes of each heavy element. The r-process can typically synthesize the heaviest four isotopes of every heavy element, and the two heaviest isotopes, which are referred to as r-only nuclei, can be created via the r-process only. Abundance peaks for the r-process occur near mass numbers A = 82 (elements Se, Br, and Kr), A = 130 (elements Te, I, and Xe) and A = 196 (elements Os, Ir, and Pt).

Why Neutrons and Protons are modified inside Nuclei. The structure of a neutron or a proton is modified when the particle is bound in an atomic nucleus. Experimental data suggest an explanation for this phenomenon that could have broad implications for nuclear physics. Modified structure of protons and neutrons in correlated pairs. The atomic nucleus is made of protons and neutrons (nucleons), which are themselves composed of quarks and gluons. Understanding how the quark–gluon structure of a nucleon bound in an atomic nucleus is modified by the surrounding nucleons is an outstanding challenge.

A careful re-analysis of previously collected data has revealed a possible link between correlated protons and neutrons in the nucleus and a 35-year-old mystery. The re-analysis led to the extraction of a universal function that describes the EMC Effect, the once-shocking discovery that quarks inside nuclei have lower average momenta than predicted, and supports an explanation for the effect.

EMC Effect is the surprising observation that the cross section for deep inelastic scattering from an atomic nucleus is different from that of the same number of free protons and neutrons (collectively referred to as nucleons). From this observation, it can be inferred that the quark momentum distributions in nucleons bound inside nuclei are different from those of free nucleons. This effect was first observed in 1983 at CERN by the European Muon Collaboration, hence the name "EMC effect". It was unexpected, since the average binding energy of protons and neutrons inside nuclei is insignificant when compared to the energy transferred in deep inelastic scattering reactions that probe quark distributions. While over 1000 scientific papers have been written on the topic and numerous hypotheses have been proposed, no definitive explanation for the cause of the effect has been confirmed. Determining the origin of the EMC effect is one of the major unsolved problems in the field of nuclear physics.

Orbital Hybridisation is the concept of mixing atomic orbitals into new hybrid orbitals (with different energies, shapes, etc., than the component atomic orbitals) suitable for the pairing of electrons to form chemical bonds in valence bond theory. Hybrid orbitals are very useful in the explanation of molecular geometry and atomic bonding properties. Although sometimes taught together with the valence shell electron-pair repulsion (VSEPR) theory, valence bond and hybridisation are in fact not related to the VSEPR model.

Stable Atom is an atom that has enough binding energy to hold the nucleus together permanently. An unstable atom does not have enough binding energy to hold the nucleus together permanently and is called a radioactive atom.

Island of Stability is a predicted set of isotopes of superheavy elements that may have considerably longer half-lives than known isotopes of these elements. It is predicted to appear as an "island" in the chart of nuclides, separated from known stable and long-lived primordial radionuclides. Its theoretical existence is attributed to stabilizing effects of predicted "magic numbers" of protons and neutrons in the superheavy mass region.

Brownian Motion is the random motion of particles suspended in a fluid (a liquid or a gas) resulting from their collision with the fast-moving atoms or molecules in the gas or liquid. Elements.

Breakthrough in Nuclear Physics. High-precision measurements of the strong interaction between stable and unstable particles. The positively charged protons in atomic nuclei should actually repel each other, and yet even heavy nuclei with many protons and neutrons stick together. The so-called strong interaction is responsible for this. Scientists have now developed a method to precisely measure the strong interaction utilizing particle collisions in the ALICE experiment at CERN in Geneva. The strong interaction is one of the four fundamental forces in physics. It is essentially responsible for the existence of atomic nuclei that consist of several protons and neutrons. Protons and neutrons are made up of smaller particles, the so-called quarks. And they too are held together by the strong interaction. ALICE stands for A Large Ion Collider Experiment.

Proton

Proton is a subatomic particle, symbol p or p⁺, with a positive electric charge of +1 e (one elementary charge) and a mass slightly less than that of a neutron. Protons and neutrons, each with masses of approximately one atomic mass unit, are collectively referred to as "nucleons". One or more protons are present in the nucleus of every atom. They are a necessary part of the nucleus. The number of protons in the nucleus is the defining property of an element, and is referred to as the atomic number (represented by the symbol Z). Antiproton is the antiparticle of the proton. Antiprotons are stable, but they are typically short-lived, since any collision with a proton will cause both particles to be annihilated in a burst of energy. Protons are composite particles composed of three valence quarks: two up quarks of charge +2/3 e and one down quark of charge −1/3 e. In vacuum, when free electrons are present, a sufficiently slow proton may pick up a single free electron, becoming a neutral hydrogen atom, which is chemically a free radical.
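
The fractional quark charges add up to whole-number charges. This sketch uses exact fractions; the neutron's up-down-down (udd) quark content is a standard fact not stated in the text, and `hadron_charge` is a hypothetical helper.

```python
from fractions import Fraction

# Electric charges of the valence quarks, in units of the elementary charge e.
QUARK_CHARGE = {"u": Fraction(2, 3), "d": Fraction(-1, 3)}


def hadron_charge(quarks: str) -> Fraction:
    """Sum the charges of a hadron's valence quarks, e.g. 'uud' for the proton."""
    return sum((QUARK_CHARGE[q] for q in quarks), Fraction(0))


if __name__ == "__main__":
    print("proton  (uud):", hadron_charge("uud"))  # 2/3 + 2/3 - 1/3 = +1
    print("neutron (udd):", hadron_charge("udd"))  # 2/3 - 1/3 - 1/3 = 0
```

Using `Fraction` instead of floating point keeps the thirds exact, so the totals come out as exactly 1 and 0.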

Charge Radius is a measure of the size of an atomic nucleus, particularly of a proton or a deuteron. It can be measured by the scattering of electrons by the nucleus and also inferred from the effects of finite nuclear size on electron energy levels as measured in atomic spectra.

Proton Radius Puzzle was an unanswered problem in physics relating to the size of the proton. Historically the proton radius was measured via two independent methods, which converged to a value of about 0.877 femtometres (1 fm = 10⁻¹⁵ m). This value was challenged by a 2010 experiment utilizing a third method, which produced a radius about 4% smaller than this, or 0.842 femtometres. The discrepancy was resolved when research conducted by Hessels et al. confirmed the same radius for 'electronic' hydrogen as well as its 'muonic' variant (0.833 femtometres).

Interaction of Free Protons with Ordinary Matter. Although protons have an affinity for oppositely charged electrons, this is a relatively low-energy interaction, so free protons must lose sufficient velocity (and kinetic energy) before they can become closely associated with and bound to electrons. High-energy protons traversing ordinary matter lose energy through collisions with atomic nuclei and through ionization of atoms (removing electrons) until they are slowed sufficiently to be captured by the electron cloud of a normal atom. However, in such an association with an electron, the character of the bound proton is not changed, and it remains a proton. The attraction of low-energy free protons to any electrons present in normal matter (such as the electrons in normal atoms) causes free protons to stop and form a new chemical bond with an atom. Such a bond forms at any sufficiently "cold" temperature (i.e., comparable to temperatures at the surface of the Sun) and with any type of atom. Thus, in interaction with any type of normal (non-plasma) matter, low-velocity free protons are attracted to electrons in any atom or molecule with which they come in contact, causing the proton and molecule to combine. Such molecules are then said to be "protonated", and as a result they often become, chemically, so-called Brønsted acids.

Proton Pump is an integral membrane protein pump that builds up a proton gradient across a biological membrane. Transport of the positively charged proton is typically electrogenic, i.e. it generates an electrical field across the membrane, also called the membrane potential. Proton transport is electrogenic unless it is neutralized electrically by transport of either a corresponding negative charge in the same direction or a corresponding positive charge in the opposite direction. An example of a proton pump that is not electrogenic is the proton/potassium pump of the gastric mucosa, which catalyzes a balanced exchange of protons and potassium ions. The combined transmembrane gradient of protons and charges created by proton pumps is called an electrochemical gradient. An electrochemical gradient represents a store of energy (potential energy) that can be used to drive a multitude of biological processes such as ATP synthesis, nutrient uptake and action potential formation. In cell respiration, the proton pump uses energy to transport protons from the matrix of the mitochondrion to the inter-membrane space. It is an active pump that generates a proton concentration gradient across the inner mitochondrial membrane, so that there are more protons outside the matrix than inside. The difference in pH and electric charge (ignoring differences in buffer capacity) creates an electrochemical potential difference that works similarly to a battery or energy-storing unit for the cell. The process could also be seen as analogous to cycling uphill or charging a battery for later use, as it produces potential energy. The proton pump does not create energy, but forms a gradient that stores energy for later use. Proton Tunneling.
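The two parts of the electrochemical gradient, the pH difference and the charge difference, can be combined into the free energy stored per mole of pumped protons using the standard relation ΔG = 2.303·R·T·ΔpH + F·Δψ for a singly charged ion. The Python sketch below is illustrative only: the function name is hypothetical, and the inputs (ΔpH = 0.75, Δψ = 0.16 V, T = 310 K) are assumed round numbers, not values from the text:

```python
import math

R = 8.314462618   # gas constant, J/(mol*K)
F = 96485.33212   # Faraday constant, C/mol

def proton_transfer_free_energy(delta_pH: float, delta_psi: float,
                                temp_k: float = 310.0) -> float:
    """Free energy (J/mol) to move protons from the matrix (in) to the
    inter-membrane space (out).

    delta_pH  = pH_in - pH_out    (positive when the outside is more acidic)
    delta_psi = psi_out - psi_in  (volts; positive when the outside is more positive)
    """
    chemical = R * temp_k * math.log(10) * delta_pH  # concentration (pH) term
    electrical = F * delta_psi                        # charge term, z = +1
    return chemical + electrical

# Assumed, illustrative inputs (not taken from the text).
dG = proton_transfer_free_energy(delta_pH=0.75, delta_psi=0.16)
print(f"{dG / 1000:.1f} kJ/mol")  # 19.9 kJ/mol
```

With these assumed numbers the charge term dominates, which is one way to see why both the pH and the membrane potential matter for the stored energy.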

Neutron

Neutron is a subatomic particle, symbol n or n⁰, with no net electric charge. The amounts of positive and negative charge inside the neutron are equal, so an electrically neutral object can still contain charges. The neutron's mass is slightly larger than that of a proton. Protons and neutrons, each with a mass of approximately one atomic mass unit, constitute the nucleus of an atom, and they are collectively referred to as nucleons. Their properties and interactions are described by nuclear physics. The chemical properties of an atom are mostly determined by the configuration of electrons that orbit the atom's heavy nucleus. The electron configuration is determined by the charge of the nucleus, which is determined by the number of protons, or atomic number. The number of neutrons is the neutron number. Neutrons do not affect the electron configuration, but the sum of the atomic and neutron numbers is the mass number of the nucleus. Atoms of a chemical element that differ only in neutron number are called isotopes. For example, carbon, with atomic number 6, has an abundant isotope carbon-12 with 6 neutrons and a rare isotope carbon-13 with 7 neutrons. Some elements occur in nature with only one stable isotope, such as fluorine. Other elements occur with many stable isotopes, such as tin with ten stable isotopes. The properties of an atomic nucleus depend on both atomic and neutron numbers. With their positive charge, the protons within the nucleus are repelled by the long-range electromagnetic force, but the much stronger, short-range nuclear force binds the nucleons closely together. Neutrons are required for the stability of nuclei, with the exception of the single-proton hydrogen nucleus. Neutrons are produced copiously in nuclear fission and fusion. They are a primary contributor to the nucleosynthesis of chemical elements within stars through fission, fusion, and neutron capture processes. The neutron is essential to the production of nuclear power.
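The idea that a neutral particle still contains charges can be made concrete with the neutron's valence quarks. The quark content used here (one up quark, two down quarks) is standard physics but is not stated in this entry, so treat it as an added assumption; the dictionary name is illustrative:

```python
from fractions import Fraction

# Quark charges in units of e (same values as for the proton).
QUARK_CHARGE = {"u": Fraction(2, 3), "d": Fraction(-1, 3)}

neutron = "udd"  # assumed valence content: one up, two down

# Separate the positive and negative contributions.
positive = sum((QUARK_CHARGE[q] for q in neutron if QUARK_CHARGE[q] > 0), Fraction(0))
negative = sum((QUARK_CHARGE[q] for q in neutron if QUARK_CHARGE[q] < 0), Fraction(0))

print("positive:", positive, "negative:", negative, "net:", positive + negative)
# positive: 2/3 negative: -2/3 net: 0
```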
In the decade after the neutron was discovered by James Chadwick in 1932, neutrons were used to induce many different types of nuclear transmutations. With the discovery of nuclear fission in 1938, it was quickly realized that, if a fission event produced neutrons, each of these neutrons might cause further fission events, in a cascade known as a nuclear chain reaction. These events and findings led to the first self-sustaining nuclear reactor (Chicago Pile-1, 1942) and the first nuclear weapon (Trinity, 1945). Free neutrons do not directly ionize atoms, but they cause ionizing radiation indirectly, so they can be a biological hazard, depending on dose. A small natural "neutron background" flux of free neutrons exists on Earth, caused by cosmic ray showers and by the natural radioactivity of spontaneously fissionable elements in the Earth's crust. Dedicated neutron sources like neutron generators, research reactors and spallation sources produce free neutrons for use in irradiation and in neutron scattering experiments.

Isotopes are variants of a particular chemical element which differ in neutron number. All isotopes of a given element have the same number of protons in each atom. The number of protons within the atom's nucleus is called the atomic number and is equal to the number of electrons in the neutral (non-ionized) atom. Each atomic number identifies a specific element, but not the isotope; an atom of a given element may have a wide range in its number of neutrons. The number of nucleons (both protons and neutrons) in the nucleus is the atom's mass number, and each isotope of a given element has a different mass number. For example, carbon-12, carbon-13 and carbon-14 are three isotopes of the element carbon with mass numbers 12, 13 and 14 respectively. The atomic number of carbon is 6, which means that every carbon atom has 6 protons, so the neutron numbers of these isotopes are 6, 7 and 8 respectively.
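The carbon example is the relation N = A − Z (neutron number equals mass number minus atomic number). A small Python sketch; `neutron_number` is a hypothetical helper name:

```python
CARBON_Z = 6  # atomic number: every carbon atom has 6 protons

def neutron_number(mass_number: int, atomic_number: int) -> int:
    """Neutron number N = A - Z (mass number minus proton count)."""
    if mass_number < atomic_number:
        raise ValueError("mass number cannot be smaller than atomic number")
    return mass_number - atomic_number

for a in (12, 13, 14):
    print(f"carbon-{a}: {neutron_number(a, CARBON_Z)} neutrons")
# carbon-12: 6 neutrons
# carbon-13: 7 neutrons
# carbon-14: 8 neutrons
```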

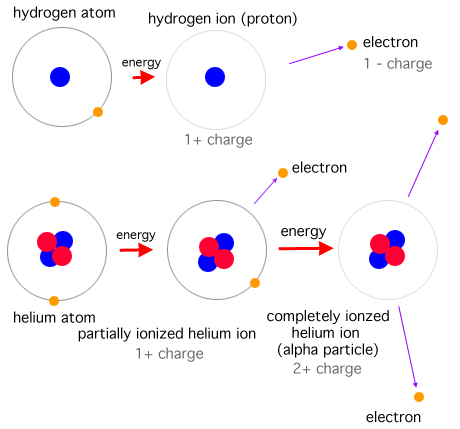

Electron

Electron is a subatomic particle, symbol e⁻ or β⁻, with a negative electric charge, which is surrounded by an electric field. An electron moving in an orbit also creates a magnetic field, behaving like a tiny magnetic dipole. Electrons belong to the first generation of the lepton particle family, and are generally thought to be elementary particles because they have no known components or substructure. The electron has a mass that is approximately 1/1836 that of the proton. An electron can be spin up or spin down. Quantum mechanical properties of the electron include an intrinsic angular momentum, or spin, of a half-integer value, expressed in units of the reduced Planck constant, ħ. As it is a fermion, no two electrons can occupy the same quantum state, in accordance with the Pauli exclusion principle. Like all matter, electrons have properties of both particles and waves: they can collide with other particles and can be diffracted like light. The wave properties of electrons are easier to observe in experiments than those of other particles like neutrons and protons, because electrons have a lower mass and hence a larger de Broglie wavelength for a given energy.
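The claim that a lighter particle has a longer de Broglie wavelength at the same energy follows from λ = h/p with p = √(2mE) (non-relativistic). A minimal Python sketch, assuming CODATA values for the constants and an illustrative kinetic energy of 100 eV for both particles; the function name is hypothetical:

```python
import math

H = 6.62607015e-34             # Planck constant, J*s
EV = 1.602176634e-19           # joules per electronvolt
M_ELECTRON = 9.1093837015e-31  # electron mass, kg
M_PROTON = 1.67262192369e-27   # proton mass, kg

def de_broglie_wavelength(mass_kg: float, kinetic_energy_ev: float) -> float:
    """Non-relativistic de Broglie wavelength lambda = h / sqrt(2 m E), in metres."""
    momentum = math.sqrt(2.0 * mass_kg * kinetic_energy_ev * EV)
    return H / momentum

lam_e = de_broglie_wavelength(M_ELECTRON, 100.0)  # about 0.12 nm
lam_p = de_broglie_wavelength(M_PROTON, 100.0)
print(f"electron: {lam_e:.3e} m, proton: {lam_p:.3e} m")
print(f"ratio: {lam_e / lam_p:.1f}")
```

At equal energy the ratio of wavelengths is √(m_p/m_e), roughly 43, so the electron's wavelength is about forty times longer and correspondingly easier to diffract.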

Poly-electron atom is an atom containing more than one electron. Hydrogen is the only atom in the periodic table that has just one electron in its orbitals in the ground state.

A new window into electron behavior: a material's band structure relates the momentum and energy of its electrons. Quantum mechanical tunneling is a process by which electrons can traverse energy barriers by simply appearing on the other side. An electron is a wave of probability. Q-Bit - Entanglement.

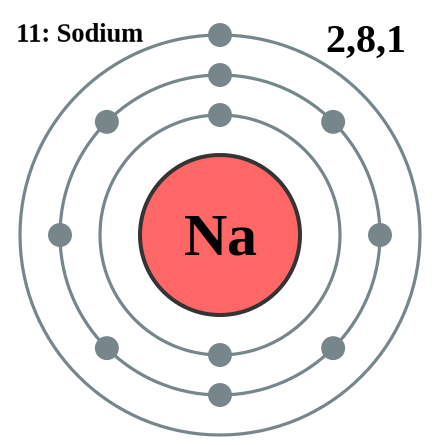

Electron Shell is the region around an atom's nucleus in which a group of electrons orbits. Each shell can contain only a fixed number of electrons: the first shell can hold up to 2 electrons, the second up to 8 (2 + 6), the third up to 18 (2 + 6 + 10), the fourth up to 32 and the fifth up to 50. The general formula is that the nth shell can in principle hold up to 2n² electrons. Since electrons are electrically attracted to the nucleus, an atom's electrons will generally occupy outer shells only if the inner shells have already been completely filled by other electrons. However, this is not a strict requirement: atoms may have two or even three incomplete outer shells. The valence shell is the outermost shell of an atom in its uncombined state, which contains the electrons most likely to account for the nature of any reactions involving the atom and of the bonding interactions it has with other atoms. Note that the outermost shell of an ion is not commonly termed the valence shell. Electrons in the valence shell are referred to as valence electrons. Energy Level: a particle that is bound or confined spatially can only take on certain discrete values of energy, called energy levels. If the potential energy is set to zero at infinite distance from the atomic nucleus or molecule (the usual convention), then bound electron states have negative potential energy. If an atom, ion, or molecule is at the lowest possible energy level, it and its electrons are said to be in the ground state. If it is at a higher energy level, it is said to be excited, and any electrons that have more energy than in the ground state are also said to be excited. If more than one quantum mechanical state has the same energy, those energy levels are called degenerate energy levels.
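(Electrons in s orbitals have a nonzero probability of being found inside the nucleus, so in that sense electrons also pass through the protons.)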
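The shell capacities come from adding up subshells: shell n contains subshells l = 0 … n − 1, each with 2l + 1 orbitals holding two electrons apiece, so the sum of 2(2l + 1) gives 2n². A short Python sketch; `shell_capacity` is a hypothetical helper name:

```python
def shell_capacity(n: int) -> int:
    """Electrons in shell n: sum of 2*(2l + 1) over subshells l = 0..n-1."""
    return sum(2 * (2 * l + 1) for l in range(n))

for n in range(1, 6):
    parts = " + ".join(str(2 * (2 * l + 1)) for l in range(n))
    print(f"shell {n}: {parts} = {shell_capacity(n)} (2n^2 = {2 * n * n})")
# shell 1: 2 = 2 (2n^2 = 2)
# shell 2: 2 + 6 = 8 (2n^2 = 8)
# shell 3: 2 + 6 + 10 = 18 (2n^2 = 18)
# shell 4: 2 + 6 + 10 + 14 = 32 (2n^2 = 32)
# shell 5: 2 + 6 + 10 + 14 + 18 = 50 (2n^2 = 50)
```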

Sublevels of Electron are known by the letters s, p, d, and f. The sublevels contain orbitals. The s sublevel has just one orbital, so it can contain 2 electrons max. The p sublevel has 3 orbitals, so it can contain 6 electrons max. The d sublevel has 5 orbitals, so it can contain 10 electrons max. And the f sublevel has 7 orbitals, so it can contain 14 electrons max. Examples of the sublevels found in various atoms are shown below; the superscript shows the number of electrons in each sublevel. Hydrogen: 1s¹, Carbon: 1s² 2s² 2p², Chlorine: 1s² 2s² 2p⁶ 3s² 3p⁵, Argon: 1s² 2s² 2p⁶ 3s² 3p⁶.
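The example configurations can be reproduced by filling sublevels in order of increasing n + l (ties broken by lower n), known as the Madelung or aufbau rule. This rule is not described in the text and is swapped in here as an assumption; it works for these light elements but has known exceptions in heavier ones (chromium and copper, for example). The helper names are hypothetical, and the electron counts are written inline (1s2) rather than as superscripts:

```python
LETTERS = "spdf"  # sublevel letters for l = 0, 1, 2, 3

def madelung_order(max_n: int = 7):
    """Sublevels (n, l) sorted by the Madelung rule: by n + l, then by n."""
    subshells = [(n, l) for n in range(1, max_n + 1) for l in range(min(n, 4))]
    return sorted(subshells, key=lambda nl: (nl[0] + nl[1], nl[0]))

def electron_configuration(z: int) -> str:
    """Ground-state configuration predicted by the Madelung rule (no exceptions)."""
    parts = []
    remaining = z
    for n, l in madelung_order():
        if remaining == 0:
            break
        count = min(remaining, 2 * (2 * l + 1))  # 2 electrons per orbital
        parts.append(f"{n}{LETTERS[l]}{count}")
        remaining -= count
    if remaining:
        raise ValueError("atomic number too large for this sketch")
    return " ".join(parts)

for name, z in [("Hydrogen", 1), ("Carbon", 6), ("Chlorine", 17), ("Argon", 18)]:
    print(f"{name}: {electron_configuration(z)}")
# Hydrogen: 1s1
# Carbon: 1s2 2s2 2p2
# Chlorine: 1s2 2s2 2p6 3s2 3p5
# Argon: 1s2 2s2 2p6 3s2 3p6
```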

Atomic Orbital is a mathematical function that describes the wave-like behavior of either one electron or a pair of electrons in an atom. This function can be used to calculate the probability of finding any electron of an atom in any specific region around the atom's nucleus. The term atomic orbital may also refer to the physical region or space where the electron can be calculated to be present, as defined by the particular mathematical form of the orbital.
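As an example of using an orbital to calculate the probability of finding an electron in a region, consider hydrogen's 1s orbital. Assuming the standard 1s result (not given in the text), the radial probability density is P(r) = 4r²e^(−2r) with r measured in Bohr radii a₀. The Python sketch below integrates it numerically with Simpson's rule (a swapped-in numerical method; helper names are hypothetical) and compares against the closed form 1 − 5e^(−2) for a sphere of radius a₀:

```python
import math

def radial_density_1s(r: float) -> float:
    """Hydrogen 1s radial probability density P(r) = 4 r^2 exp(-2r), r in units of a0."""
    return 4.0 * r * r * math.exp(-2.0 * r)

def probability_within(radius: float, steps: int = 1000) -> float:
    """Integrate P(r) from 0 to `radius` with Simpson's rule (steps must be even)."""
    if steps % 2:
        raise ValueError("steps must be even")
    h = radius / steps
    total = radial_density_1s(0.0) + radial_density_1s(radius)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * radial_density_1s(i * h)  # Simpson weights
    return total * h / 3.0

p = probability_within(1.0)
exact = 1.0 - 5.0 * math.exp(-2.0)  # closed form for a sphere of radius a0
print(f"P(r < a0) = {p:.4f} (exact {exact:.4f})")
```

Only about a third of the probability lies inside one Bohr radius, which illustrates why an orbital is better pictured as a probability cloud than as a fixed path.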

Electron Spin is a quantum property of electrons. It is a form of angular momentum. The magnitude of this angular momentum is permanent: like charge and rest mass, spin is a fundamental, unvarying property of the electron. If the electron spins clockwise on its axis, it is described as spin-up; counterclockwise is spin-down. This is a convenient picture, if not fully justifiable mathematically. The spin angular momentum associated with electron spin is independent of orbital angular momentum, which is associated with the electron's journey around the nucleus. Electron spin is not used to define electron shells, subshells, or orbitals, unlike the quantum numbers n, l, and mₗ. When two electrons are in the same orbital, they share the same n, l, and mₗ and occupy the same region of space within the atom. As a result, their spin quantum numbers cannot be the same: one must be spin-up and the other spin-down, because two electrons with all four quantum numbers identical cannot exist in the same atom.
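The exclusion rule for two electrons in one orbital amounts to a uniqueness check on the four quantum numbers (n, l, mₗ, mₛ). A minimal Python sketch, assuming electrons are represented as tuples with spin ±1/2; `violates_pauli` and the example lists are hypothetical names for illustration:

```python
from collections import Counter
from fractions import Fraction

UP, DOWN = Fraction(1, 2), Fraction(-1, 2)  # spin quantum number m_s

def violates_pauli(electrons):
    """Return the (n, l, ml, ms) tuples shared by more than one electron."""
    counts = Counter(electrons)
    return [state for state, c in counts.items() if c > 1]

# Two electrons in the same 1s orbital (n=1, l=0, ml=0) with opposite spins: allowed.
helium = [(1, 0, 0, UP), (1, 0, 0, DOWN)]
# Same orbital and same spin: all four quantum numbers identical, so not allowed.
forbidden = [(1, 0, 0, UP), (1, 0, 0, UP)]

print(violates_pauli(helium))     # []
print(violates_pauli(forbidden))  # [(1, 0, 0, Fraction(1, 2))]
```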